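The bright Mid-Autumn moon shines on rich households and poor households alike. A great comfort to the heart. (Fenghuo Xi Zhuhou, Jian Lai)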

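Preface

I am learning Kubernetes, and these notes help me organize and remember what I learned.

This post covers:

The basic theory of the two probe types, LivenessProbe and ReadinessProbe, plus demos of the three check methods: ExecAction, TCPSocketAction and HTTPGetAction.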


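Pod health checks and service availability checks

Purpose of health checks

Probes exist to keep pods robust. When a pod dies, its controller (for example a Deployment) creates a new one. But if the pod is still running while the application inside it has broken, the Deployment cannot detect that. Probes fill this gap by checking whether the pod is actually serving correctly.

Kubernetes checks pod health with two kinds of probes, LivenessProbe and ReadinessProbe, which the kubelet runs periodically to diagnose each container. Probes are declared on the containers in the Pod spec and executed by the kubelet on the node, so they work for any Pod, not only for Pods managed by a Deployment.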

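| Probe type | Description |
| --- | --- |
| LivenessProbe | Determines whether the container is alive (Running). If the LivenessProbe finds the container unhealthy, the kubelet kills the container and handles it according to the container's restart policy. If a container defines no LivenessProbe, the kubelet treats its liveness result as always Success. |
| ReadinessProbe | Determines whether the container's service is available (Ready). Only Pods that are Ready can receive requests. For Pods behind a Service, the link between the Service and the Pod's endpoint is also based on whether the Pod is Ready. If Ready turns False at runtime, the Pod is automatically removed from the Service's backend Endpoint list, and it is added back once it becomes Ready again. This ensures that clients calling the Service are never forwarded to an unavailable Pod instance. |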
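To show where the two probes live, here is a minimal sketch of a bare Pod that carries both probes on one container. No Deployment is involved, because the kubelet runs the probes for any Pod. The pod name `probe-demo`, the container name `web`, the nginx image and the path are assumptions chosen for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo            # hypothetical name
spec:
  containers:
  - name: web                 # hypothetical container name
    image: nginx
    ports:
    - containerPort: 80
    # Liveness: after failureThreshold consecutive failures the kubelet kills
    # the container, and restartPolicy decides what happens next.
    livenessProbe:
      tcpSocket:
        port: 80
    # Readiness: while this fails the Pod is not Ready and is removed from the
    # endpoints of any Service selecting it; the container is NOT restarted.
    readinessProbe:
      httpGet:
        path: /index.html
        port: 80
```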

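Check methods and parameters

Both LivenessProbe and ReadinessProbe can use any of the following three methods.

| Method | Description |
| --- | --- |
| ExecAction | Runs a command inside the container. The container is healthy if the command exits with code 0. |
| TCPSocketAction | Performs a TCP check against the container's IP address and port. The container is healthy if a TCP connection can be established. |
| HTTPGetAction | Sends an HTTP GET to the container's IP address, port and path. The container is healthy if the response status code is at least 200 and below 400. |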
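A single probe uses exactly one of these handlers. The sketch below shows each form as it would appear under `livenessProbe:` or `readinessProbe:`. It is written as three separate YAML documents only so the alternatives can sit side by side, and the command, port and path values are placeholders.

```yaml
# ExecAction: healthy if the command exits with status 0
exec:
  command: ["cat", "/tmp/healthy"]
---
# TCPSocketAction: healthy if a TCP connection to the port can be opened
tcpSocket:
  port: 80
---
# HTTPGetAction: healthy if the response status is >= 200 and < 400
httpGet:
  path: /index.html
  port: 80
  scheme: HTTP
```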

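Every probe, whatever its method, can be tuned with parameters such as initialDelaySeconds and timeoutSeconds. All of them are optional and have defaults:

| Parameter | Description |
| --- | --- |
| initialDelaySeconds | Time to wait after the container starts before the first probe, in seconds. Default 0. |
| timeoutSeconds | Time to wait for a response after the probe is sent, in seconds. Default 1, minimum 1. A timeout counts as a failed probe. The kubelet does not restart the container on a single timeout: a liveness probe restarts it only after failureThreshold consecutive failures, and a readiness probe never restarts it. |
| periodSeconds | How often the probe runs, in seconds. Default 10, minimum 1. |
| successThreshold | Minimum number of consecutive successes, after a failure, for the probe to count as successful again. Default 1, minimum 1; must be 1 for liveness probes. |
| failureThreshold | Number of consecutive failures after which Kubernetes gives up once the Pod has started. Giving up on a liveness probe means restarting the container; giving up on a readiness probe means marking the Pod as not ready. Default 3, minimum 1. |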
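The fragment below sets all five parameters on one liveness probe and notes what each means. The HTTP path and the specific values are assumptions for illustration, and the restart timing in the last comment is an approximation.

```yaml
livenessProbe:
  httpGet:
    path: /index.html        # assumed path
    port: 80
  initialDelaySeconds: 10    # first probe 10 s after the container starts (default 0)
  periodSeconds: 10          # probe every 10 s (default 10, minimum 1)
  timeoutSeconds: 2          # no answer within 2 s counts as a failure (default 1)
  successThreshold: 1        # must be 1 for liveness probes
  failureThreshold: 3        # 3 consecutive failures -> kubelet restarts the container
  # A container that stops responding is restarted roughly
  # failureThreshold * periodSeconds = 3 * 10 = 30 s later; the exact figure
  # depends on where in the probe cycle the failure begins.
```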

For some complex applications, the ReadinessProbe mechanism alone may not be enough to decide whether the service inside a container is available.

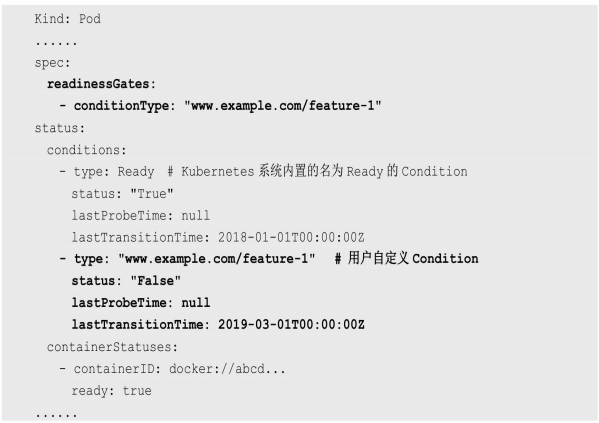

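For this reason, Kubernetes 1.11 introduced the Pod Ready++ feature to extend the readiness mechanism. It reached GA in 1.14 under the name Pod Readiness Gates.

With Pod Readiness Gates, users can attach custom readiness conditions to a Pod to help Kubernetes decide when the Pod is available (Ready). For a custom condition to take effect, the user must provide an external controller that sets the corresponding Condition status.

Pod readiness gates are configured in the `readinessGates` field of the Pod spec. For example, a Pod can declare a new readiness gate of type `www.example.com/feature-1`.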
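Below is a minimal sketch of such a Pod. It assumes an nginx container, and the pod name is hypothetical. The condition status is written by the external controller rather than by the user, so it appears only as a comment.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: readiness-gate-demo   # hypothetical name
spec:
  readinessGates:
  - conditionType: "www.example.com/feature-1"
  containers:
  - name: web
    image: nginx
# The external controller (not shown) is expected to patch the Pod status with
# a condition like the one below. Until its status is "True", the Pod's Ready
# condition stays "False" even if every container is ready.
#
# status:
#   conditions:
#   - type: "www.example.com/feature-1"
#     status: "True"
```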


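The status of each new custom condition is set by the user's external controller and defaults to False. Kubernetes marks the Pod as available (Ready = True) only when all of its containers are ready and all readinessGates conditions are True.

I don't fully understand this part yet and need to look into it later.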

Preparing the lab environment

Create a dedicated namespace and make it the default namespace of the current context:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$mkdir liveness-probe
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$cd liveness-probe/
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl create ns liveness-probe
namespace/liveness-probe created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl config current-context
kubernetes-admin@kubernetes
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl config set-context $(kubectl config current-context) --namespace=liveness-probe
Context "kubernetes-admin@kubernetes" modified.
```

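LivenessProbe

The LivenessProbe determines whether the container is alive (Running). If it finds the container unhealthy, the kubelet kills the container and handles it according to the container's restart policy.

ExecAction (command)

Runs a command inside the container. The container is healthy if the command exits with code 0.

Manifest: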

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$cat liveness-probe.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-liveness
  name: pod-liveness
spec:
  containers:
  - args:
    - /bin/sh
    - -c
    # keep the process alive after the file is removed, so that the restart
    # is triggered by the liveness probe rather than by the shell exiting
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5
    image: busybox
    imagePullPolicy: IfNotPresent
    name: pod-liveness
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

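Apply this Pod. Once it is running, the container creates the file, sleeps for 30 s, deletes the file, and then keeps sleeping. The liveness probe checks whether the file exists.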

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl apply -f liveness-probe.yaml
pod/pod-liveness created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME           READY   STATUS    RESTARTS     AGE
pod-liveness   1/1     Running   1 (8s ago)   41s
```

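After more than 30 s the file has been deleted, so the liveness check fails and the container is restarted according to the restart policy.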
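Here is a rough timeline for this manifest. It assumes the container process keeps running after the file is removed, and that failureThreshold keeps its default of 3 because the manifest does not set it. The file disappears about 30 s after start, and the kubelet gives up after three consecutive failed probes spaced periodSeconds = 5 s apart:

$$
t_{\text{kill}} \approx t_{\text{rm}} + \text{failureThreshold} \times \text{periodSeconds} = 30\,\text{s} + 3 \times 5\,\text{s} = 45\,\text{s}
$$

This is the point where the kubelet decides to kill the container. The restart itself can come later, by up to terminationGracePeriodSeconds (30 s by default), if the process does not exit on SIGTERM.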

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME           READY   STATUS    RESTARTS      AGE
pod-liveness   1/1     Running   2 (34s ago)   99s
```

After 99 s the container had already been restarted a second time.

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible 192.168.26.83 -m shell -a "docker ps | grep pod-liveness"
192.168.26.83 | CHANGED | rc=0 >>
00f4182c014e   7138284460ff                                        "/bin/sh -c 'touch /…"   6 seconds ago    Up 5 seconds    k8s_pod-liveness_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_0
01c5cfa02d8c   registry.aliyuncs.com/google_containers/pause:3.5   "/pause"                 7 seconds ago    Up 6 seconds    k8s_POD_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_0
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl get pods
NAME           READY   STATUS    RESTARTS   AGE
pod-liveness   1/1     Running   0          25s
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl get pods
NAME           READY   STATUS    RESTARTS      AGE
pod-liveness   1/1     Running   1 (12s ago)   44s
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible 192.168.26.83 -m shell -a "docker ps | grep pod-liveness"
192.168.26.83 | CHANGED | rc=0 >>
1eafd7e8a12a   7138284460ff                                        "/bin/sh -c 'touch /…"   15 seconds ago   Up 14 seconds   k8s_pod-liveness_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_1
01c5cfa02d8c   registry.aliyuncs.com/google_containers/pause:3.5   "/pause"                 47 seconds ago   Up 47 seconds   k8s_POD_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_0
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

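The container IDs in Docker on the node differ before and after (00f4182c014e, then 1eafd7e8a12a), and the container name suffix goes from _0 to _1. This confirms that the application container was killed and recreated. The Pod itself was not replaced: its pause container (01c5cfa02d8c) is unchanged.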

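HTTPGetAction

Sends an HTTP GET to the container's IP address, port and path. The container is healthy if the response status code is at least 200 and below 400.

Create the manifest with the following parameters: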

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$cat liveness-probe-http.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-livenss-probe
  name: pod-livenss-probe
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod-livenss-probe
    livenessProbe:
      failureThreshold: 3
      httpGet:
        path: /index.html
        port: 80
        scheme: HTTP
      initialDelaySeconds: 10
      periodSeconds: 10
      successThreshold: 1
      timeoutSeconds: 10
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

Apply the Pod. This probe requests nginx's default welcome page.

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$vim liveness-probe-http.yaml
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl apply -f liveness-probe-http.yaml
pod/pod-livenss-probe created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME                READY   STATUS    RESTARTS   AGE
pod-livenss-probe   1/1     Running   0          15s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it pod-livenss-probe -- rm /usr/share/nginx/html/index.html
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME                READY   STATUS    RESTARTS     AGE
pod-livenss-probe   1/1     Running   1 (1s ago)   2m31s
```

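Once the welcome page is deleted, the request for /index.html returns an error status (404, outside the 200 to 399 range), so the probe fails and the container is restarted. The restarted container starts from the image again, so index.html is back and the probe passes.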

TCPSocketAction

Performs a TCP check against the container's IP address and port. The container is healthy if a TCP connection can be established.

Manifest:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$cat liveness-probe-tcp.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-livenss-probe
  name: pod-livenss-probe
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod-livenss-probe
    livenessProbe:
      failureThreshold: 3
      tcpSocket:
        port: 8080
      initialDelaySeconds: 10
      periodSeconds: 10
      successThreshold: 1
      timeoutSeconds: 10
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

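The probe targets port 8080, but nginx only listens on port 80, so no TCP connection can be established (the connection attempt is typically refused). The probe fails, and after failureThreshold consecutive failures the container is restarted.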
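For contrast, here is a sketch of the same probe pointed at the port nginx actually listens on (80). With this change the TCP connection should succeed and the container should not be restarted. Only the probe block is shown, and the rest of liveness-probe-tcp.yaml is assumed unchanged.

```yaml
livenessProbe:
  failureThreshold: 3
  tcpSocket:
    port: 80              # nginx listens on 80, so the TCP handshake succeeds
  initialDelaySeconds: 10
  periodSeconds: 10
  successThreshold: 1
  timeoutSeconds: 10
```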

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl apply -f liveness-probe-tcp.yaml
pod/pod-livenss-probe created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME                READY   STATUS    RESTARTS   AGE
pod-livenss-probe   1/1     Running   0          8s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME                READY   STATUS    RESTARTS     AGE
pod-livenss-probe   1/1     Running   1 (4s ago)   44s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$
```

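ReadinessProbe

The ReadinessProbe determines whether the container's service is available (Ready). Only Pods that are Ready receive requests; otherwise they cannot be reached through a Service.

ExecAction (command)

Manifest. A postStart lifecycle hook creates the file that the probe checks: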

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$cat readiness-probe.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-liveness
  name: pod-liveness
spec:
  containers:
  - readinessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5
    image: nginx
    imagePullPolicy: IfNotPresent
    name: pod-liveness
    resources: {}
    lifecycle:
      postStart:
        exec:
          command: ["/bin/sh", "-c", "touch /tmp/healthy"]
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

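Create three nginx Pods for testing and then put a Service in front of them. The first, pod-liveness, comes straight from readiness-probe.yaml, and the other two are renamed copies produced with sed.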

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$sed 's/pod-liveness/pod-liveness-1/' readiness-probe.yaml | kubectl apply -f -
pod/pod-liveness-1 created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$sed 's/pod-liveness/pod-liveness-2/' readiness-probe.yaml | kubectl apply -f -
pod/pod-liveness-2 created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods -o wide
NAME             READY   STATUS    RESTARTS   AGE    IP             NODE                         NOMINATED NODE   READINESS GATES
pod-liveness     1/1     Running   0          3m1s   10.244.70.50   vms83.liruilongs.github.io   <none>           <none>
pod-liveness-1   1/1     Running   0          2m     10.244.70.51   vms83.liruilongs.github.io   <none>           <none>
pod-liveness-2   1/1     Running   0          111s   10.244.70.52   vms83.liruilongs.github.io   <none>           <none>
```

Change each Pod's home page to its own name, so every response shows which Pod served it:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$serve=pod-liveness
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it $serve -- sh -c "cat /usr/share/nginx/html/index.html"
pod-liveness
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$serve=pod-liveness-1
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$serve=pod-liveness-2
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$
```

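Change the labels. The sed copies also renamed the `run` label, so edit pod-liveness-1 and pod-liveness-2 back to `run=pod-liveness`. That way all three Pods match the Service selector.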

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods --show-labels
NAME             READY   STATUS    RESTARTS   AGE   LABELS
pod-liveness     1/1     Running   0          15m   run=pod-liveness
pod-liveness-1   1/1     Running   0          14m   run=pod-liveness-1
pod-liveness-2   1/1     Running   0          14m   run=pod-liveness-2
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl edit pods pod-liveness-1
pod/pod-liveness-1 edited
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl edit pods pod-liveness-2
pod/pod-liveness-2 edited
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods --show-labels
NAME             READY   STATUS    RESTARTS   AGE   LABELS
pod-liveness     1/1     Running   0          17m   run=pod-liveness
pod-liveness-1   1/1     Running   0          16m   run=pod-liveness
pod-liveness-2   1/1     Running   0          16m   run=pod-liveness
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$
```

Check that the file the readiness probe looks for exists. It will be deleted later for the test:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it pod-liveness -- ls /tmp/
healthy
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it pod-liveness-1 -- ls /tmp/
healthy
```

Create a Service from the Pod:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl expose --name=svc pod pod-liveness --port=80
service/svc exposed
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get ep
NAME   ENDPOINTS                                         AGE
svc    10.244.70.50:80,10.244.70.51:80,10.244.70.52:80   16s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get svc
NAME   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
svc    ClusterIP   10.104.246.121   <none>        80/TCP    36s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods -o wide
NAME             READY   STATUS    RESTARTS   AGE   IP             NODE                         NOMINATED NODE   READINESS GATES
pod-liveness     1/1     Running   0          24m   10.244.70.50   vms83.liruilongs.github.io   <none>           <none>
pod-liveness-1   1/1     Running   0          23m   10.244.70.51   vms83.liruilongs.github.io   <none>           <none>
pod-liveness-2   1/1     Running   0          23m   10.244.70.52   vms83.liruilongs.github.io   <none>           <none>
```
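`kubectl expose pod pod-liveness` builds the Service selector from that Pod's labels. This is why the other two Pods needed `run=pod-liveness` before all three showed up as endpoints. A roughly equivalent manifest is sketched below; the object kubectl actually creates may carry extra defaulted fields.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: svc
spec:
  type: ClusterIP
  selector:
    run: pod-liveness     # copied from the labels of pod-liveness
  ports:
  - port: 80              # Service port
    targetPort: 80        # container port on each selected Pod
    protocol: TCP
```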

The Service works, and requests are balanced across all three Pods:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$while true ; do curl 10.104.246.121 ; sleep 1
> done
pod-liveness
pod-liveness-2
pod-liveness
pod-liveness-1
pod-liveness-2
^C
```

Delete the file and test again:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl exec -it pod-liveness -- rm -rf /tmp/
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl exec -it pod-liveness -- ls /tmp/
ls: cannot access '/tmp/': No such file or directory
command terminated with exit code 2
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$while true ; do curl 10.104.246.121 ; sleep 1; done
pod-liveness-2
pod-liveness-2
pod-liveness-2
pod-liveness-1
pod-liveness-2
pod-liveness-2
pod-liveness-1
^C
```

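pod-liveness no longer serves requests. Its readiness probe fails, so the Pod is marked not Ready and removed from the Service's endpoints. Unlike a liveness failure, this does not restart the container; `kubectl get pods` should show it as READY 0/1 while it keeps running.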

Health checks in kubeadm

kubeadm's kube-apiserver.yaml uses both probes at the same time:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep -A 8 readi
    readinessProbe:
      failureThreshold: 3
      httpGet:
        host: 192.168.26.81
        path: /readyz
        port: 6443
        scheme: HTTPS
      periodSeconds: 1
      timeoutSeconds: 15
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep -A 9 liveness
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 192.168.26.81
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```
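Multiplying failureThreshold by periodSeconds for these kubeadm values gives a rough idea of how fast each probe reacts. This assumes each failed probe returns quickly; a probe that hangs can take up to timeoutSeconds = 15 s, which stretches these figures:

$$
\begin{aligned}
\text{readiness: } & \text{failureThreshold} \times \text{periodSeconds} = 3 \times 1\,\text{s} \approx 3\,\text{s} \\
\text{liveness: } & \text{failureThreshold} \times \text{periodSeconds} = 8 \times 10\,\text{s} \approx 80\,\text{s}
\end{aligned}
$$

So an unhealthy API server is marked not ready within seconds but restarted only after more than a minute of consecutive failures. This presumably avoids killing an API server that is merely slow.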