So often we let go, thinking it was only a relationship; only at the very end do we realize it was a whole lifetime. (匪我思存, 《佳期如梦》)

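Preface

I am learning K8s and writing this down to consolidate what I have learned.

This post covers:

The K8s web panel tools dashboard and Kuboard.

Parts of the dashboard and Kuboard deployment demo use Ansible, but only the shell and copy modules.

Some of the content is based on other references.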


1. Environment preparation

Both dashboard and Kuboard need Metrics Server, the K8s core metrics monitoring tool, in order to show resource usage on their pages, so we install metrics-server first.

Cluster version

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get nodes
    NAME                         STATUS   ROLES                  AGE   VERSION
    vms81.liruilongs.github.io   Ready    control-plane,master   68d   v1.22.2
    vms82.liruilongs.github.io   Ready    <none>                 68d   v1.22.2
    vms83.liruilongs.github.io   Ready    <none>                 68d   v1.22.2
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Installing metrics-server

Download the related image and resource files:

    curl -Ls https://api.github.com/repos/kubernetes-sigs/metrics-server/tarball/v0.3.6 -o metrics-server-v0.3.6.tar.gz

    docker pull mirrorgooglecontainers/metrics-server-amd64:v0.3.6

Pick either of the two ways to get the image. Here the image has already been downloaded, so it is imported directly, using Ansible to run the command on all machines.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible all -m copy -a "src=./metrics-img.tar dest=/root/metrics-img.tar"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible all -m shell -a "docker load -i /root/metrics-img.tar"
    192.168.26.83 | CHANGED | rc=0 >>
    Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6
    192.168.26.81 | CHANGED | rc=0 >>
    Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6
    192.168.26.82 | CHANGED | rc=0 >>
    Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$
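The post does not show how metrics-img.tar was produced. One plausible way, sketched here as an assumption rather than the author's actual steps, is to retag the pulled mirror image with the k8s.gcr.io name reported by the load output and then write it out with docker save. The function name `build_metrics_tar` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (assumption): build metrics-img.tar from the mirror image pulled earlier.
# The target name matches the "Loaded image" lines in the Ansible output.
SRC_IMAGE="mirrorgooglecontainers/metrics-server-amd64:v0.3.6"
DST_IMAGE="k8s.gcr.io/metrics-server-amd64:v0.3.6"

build_metrics_tar() {
  # Give the image the name that the deployment manifest references.
  docker tag "$SRC_IMAGE" "$DST_IMAGE" || return 1
  # Write the retagged image to a tarball that Ansible can copy to every node.
  docker save -o "${1:-metrics-img.tar}" "$DST_IMAGE"
}

# Usage: build_metrics_tar            # writes ./metrics-img.tar
```

Retagging before saving means every node ends up with the image under the exact name the manifest asks for, so no registry access is needed at deploy time.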

Modify metrics-server-deployment.yaml and create the resources

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$mv kubernetes-sigs-metrics-server-d1f4f6f/ metrics
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$cd metrics/
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics]
    └─$ls
    cmd                 deploy      hack      OWNERS          README.md          version
    code-of-conduct.md  Gopkg.lock  LICENSE   OWNERS_ALIASES  SECURITY_CONTACTS
    CONTRIBUTING.md     Gopkg.toml  Makefile  pkg             vendor
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics]
    └─$cd deploy/1.8+/
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$ls
    aggregated-metrics-reader.yaml  metrics-apiservice.yaml         resource-reader.yaml
    auth-delegator.yaml             metrics-server-deployment.yaml
    auth-reader.yaml                metrics-server-service.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$vim metrics-server-deployment.yaml

Edit the resource file: set the image, change how it is pulled (imagePullPolicy), and add the startup command

          - name: metrics-server
            image: k8s.gcr.io/metrics-server-amd64:v0.3.6

            imagePullPolicy: IfNotPresent
            command:
            - /metrics-server
            - --metric-resolution=30s
            - --kubelet-insecure-tls
            - --kubelet-preferred-address-types=InternalIP
            volumeMounts:

Deploy metrics-server

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$kubectl apply -f .

Confirm that it was installed successfully in the kube-system namespace

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$kubectl get pods -n kube-system
    NAME                                                 READY   STATUS    RESTARTS   AGE
    calico-kube-controllers-78d6f96c7b-79xx4             1/1     Running   2          3h15m
    calico-node-ntm7v                                    1/1     Running   1          12h
    calico-node-skzjp                                    1/1     Running   4          12h
    calico-node-v7pj5                                    1/1     Running   1          12h
    coredns-545d6fc579-9h2z4                             1/1     Running   2          3h15m
    coredns-545d6fc579-xgn8x                             1/1     Running   2          3h16m
    etcd-vms81.liruilongs.github.io                      1/1     Running   1          13h
    kube-apiserver-vms81.liruilongs.github.io            1/1     Running   2          13h
    kube-controller-manager-vms81.liruilongs.github.io   1/1     Running   4          13h
    kube-proxy-rbhgf                                     1/1     Running   1          13h
    kube-proxy-vm2sf                                     1/1     Running   1          13h
    kube-proxy-zzbh9                                     1/1     Running   1          13h
    kube-scheduler-vms81.liruilongs.github.io            1/1     Running   5          13h
    metrics-server-bcfb98c76-gttkh                       1/1     Running   0          70m

A quick test

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$kubectl top nodes
    W1007 14:23:06.102605  102831 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
    NAME                         CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    vms81.liruilongs.github.io   555m         27%    2025Mi          52%
    vms82.liruilongs.github.io   204m         10%    595Mi           15%
    vms83.liruilongs.github.io   214m         10%    553Mi           14%
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$
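Besides `kubectl top`, the registration of the aggregated metrics API can be checked directly. The following is a minimal sketch, assuming the APIService created by metrics-apiservice.yaml is named `v1beta1.metrics.k8s.io` (the name used by the upstream v0.3.6 manifests); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Succeeds when the metrics.k8s.io APIService reports the condition Available=True.
metrics_api_available() {
  local status
  # jsonpath filter: pick the "Available" condition and print its status field.
  status=$(kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}')
  [ "$status" = "True" ]
}

# Usage:
#   metrics_api_available && echo "metrics API is ready"
```

If this check fails while the pod is Running, the metrics-server logs are usually the next place to look.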

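2. Installing dashboard

Brief introduction

kubernetes-dashboard is the Web UI management tool for Kubernetes. It provides common cluster-management functions such as deploying applications, managing resource objects, querying container logs, and system monitoring. To show resource usage on its pages, it needs Metrics Server, the K8s core metrics monitoring tool, to be deployed.

GitHub home page: https://github.com/kubernetes/dashboard

Installation steps

Resource file (may require a proxy to reach from some networks): https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.0/aio/deploy/recommended.yaml

If you can reach that URL, use it. If not, use my copy; the yaml file is long, so I put it at the end of the post. Note that my copy references v2.0.0-beta8 images from a mirror registry rather than the v2.5.0 ones.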

Preparation: pull the required images on the worker nodes. Some of the images could not be pulled from the default registry, so the manifest points at an accessible mirror registry instead.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$cat recommended.yaml | grep -i image
              image: registry.cn-hangzhou.aliyuncs.com/kube-iamges/dashboard:v2.0.0-beta8
              imagePullPolicy: IfNotPresent
              image: registry.cn-hangzhou.aliyuncs.com/kube-iamges/metrics-scraper:v1.0.1
              imagePullPolicy: IfNotPresent
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible node -m shell -a "docker pull registry.cn-hangzhou.aliyuncs.com/kube-iamges/dashboard:v2.0.0-beta8"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible node -m shell -a "docker pull registry.cn-hangzhou.aliyuncs.com/kube-iamges/metrics-scraper:v1.0.1"
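The grep-then-pull step above can be scripted so that it keeps working when the manifest changes. This is a minimal sketch, not the author's method: `list_images` is a hypothetical helper, and it assumes each `image:` key sits on its own line, as it does in recommended.yaml.

```shell
#!/usr/bin/env bash
# Print the unique image references found in a Kubernetes manifest.
# Only lines whose first field is exactly "image:" are matched,
# so "imagePullPolicy:" lines are ignored.
list_images() {
  awk '$1 == "image:" { print $2 }' "$1" | sort -u
}

# Usage on the control node ("node" is the Ansible group used above):
#   for img in $(list_images recommended.yaml); do
#     ansible node -m shell -a "docker pull $img"
#   done
```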

Install dashboard

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl apply -f recommended.yaml
    namespace/kubernetes-dashboard created
    serviceaccount/kubernetes-dashboard created
    service/kubernetes-dashboard created
    secret/kubernetes-dashboard-certs created
    secret/kubernetes-dashboard-csrf created
    secret/kubernetes-dashboard-key-holder created
    configmap/kubernetes-dashboard-settings created
    role.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
    rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    deployment.apps/kubernetes-dashboard created
    service/dashboard-metrics-scraper created
    deployment.apps/dashboard-metrics-scraper created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

With the installation done, check that the related resources are ready.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get pods -n kubernetes-dashboard
    NAME                                         READY   STATUS    RESTARTS   AGE
    dashboard-metrics-scraper-669c88c9d9-c6jc7   1/1     Running   0          119s
    kubernetes-dashboard-5d66bcd8fd-87hlx        1/1     Running   0          2m

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get svc -n kubernetes-dashboard
    NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
    dashboard-metrics-scraper   ClusterIP   10.103.114.121   <none>        8000/TCP   2m11s
    kubernetes-dashboard        ClusterIP   10.98.100.249    <none>        443/TCP    2m12s

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get sa -n kubernetes-dashboard
    NAME                   SECRETS   AGE
    default                1         2m21s
    kubernetes-dashboard   1         2m21s

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get deploy -n kubernetes-dashboard
    NAME                        READY   UP-TO-DATE   AVAILABLE   AGE
    dashboard-metrics-scraper   1/1     1            1           2m50s
    kubernetes-dashboard        1/1     1            1           2m51s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Change the Service type to NodePort so that the dashboard can be reached from outside the cluster

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard
    service/kubernetes-dashboard edited
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$
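`kubectl edit` opens an interactive editor, which is awkward in scripts. A non-interactive alternative, shown here as a sketch rather than what the post actually ran, is `kubectl patch` with a merge patch that changes only `spec.type`. The variable and function names are hypothetical.

```shell
#!/usr/bin/env bash
# Merge patch that switches the Service type; all other fields stay untouched.
NODEPORT_PATCH='{"spec":{"type":"NodePort"}}'

expose_dashboard() {
  kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p "$NODEPORT_PATCH"
}

# Usage: expose_dashboard
```

Kubernetes assigns a free port from the NodePort range when none is specified, which matches the behaviour seen with the manual edit.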

Check that the change took effect

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get svc -n kubernetes-dashboard
    NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)         AGE
    dashboard-metrics-scraper   ClusterIP   10.103.114.121   <none>        8000/TCP        6m33s
    kubernetes-dashboard        NodePort    10.98.100.249    <none>        443:32329/TCP   6m34s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Here I switched the default namespace of the current context; this step is optional

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl config set-context $(kubectl config current-context) --namespace=kubernetes-dashboard
    Context "kubernetes-admin@kubernetes" modified.
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Create a ServiceAccount and bind it to a root-like K8s role (cluster-admin) so that it can access everything. For more details see: https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md

Resource file

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$cat dashboard-adminuser.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kubernetes-dashboard

    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kubernetes-dashboard

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl apply -f dashboard-adminuser.yaml
    serviceaccount/admin-user created
    clusterrolebinding.rbac.authorization.k8s.io/admin-user unchanged
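To confirm that the binding grants what is expected, the ServiceAccount can be impersonated with `kubectl auth can-i`. A minimal sketch follows; the helper name is hypothetical, and it assumes the caller is allowed to impersonate ServiceAccounts (the cluster admin kubeconfig used in this post is).

```shell
#!/usr/bin/env bash
# Succeeds when the given ServiceAccount may perform any verb on any resource.
# $1 = namespace, $2 = ServiceAccount name
sa_can_do_everything() {
  # "kubectl auth can-i" prints "yes" or "no" for the impersonated identity.
  [ "$(kubectl auth can-i '*' '*' --as="system:serviceaccount:$1:$2")" = "yes" ]
}

# Usage:
#   sa_can_do_everything kubernetes-dashboard admin-user && echo "cluster-admin is in effect"
```

Because cluster-admin is so broad, it is worth deleting this account again once the demo is over.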

Get the ServiceAccount's token and use it to log in to the deployed dashboard

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"
    eyJhbGciOiJSUzI1NiIsImtpZCI6ImF2MmJVZ3d6M21JRC1BZUwwaHlDdzZHSGNyaVJON1BkUHF6MlhPV2NfX00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXF3bWdtIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI1MWE0ZTU5Ni00OThiLTRhOGMtOTBjOC00YTExZGYxZDk3NzYiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.epjeFx7jvYG6v0zf0RuMjpY7RisrzBxrNdYdfszCwXS2_AauHM9a6dLUSx1oLUimiUdbCZvX0tElC99u8f5YQS4xGL-8gNSIUpe3JvWjgTlYB-6I5BqRxKrckqkHrs0juzw0K2d4HdDwUe79AyS7pJwqrD4LTQKzAfOmpWbwzHbPI4WKJ7FKyYGcW76HOdTYTdXVb_Rr0ucdOIRQdEwbFceT9atiImqQhb1Kv9ByoFDxSx2YP6PXPo8zGMUwmXXtlimzv0IdghcPOrwe6gk96LoD3pV-Q2kGL3OPhnxVusfOJh-bdRznSGorvtXc_IGJh8gwhF1zluRmQ4tECCu1sw
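The token is a JWT, so its middle part can be decoded to see which ServiceAccount and namespace it belongs to before pasting it into a login form. Below is a minimal sketch, assuming GNU coreutils `base64`; `jwt_payload` is a hypothetical helper. It only decodes the payload and does not verify the signature.

```shell
#!/usr/bin/env bash
# Decode the payload (second dot-separated part) of a JWT and print it as JSON text.
jwt_payload() {
  local part pad
  # JWTs use base64url: map '_' to '/' and '-' to '+' to get standard base64.
  part=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing '=' padding; restore it so base64 -d accepts the input.
  pad=$(( (4 - ${#part} % 4) % 4 ))
  while [ "$pad" -gt 0 ]; do
    part="${part}="
    pad=$((pad - 1))
  done
  printf '%s' "$part" | base64 -d
}

# Usage (TOKEN holds the string printed by the kubectl command):
#   jwt_payload "$TOKEN"
```

On clusters where ServiceAccount token Secrets are no longer created automatically (Kubernetes 1.24 and later), `kubectl -n kubernetes-dashboard create token admin-user` issues a short-lived token instead; the cluster in this post runs v1.22.2, so the Secret-based lookup works.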

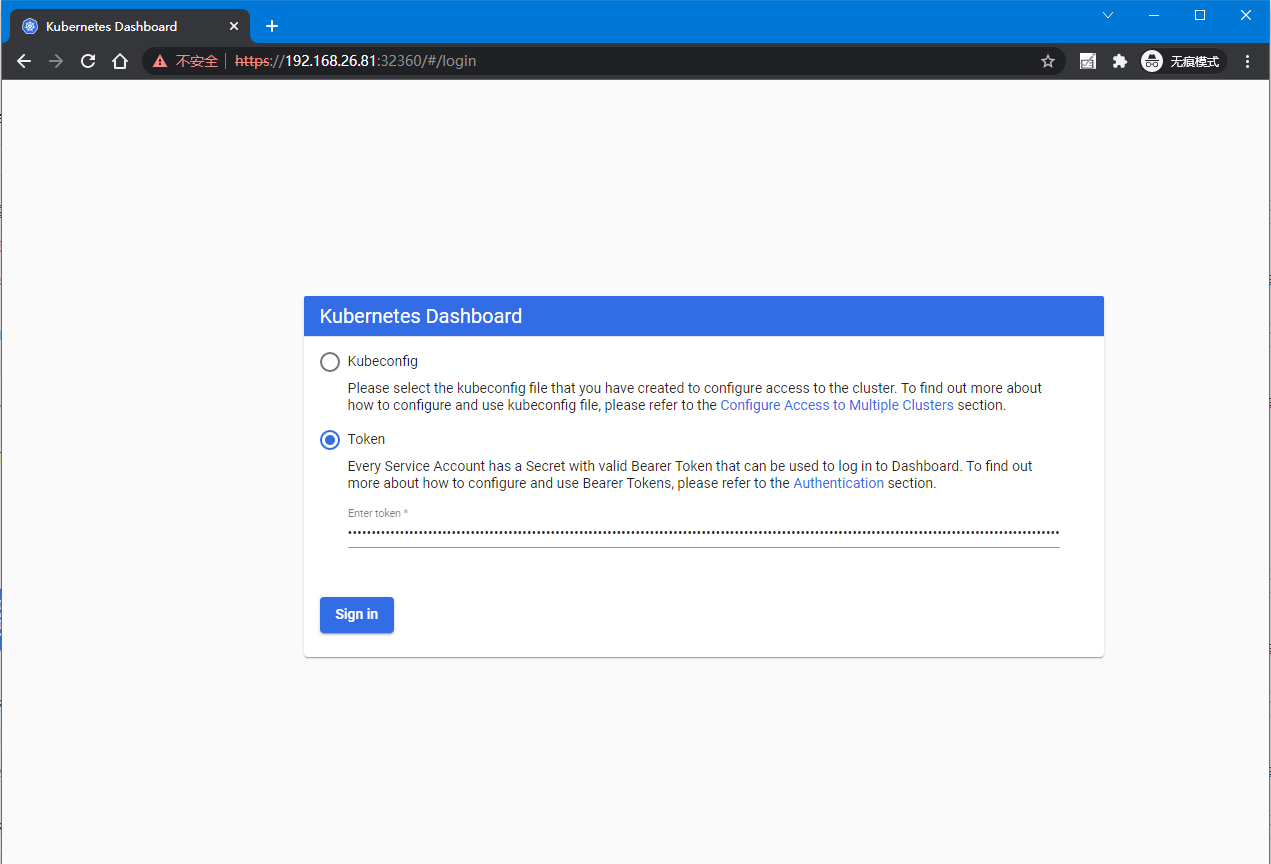

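Access test

Access steps

Open https://<node IP>:32329 in a browser (32329 is the NodePort shown in the Service listing above).

Newer versions of Chrome show an error page here, because the dashboard serves a self-signed certificate. The workaround is to type thisisunsafe directly on the keyboard while that page is focused.

The first visit shows the following page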

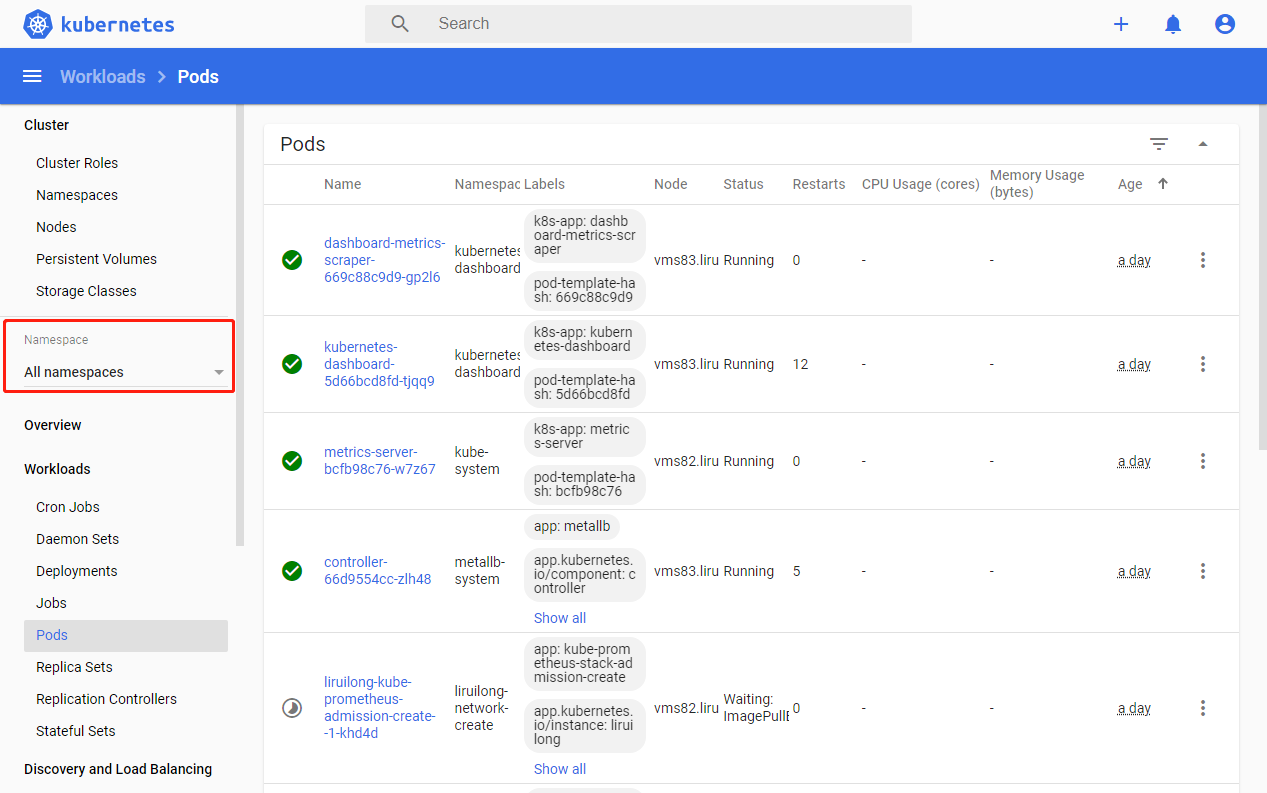

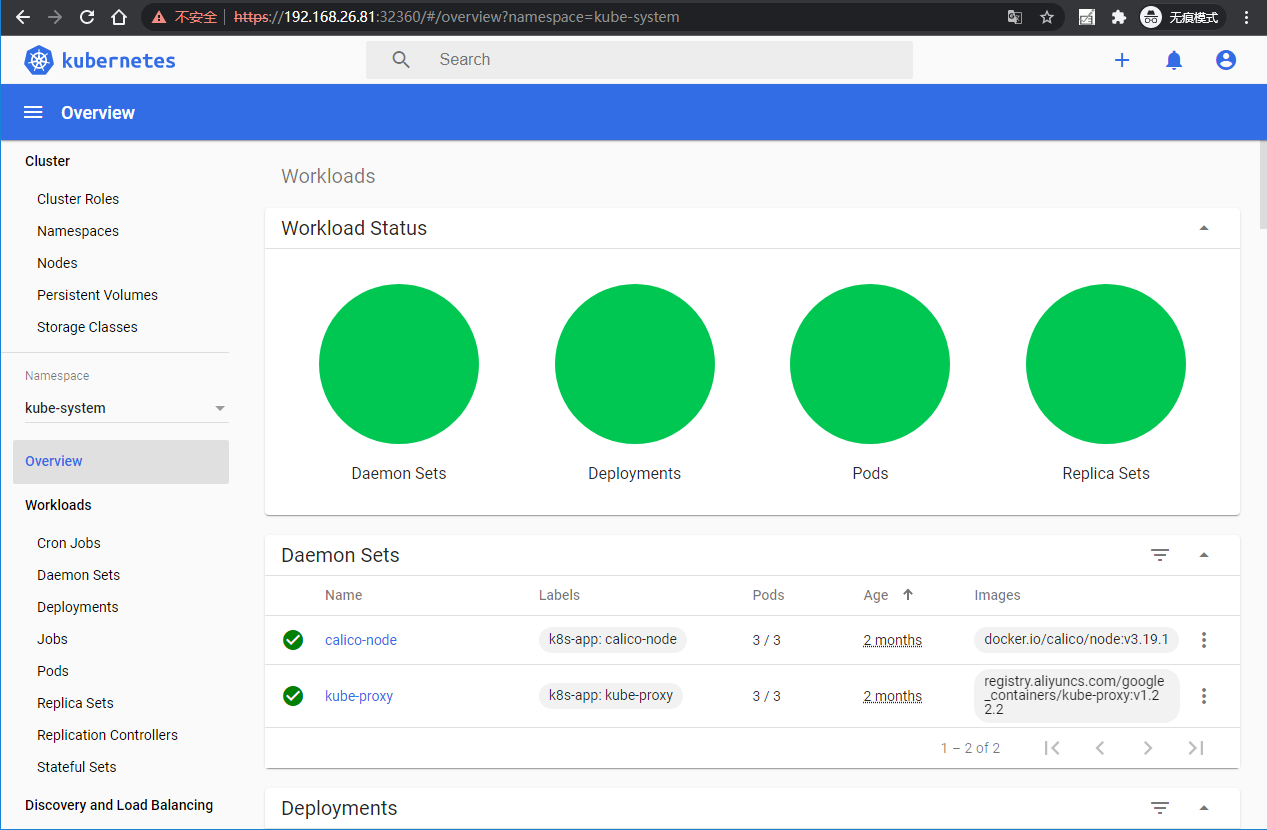

Select all namespaces to view the related information

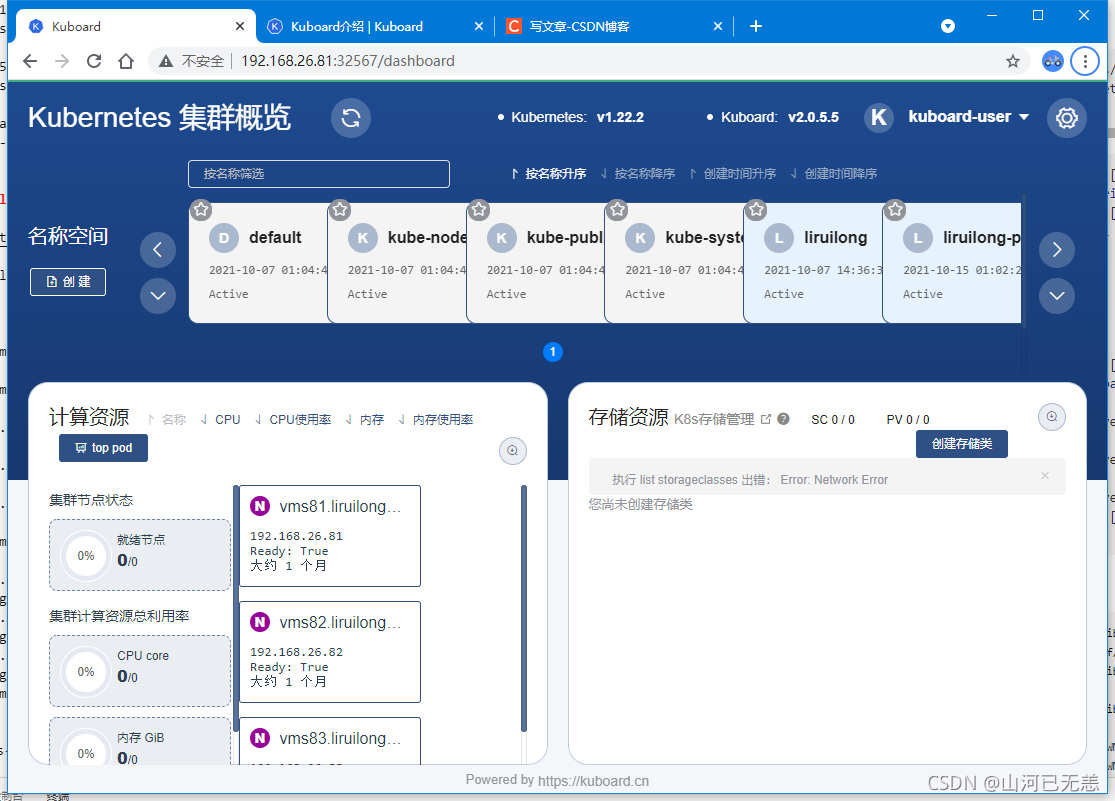

3. Kuboard

Brief introduction

Kuboard is a free graphical management tool for Kubernetes. It aims to help users quickly get microservices running on Kubernetes.

Official site: http://press.demo.kuboard.cn/overview/share-coder.html

Installation steps

Download the resource yml file

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$wget https://kuboard.cn/install-script/kuboard.yaml
    --2021-11-12 20:00:01--  https://kuboard.cn/install-script/kuboard.yaml
    Resolving kuboard.cn (kuboard.cn)... 122.112.240.69, 119.3.92.138
    Connecting to kuboard.cn (kuboard.cn)|122.112.240.69|:443... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 2318 (2.3K) [application/octet-stream]
    Saving to: ‘kuboard.yaml’

    100%[============================================================>] 2,318       --.-K/s   in 0s

    2021-11-12 20:00:04 (58.5 MB/s) - ‘kuboard.yaml’ saved [2318/2318]

Pre-pull the image on all nodes: docker pull eipwork/kuboard:latest

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible all -m shell -a "docker pull eipwork/kuboard:latest"

Modify kuboard.yaml and change the image pull policy to IfNotPresent

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$cat kuboard.yaml | grep imagePullPolicy
            imagePullPolicy: Always
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$cat kuboard.yaml | grep Always
            imagePullPolicy: Always
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$sed -i s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$cat kuboard.yaml | grep imagePullPolicy
            imagePullPolicy: IfNotPresent
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$
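The sed command is cut off in the transcript above, so its exact form is unknown. A substitution along the following lines would produce the before and after grep results shown; this is an assumption, not the command the author ran, and it relies on GNU sed's `-i` (in-place) option. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Rewrite "imagePullPolicy: Always" to "imagePullPolicy: IfNotPresent" in place.
# $1 = path to the manifest (for example kuboard.yaml)
set_pull_policy_if_not_present() {
  sed -i 's/imagePullPolicy: Always/imagePullPolicy: IfNotPresent/' "$1"
}

# Usage: set_pull_policy_if_not_present kuboard.yaml
```

Matching the full `imagePullPolicy: Always` string, rather than just `Always`, avoids touching any other occurrence of that word in the file.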

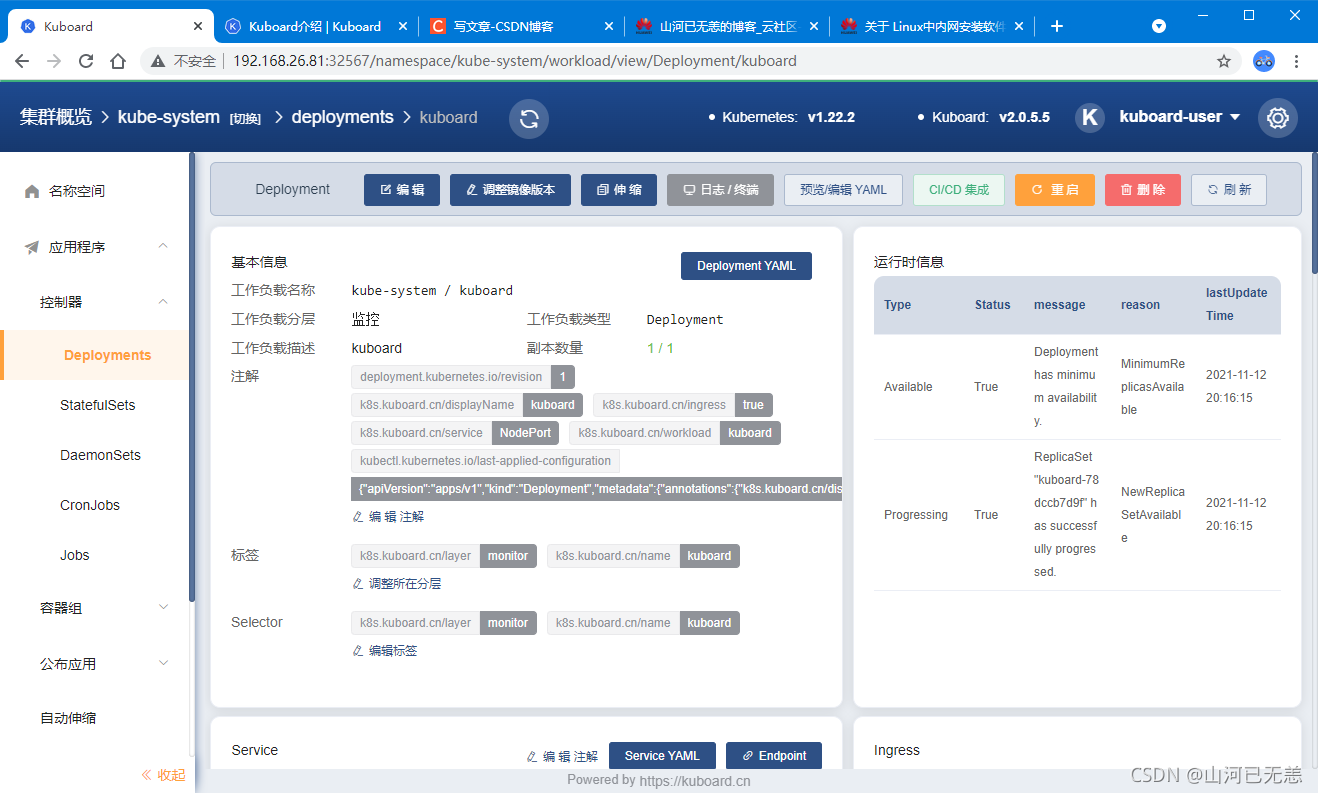

Create the resource objects: kubectl apply -f kuboard.yaml

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl apply -f kuboard.yaml
    deployment.apps/kuboard created
    service/kuboard created
    serviceaccount/kuboard-user created
    clusterrolebinding.rbac.authorization.k8s.io/kuboard-user created
    serviceaccount/kuboard-viewer created
    clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Make sure kuboard is running: kubectl get pods -n kube-system

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get pods -n kube-system
    NAME                                                 READY   STATUS             RESTARTS          AGE
    calico-kube-controllers-78d6f96c7b-csdd6             1/1     Running            240 (4m56s ago)   17d
    calico-node-ntm7v                                    1/1     Running            145 (8m22s ago)   36d
    calico-node-skzjp                                    0/1     CrashLoopBackOff   753 (4m30s ago)   36d
    calico-node-v7pj5                                    1/1     Running            169 (4m59s ago)   36d
    coredns-7f6cbbb7b8-2msxl                             1/1     Running            4                 17d
    coredns-7f6cbbb7b8-ktm2d                             1/1     Running            5 (20h ago)       17d
    etcd-vms81.liruilongs.github.io                      1/1     Running            7 (7d11h ago)     24d
    kube-apiserver-vms81.liruilongs.github.io            1/1     Running            15 (20h ago)      24d
    kube-controller-manager-vms81.liruilongs.github.io   1/1     Running            56 (108m ago)     24d
    kube-proxy-nzm24                                     1/1     Running            3 (11h ago)       23d
    kube-proxy-p2zln                                     1/1     Running            3 (14d ago)       24d
    kube-proxy-pqhqn                                     1/1     Running            7 (7d11h ago)     24d
    kube-scheduler-vms81.liruilongs.github.io            1/1     Running            60 (108m ago)     24d
    kuboard-78dccb7d9f-rsnrp                             1/1     Running            0                 49s
    metrics-server-bcfb98c76-76pg5                       1/1     Running            0                 20h
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl config set

Get the token: echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)
    eyJhbGciOiJSUzI1NiIsImtpZCI6IkZ1NHI1RkhSemVhN2s1OWthS1ZEQ0dueDRmS2RkMDdyR0FZYklkaWFnbmsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJvYXJkLXVzZXItdG9rZW4tYmY4bjgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoia3Vib2FyZC11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMzQ4YWYyNTQtZDI5NS00Yjc4LTg3ZWItNmE0ZDFkMjFkZmU4Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmt1Ym9hcmQtdXNlciJ9.Nzjerrlpw6XcBRkqXPQzDlSmMZrDf89yuVjXkL7vV1nhgWXX0iqZsqF8DPiy7Sjj-2JFYPD_zojgqV0sgOlKV_7Ou6p3F7K6lhu4VI9CGkM8OJxFdPIh-ETKVnIlb7l9s1jN4hvhBWck8geOIx4pnOawUU3jbOH7TQKz43bTnvUx_FACvnxG9gVU6KyQm6GVzs28SDs1YrqpMFWZgnJ_vCAe-KfUrqYChLecIHXM-vuB4JODxrwB4n3z2GtsJdigTIpd_FjeDs9Bl7v3CoWrozMa73rxPZyO58fo8D1bi1XTbJNeRjTjYnQc0-GvSoupQaNAfYloD1pwimmcFnIKxQ
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

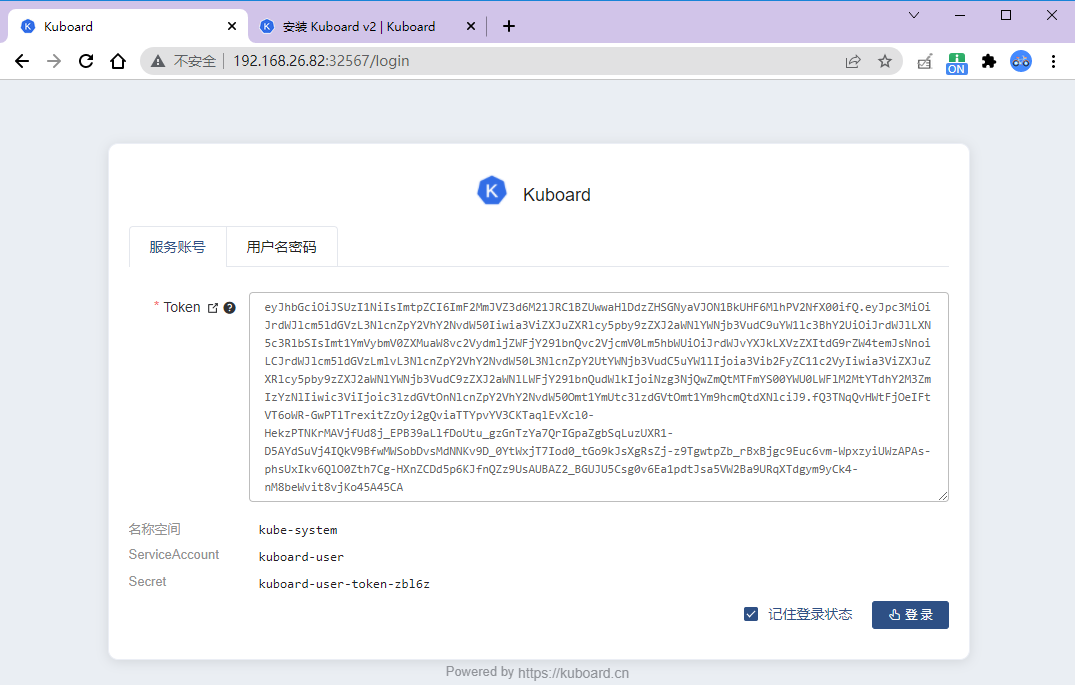

Access test

Log in at http://192.168.26.81:32567

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get svc -A | grep kuboard
    kube-system   kuboard   NodePort   10.96.142.159   <none>   80:32567/TCP   51s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Log in with the token obtained from the command above.
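The NodePort differs from cluster to cluster, so the login URL can be derived instead of read off by eye. The sketch below assumes the Service is named `kuboard` in `kube-system`, as in the output above, and that its first port is the web port; `kuboard_url` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print the Kuboard URL for a given node IP by reading the Service's NodePort.
# $1 = IP address of any cluster node
kuboard_url() {
  local node_ip="$1" port
  # jsonpath pulls only the nodePort of the first port entry.
  port=$(kubectl -n kube-system get svc kuboard -o jsonpath='{.spec.ports[0].nodePort}')
  printf 'http://%s:%s\n' "$node_ip" "$port"
}

# Usage: kuboard_url 192.168.26.81
```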

The recommended.yaml resource file

    apiVersion: v1
    kind: Namespace
    metadata:
      name: kubernetes-dashboard

    ---

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard

    ---

    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      ports:
        - port: 443
          targetPort: 8443
      selector:
        k8s-app: kubernetes-dashboard

    ---

    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-certs
      namespace: kubernetes-dashboard
    type: Opaque

    ---

    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-csrf
      namespace: kubernetes-dashboard
    type: Opaque
    data:
      csrf: ""

    ---

    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-key-holder
      namespace: kubernetes-dashboard
    type: Opaque

    ---

    kind: ConfigMap
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-settings
      namespace: kubernetes-dashboard

    ---

    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    rules:
      - apiGroups: [""]
        resources: ["secrets"]
        resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
        verbs: ["get", "update", "delete"]
      - apiGroups: [""]
        resources: ["configmaps"]
        resourceNames: ["kubernetes-dashboard-settings"]
        verbs: ["get", "update"]
      - apiGroups: [""]
        resources: ["services"]
        resourceNames: ["heapster", "dashboard-metrics-scraper"]
        verbs: ["proxy"]
      - apiGroups: [""]
        resources: ["services/proxy"]
        resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
        verbs: ["get"]

    ---

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
    rules:
      - apiGroups: ["metrics.k8s.io"]
        resources: ["pods", "nodes"]
        verbs: ["get", "list", "watch"]

    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kubernetes-dashboard
    subjects:
      - kind: ServiceAccount
        name: kubernetes-dashboard
        namespace: kubernetes-dashboard

    ---

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kubernetes-dashboard
    subjects:
      - kind: ServiceAccount
        name: kubernetes-dashboard
        namespace: kubernetes-dashboard

    ---

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          k8s-app: kubernetes-dashboard
      template:
        metadata:
          labels:
            k8s-app: kubernetes-dashboard
        spec:
          containers:
            - name: kubernetes-dashboard
              image: registry.cn-hangzhou.aliyuncs.com/kube-iamges/dashboard:v2.0.0-beta8
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 8443
                  protocol: TCP
              args:
                - --auto-generate-certificates
                - --namespace=kubernetes-dashboard
              volumeMounts:
                - name: kubernetes-dashboard-certs
                  mountPath: /certs
                - mountPath: /tmp
                  name: tmp-volume
              livenessProbe:
                httpGet:
                  scheme: HTTPS
                  path: /
                  port: 8443
                initialDelaySeconds: 30
                timeoutSeconds: 30
              securityContext:
                allowPrivilegeEscalation: false
                readOnlyRootFilesystem: true
                runAsUser: 1001
                runAsGroup: 2001
          volumes:
            - name: kubernetes-dashboard-certs
              secret:
                secretName: kubernetes-dashboard-certs
            - name: tmp-volume
              emptyDir: {}
          serviceAccountName: kubernetes-dashboard
          nodeSelector:
            "kubernetes.io/os": linux
          tolerations:
            - key: node-role.kubernetes.io/master
              effect: NoSchedule

    ---

    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      name: dashboard-metrics-scraper
      namespace: kubernetes-dashboard
    spec:
      ports:
        - port: 8000
          targetPort: 8000
      selector:
        k8s-app: dashboard-metrics-scraper

    ---

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      name: dashboard-metrics-scraper
      namespace: kubernetes-dashboard
    spec:
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          k8s-app: dashboard-metrics-scraper
      template:
        metadata:
          labels:
            k8s-app: dashboard-metrics-scraper
          annotations:
            seccompProfile: 'runtime/default'
        spec:
          containers:
            - name: dashboard-metrics-scraper
              image: registry.cn-hangzhou.aliyuncs.com/kube-iamges/metrics-scraper:v1.0.1
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 8000
                  protocol: TCP
              livenessProbe:
                httpGet:
                  scheme: HTTP
                  path: /
                  port: 8000
                initialDelaySeconds: 30
                timeoutSeconds: 30
              volumeMounts:
              - mountPath: /tmp
                name: tmp-volume
              securityContext:
                allowPrivilegeEscalation: false
                readOnlyRootFilesystem: true
                runAsUser: 1001
                runAsGroup: 2001
          serviceAccountName: kubernetes-dashboard
          nodeSelector:
            "kubernetes.io/os": linux
          tolerations:
            - key: node-role.kubernetes.io/master
              effect: NoSchedule
          volumes:
            - name: tmp-volume
              emptyDir: {}