真正的坚持归于平静,靠的是温和的发力,而不是时时刻刻的刺激。

写在前面 真正的坚持归于平静,靠的是温和的发力,而不是时时刻刻的刺激。

学习环境

1 2 3 4 5 6 7 8 ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl get nodes NAME STATUS ROLES AGE VERSION vms81.liruilongs.github.io Ready control-plane,master 134d v1.22.2 vms82.liruilongs.github.io Ready <none> 134d v1.22.2 vms83.liruilongs.github.io NotReady <none> 134d v1.22.2 ┌──[root@vms81.liruilongs.github.io]-[~] └─$

Service Account 是什么? 学习Service Account之前,我们简单介绍下K8s的安全体系,K8s中通过一系列机制来实现集群的安全控制,其中包括API Server的认证和授(鉴)权,关于认证和授(鉴)权,感兴趣小伙伴可以看看之前的博文,我们这里简单介绍下

关于授(鉴)权,现在用的比较多的是RBAC(Role-Based Access Control,基于角色的访问控制)的方式

RBAC在Kubernetes的1.5版本中引入,在1.6版本时升级为Beta版本,在1.8版本时升级为GA。现在作为kubeadm安装方式的默认选项,相对于其他访问控制方式,RBAC对集群中的资源和非资源权限均有完整的覆盖。整个RBAC完全由(Role,ClusterRole,RoleBinding,ClusterRoleBinding)API对象完成,同其他API对象一样,可以用kubectl或API进行操作。可以在运行时进行调整,无须重新启动 API Server。

K8s的授权策略设置通过通过API Server的启动参数”--authorization-mode“设置。 除了RBAC外,授权策略还包括:

策略

描述

ABAC

(Attribute-Based Access Control)基于属性的访问控制,表示使用用户配置的授权规则对用户请求进行匹配和控制。

Webhook

通过调用外部REST服务对用户进行授权。

Node

是一种专用模式,用于对kubelet发出的请求进行访问控制。

关于认证机制,在K8s的认证中,如果按照集群内外认证分的话,分为集群外认证和集群内认证:

集群外认证一般三种,也可以理解为通过kubectl或者编程语言编写的客户端API访问:

HTTP Token认证:通过一个Token来识别合法用户。HTTPS 证书认证:基于CA根证书签名的双向数字证书认证方式(Kubeconfig文件)HTTP Base认证:通过用户名+密码的方式认证(用户账户),这个只有1.19之前的版本适用,之后的版本不在支持

集群内的认证也就是我们今天要讲的:Service Account对象,也叫服务账户

所以说Service Account它并不是给Kubernetes集群的用户(系统管理员、运维人员)用的,而是给运行在K8s上的Pod里的进程用的,为Pod里的进程提供认证。

比如我们要编写一个类似kubectl一样的K8s的管理工具,比如一些面板工具(kubernetes-dashboard),而且这个工具是运行在我们的K8s环境里的,那么这个时候,我们如何给这个工具访问集群做认证授权,就要用到Service Account,简写为sa,所以我们一般直接叫sa

当我们创建任何一个Pod的时候,必须要有sa,否则创建失败,如果没有显示的指定对应的sa,即服务账户,Pod会默认使用当前的命令空间的default服务账户(每个命名空间都有一个名为 default 的sa资源。)

这里要说明的是每个sa服务账户都会生成一个secret,这个secret里面包含一个token凭证。所以说sa实际认证是通过token实现的认证。(token)

1 2 3 4 5 6 7 8 9 10 11 12 13 14 ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl get sa default NAME SECRETS AGE default 1 67d ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl run podcommon --image=nginx --image-pull-policy=IfNotPresent --labels="name=liruilong" --env="name=liruilong" pod/podcommon created ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl get pods podcommon -o yaml | grep serviceAccount serviceAccount: default serviceAccountName: default - serviceAccountToken: ┌──[root@vms81.liruilongs.github.io]-[~] └─$

可以使用自动挂载给Pod的default服务账户 token访问 API,但是前提是需要给default授权,对于RBAC的方式来讲,需要给角色授权,然后绑定角色。

在 1.6 以上版本中,可以通过在sa上设置automountServiceAccountToken: false来实现不给服务账号自动挂载 API token:

1 2 3 4 5 6 apiVersion: v1 kind: ServiceAccount metadata: name: build-robot automountServiceAccountToken: false ...

在 1.6 以上版本中,你也可以选择不给特定 Pod 自动挂载 API token:

1 2 3 4 5 6 7 8 apiVersion: v1 kind: Pod metadata: name: my-pod spec: serviceAccountName: build-robot automountServiceAccountToken: false ...

如果 Pod 和服务账户都指定了automountServiceAccountToken值,则 Pod 的 spec 优先于服务帐户。

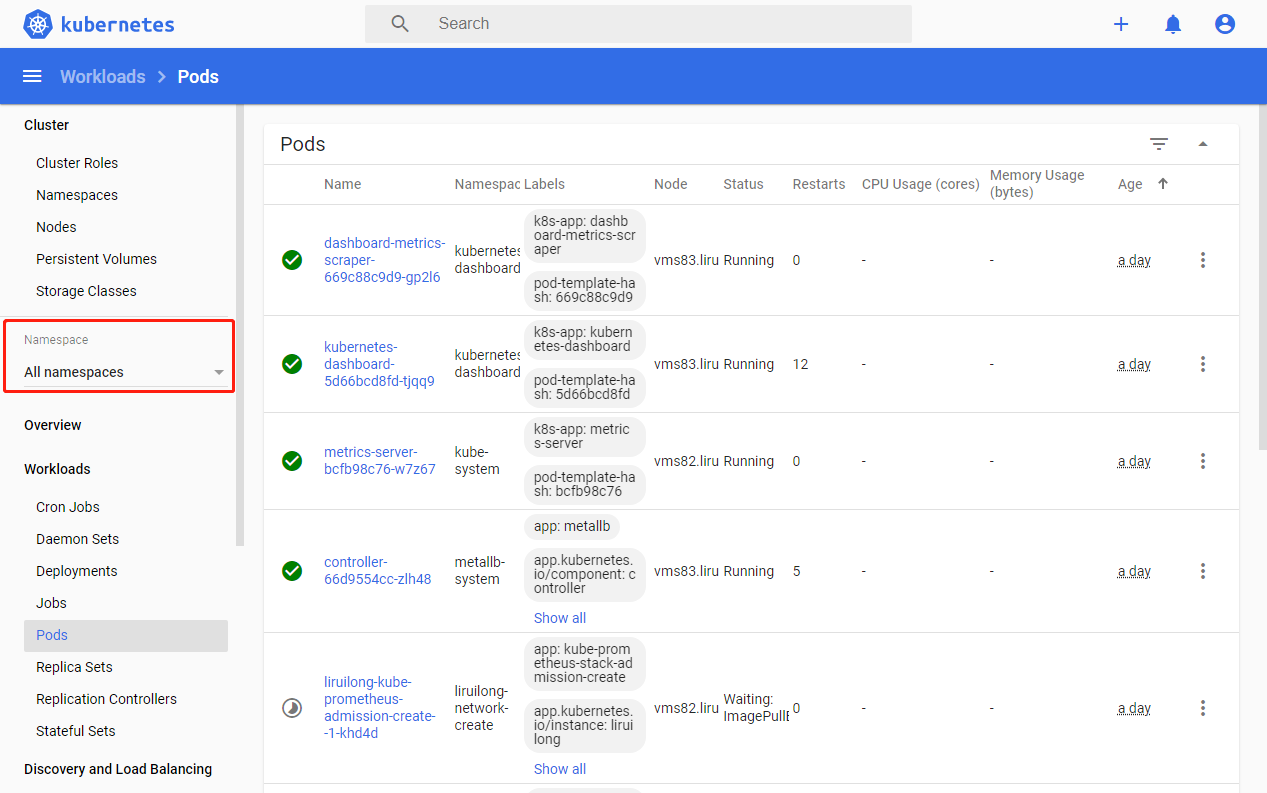

下面看一下kubernetes-dashboard对sa的应用,下面是一个已经部署好的dashboard

关于kubernetes-dashboard是K8s官网提供的Kubernetes的Web UI网页管理工具,可提供部署应用、资源对象管理、容器日志查询、系统监控等常用的集群管理功能。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl get pods -o wide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES dashboard-metrics-scraper-669c88c9d9-2qp62 1/1 Running 8 (7d11h ago) 61d 10.244.88.83 vms81.liruilongs.github.io <none> <none> kubernetes-dashboard-5d66bcd8fd-l22jm 1/1 Running 13 (7d3h ago) 61d 10.244.88.80 vms81.liruilongs.github.io <none> <none> ┌──[root@vms81.liruilongs.github.io]-[~] └─$ ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl get svc -n kubernetes-dashboard NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE dashboard-metrics-scraper ClusterIP 10.109.92.159 <none> 8000/TCP 67d kubernetes-dashboard NodePort 10.106.48.37 <none> 443:32360/TCP 67d ┌──[root@vms81.liruilongs.github.io]-[~] └─$

上面是一个我们之前部署好的面板工具,在部署的过程中,我们要主动创建一个sa(kubernetes-dashboard),并且为这个sa授权,而后,我们的这个面板工具才具有管理K8s集群的能力

创建sa的资源文件

1 2 3 4 5 6 7 apiVersion: v1 kind: ServiceAccount metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard

查看kubernetes-dashboard sa,可以看到对应的token

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl get sa NAME SECRETS AGE ...... kubernetes-dashboard 1 67d ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl get secrets NAME TYPE DATA AGE ......... kubernetes-dashboard-token-wnqqg kubernetes.io/service-account-token 3 67d ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl describe secrets kubernetes-dashboard-token-wnqqg Name: kubernetes-dashboard-token-wnqqg Namespace: kubernetes-dashboard Labels: <none> Annotations: kubernetes.io/service-account.name: kubernetes-dashboard kubernetes.io/service-account.uid: 8e209de5-14a0-4dd5-bd19-2264170531f5 Type: kubernetes.io/service-account-token Data ==== token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImF2MmJVZ3d6M21JRC1BZUwwaHlDdzZHSGNyaVJON1BkUHF6MlhPV2NfX00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdW.... ca.crt: 1099 bytes namespace: 20 bytes ┌──[root@vms81.liruilongs.github.io]-[~] └─$

然后对sa授权,一般通过RBAC的方式.创建角色

1 2 3 4 5 6 7 8 9 10 11 kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard rules: - apiGroups: ["metrics.k8s.io" ] resources: ["pods" , "nodes" ] verbs: ["get" , "list" , "watch" ]

然后绑定角色到sa

1 2 3 4 5 6 7 8 9 10 11 12 13 apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: kubernetes-dashboard roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: kubernetes-dashboard subjects: - kind: ServiceAccount name: kubernetes-dashboard namespace: kubernetes-dashboard

然后pod通过serviceAccount和serviceAccountName来绑定sa,当然这两个参数指定一个就可以了。

1 2 3 4 5 6 7 8 9 10 ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl get pod kubernetes-dashboard-5d66bcd8fd-l22jm -o yaml | grep -C 5 serviceAccount preemptionPolicy: PreemptLowerPriority priority: 0 restartPolicy: Always schedulerName: default-scheduler securityContext: {} serviceAccount: kubernetes-dashboard serviceAccountName: kubernetes-dashboard terminationGracePeriodSeconds: 30

通过yaml文件我们可以看到,值sa为kubernetes-dashboard,当然在资源文件中,是在Deployment和Servcie中指定,

如果sa的automountServiceAccountToken或Pod的automountServiceAccountToken都未显式设置为 false,那么会为对应的 Pod 创建一个 volume,在其中包含用来访问 API 的令牌。

如果为sa对应的token创建了卷,则为 Pod 中的每个容器添加一个 volumeSource,挂载在其 /var/run/secrets/kubernetes.io/serviceaccount 目录下。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl get pod kubernetes-dashboard-5d66bcd8fd-l22jm -o yaml | grep -C 20 -i serviceAccount ........... volumeMounts: ........... - mountPath: /var/run/secrets/kubernetes.io/serviceaccount name: kube-api-access-8jlj7 readOnly: true ......... serviceAccount: kubernetes-dashboard serviceAccountName: kubernetes-dashboard ......... volumes: .......... - name: kube-api-access-8jlj7 projected: defaultMode: 420 sources: - serviceAccountToken: expirationSeconds: 3607 path: token ........ ┌──[root@vms81.liruilongs.github.io]-[~] └─$

通过配置文件可以看到,token通过卷的方式挂载到了容器里的/var/run/secrets/kubernetes.io/serviceaccount 目录,但是需要注意的是,这个token和sa对应的token在1.20版本之后进行了处理,不一样,在之前的版本中是一样的。

Service Account Demo 创建一个sa

1 2 3 ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl create sa sa-demo serviceaccount/sa-demo created

查看对应的secret和token

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl get secrets sa-demo-token-pdrs8 NAME TYPE DATA AGE sa-demo-token-pdrs8 kubernetes.io/service-account-token 3 43s ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl describe secrets sa-demo-token-pdrs8 Name: sa-demo-token-pdrs8 Namespace: kubernetes-dashboard Labels: <none> Annotations: kubernetes.io/service-account.name: sa-demo kubernetes.io/service-account.uid: 7003de88-803a-4dae-a6e3-d647d0517c92 Type: kubernetes.io/service-account-token Data ==== ca.crt: 1099 bytes namespace: 20 bytes token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImF2MmJVZ3d6M21JRC1BZUwwaHlDdzZHSGNyaVJON1BkUHF6MlhPV2NfX00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJzYS1kZW1vLXRva2VuLXBkcnM4Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6InNhLWRlbW8iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI3MDAzZGU4OC04MDNhLTRkYWUtYTZlMy1kNjQ3ZDA1MTdjOTIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6c2EtZGVtbyJ9.sVAmtpfqFREjUCd9bkQvMuHpasXOcKYLJvVsJLLe6ufP4zs8ZVt6HqH4ylsxbmwtibNXBV9hVNEU_2X3T2enOjOSYuiyaEP4BifDQN7DmZbu2uXQCBglixaNB7ZIIPX_oQsW0ndBNonVqMSMm-ZItYDzLo-QTOxTxc5OQZ3zSBJqITAvWFlshWA7mKntNmWw6m5KunjhYZs14Lpa-NhknYS9G6ur8SKY4XdE44hzQhD7h4y01ZezZGR3IdGd3HktA5dWYTRXXr9H00odey2YtGfj40Vql3rMrdMPJOFbAozjyaWxhmSpjHVGcbXawai8znKPCdGlW4l2aRmbghovsw ┌──[root@vms81.liruilongs.github.io]-[~] └─$

编写pod资源文件,指定sa为刚才创建的sa

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl run pod-sa --image=nginx --image-pull-policy=IfNotPresent --dry-run=client -o yaml > pod-sa.yaml ┌──[root@vms81.liruilongs.github.io]-[~] └─$vim pod-sa.yaml ┌──[root@vms81.liruilongs.github.io]-[~] └─$cat pod-sa.yaml apiVersion: v1 kind: Pod metadata: creationTimestamp: null labels: run: pod-demo name: pod-demo spec: serviceAccount: sa-demo containers: - image: nginx imagePullPolicy: IfNotPresent name: pod-demo resources: {} dnsPolicy: ClusterFirst restartPolicy: Always status: {} ┌──[root@vms81.liruilongs.github.io]-[~] └─$

查看创建的pod

1 2 3 4 5 6 7 8 9 ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl apply -f pod-sa.yaml pod/pod-demo created ┌──[root@vms81.liruilongs.github.io]-[~] └─$kubectl get pods pod-demo NAME READY STATUS RESTARTS AGE pod-demo 1/1 Running 0 95s ┌──[root@vms81.liruilongs.github.io]-[~] └─$

对于deplay的sa修改可以直接通过set的方式设置,时间关系这里不多讲啦,文末的资源文件中有demo

下面我们来看一道Service Account相关习题,这是某一期CKA认证的一道考题

创建一个名为deployment-clusterrole且仅允许创建以下资源类型的新ClusterRole:

Deployment

StatefulSet

DaemonSet

题目很简单,一般的生产我们也会涉及,指定权限创建一个集群角色,然后把这个集群角色绑定到一个新建的sa上。

1 2 3 4 5 6 kubectl create clusterrole deployment-clusterrole --verb=create --resource=deployments,statefulsets,daemonsets kubectl -n app-team create serviceaccount cicd-token kubectl -n app-team create rolebinding cicd-token-rolebinding --clusterrole=deployment-clusterrole --serviceaccount=app-team1:cicd-token

对应 sa 学习,感觉 kubernetes-dashboard 的是一个很好的 Demo。这里把面板的资源文件贴出来,感兴趣小伙伴可以研究下

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260 261 262 263 264 265 266 267 268 269 270 271 272 273 274 275 276 277 278 279 280 281 282 283 284 285 286 287 288 289 290 291 292 293 294 295 296 297 298 299 300 301 302 303 304 305 306 apiVersion: v1 kind: Namespace metadata: name: kubernetes-dashboard --- apiVersion: v1 kind: ServiceAccount metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard --- kind: Service apiVersion: v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard spec: ports: - port: 443 targetPort: 8443 selector: k8s-app: kubernetes-dashboard --- apiVersion: v1 kind: Secret metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-certs namespace: kubernetes-dashboard type: Opaque --- apiVersion: v1 kind: Secret metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-csrf namespace: kubernetes-dashboard type: Opaque data: csrf: "" --- apiVersion: v1 kind: Secret metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-key-holder namespace: kubernetes-dashboard type: Opaque --- kind: ConfigMap apiVersion: v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard-settings namespace: kubernetes-dashboard --- kind: Role apiVersion: rbac.authorization.k8s.io/v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard rules: - apiGroups: ["" ] resources: ["secrets" ] resourceNames: ["kubernetes-dashboard-key-holder" , "kubernetes-dashboard-certs" , "kubernetes-dashboard-csrf" ] verbs: ["get" , "update" , "delete" ] - apiGroups: ["" ] resources: ["configmaps" ] resourceNames: ["kubernetes-dashboard-settings" ] verbs: ["get" , "update" ] - apiGroups: ["" ] resources: ["services" ] resourceNames: ["heapster" , "dashboard-metrics-scraper" ] verbs: ["proxy" ] - apiGroups: ["" ] resources: ["services/proxy" ] resourceNames: ["heapster" , "http:heapster:" , "https:heapster:" , "dashboard-metrics-scraper" , "http:dashboard-metrics-scraper" ] verbs: ["get" ] --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard rules: - apiGroups: ["metrics.k8s.io" ] resources: ["pods" , "nodes" ] verbs: ["get" , "list" , "watch" ] --- apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: kubernetes-dashboard subjects: - kind: ServiceAccount name: kubernetes-dashboard namespace: kubernetes-dashboard --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: kubernetes-dashboard roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: kubernetes-dashboard subjects: - kind: ServiceAccount name: kubernetes-dashboard namespace: kubernetes-dashboard --- kind: Deployment apiVersion: apps/v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard spec: replicas: 1 revisionHistoryLimit: 10 selector: matchLabels: k8s-app: kubernetes-dashboard template: metadata: labels: k8s-app: kubernetes-dashboard spec: containers: - name: kubernetes-dashboard image: registry.cn-hangzhou.aliyuncs.com/kube-iamges/dashboard:v2.0.0-beta8 imagePullPolicy: IfNotPresent ports: - containerPort: 8443 protocol: TCP args: - --auto-generate-certificates - --namespace=kubernetes-dashboard volumeMounts: - name: kubernetes-dashboard-certs mountPath: /certs - mountPath: /tmp name: tmp-volume livenessProbe: httpGet: scheme: HTTPS path: / port: 8443 initialDelaySeconds: 30 timeoutSeconds: 30 securityContext: allowPrivilegeEscalation: false readOnlyRootFilesystem: true runAsUser: 1001 runAsGroup: 2001 volumes: - name: kubernetes-dashboard-certs secret: secretName: kubernetes-dashboard-certs - name: tmp-volume emptyDir: {} serviceAccountName: kubernetes-dashboard nodeSelector: "kubernetes.io/os": linux tolerations: - key: node-role.kubernetes.io/master effect: NoSchedule --- kind: Service apiVersion: v1 metadata: labels: k8s-app: dashboard-metrics-scraper name: dashboard-metrics-scraper namespace: kubernetes-dashboard spec: ports: - port: 8000 targetPort: 8000 selector: k8s-app: dashboard-metrics-scraper --- kind: Deployment apiVersion: apps/v1 metadata: labels: k8s-app: dashboard-metrics-scraper name: dashboard-metrics-scraper namespace: kubernetes-dashboard spec: replicas: 1 revisionHistoryLimit: 10 selector: matchLabels: k8s-app: dashboard-metrics-scraper template: metadata: labels: k8s-app: dashboard-metrics-scraper annotations: seccompProfile: 'runtime/default' spec: containers: - name: dashboard-metrics-scraper image: registry.cn-hangzhou.aliyuncs.com/kube-iamges/metrics-scraper:v1.0.1 imagePullPolicy: IfNotPresent ports: - containerPort: 8000 protocol: TCP livenessProbe: httpGet: scheme: HTTP path: / port: 8000 initialDelaySeconds: 30 timeoutSeconds: 30 volumeMounts: - mountPath: /tmp name: tmp-volume securityContext: allowPrivilegeEscalation: false readOnlyRootFilesystem: true runAsUser: 1001 runAsGroup: 2001 serviceAccountName: kubernetes-dashboard nodeSelector: "kubernetes.io/os": linux tolerations: - key: node-role.kubernetes.io/master effect: NoSchedule volumes: - name: tmp-volume emptyDir: {}