The spark of life is not a goal; it is a passion for living. (from the film Soul)

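Preface

I was asked this question, so I organized my notes on it.

When kube-proxy runs in iptables mode, how does traffic that enters through a published Service (SVC) reach a Pod?

This post covers:

A brief introduction to the question

A walk through the packet path to the Pod for the three common ways of publishing a Service

The cluster used here runs v1.25.1

The demos use ansible

If I have misunderstood something, corrections are welcome

Reading this post requires some familiarity with iptables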


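With kube-proxy in iptables mode, how does traffic entering through a Service reach a Pod?

kube-proxy is the Kubernetes component responsible for routing requests for a Service to its backend Pods. In iptables mode, kube-proxy creates iptables rules on every node that route Service requests to the backend Pods.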

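More precisely, kube-proxy creates a set of user-defined iptables chains. Packets enter them through a jump from a built-in chain (for example PREROUTING, INPUT, or FORWARD). Inside the user-defined chains the packet is matched and an action is taken: a jump to another user-defined chain, load balancing (done by iptables itself, in random mode with a given probability), and DNAT and SNAT. In the end, access to the SVC is delivered to an actual Pod, which also involves a routing-table lookup for the next hop.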

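There are three common ways of publishing an SVC (ExternalName is not considered here). After a short introduction, we follow the packet path for each of them to see what actually happens.

ClusterIP: the default Service type. The Service is exposed inside the cluster and gives other objects in the cluster a stable IP address. It can only be reached from inside the cluster.

NodePort: the Service is exposed on a port of every node's IP address. External clients reach the Service through a node IP and that port. It can be reached from inside and outside the cluster.

LoadBalancer: the Service is exposed through an external load balancer and is reached via the load balancer's IP address. It can be reached from inside and outside the cluster.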

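When I first started learning this material, what I saw differed slightly from what the books describe. I asked several experts without getting an answer, and only later found that the cause was the iptables version: different versions of iptables display the kernel rules slightly differently. For that reason two versions of iptables are used here.

Whatever the publishing method, the Service can be reached from inside the cluster, so the demos run the commands on all nodes in a batch. ansible is used for convenience; this is not repeated below.

Check the iptables version on all nodes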

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$ansible-console -i host.yaml
    Welcome to the ansible console.
    Type help or ? to list commands.

    root@all (6)[f:5]
    192.168.26.102 | CHANGED | rc=0 >>
    iptables v1.4.21
    192.168.26.101 | CHANGED | rc=0 >>
    iptables v1.4.21
    192.168.26.100 | CHANGED | rc=0 >>
    iptables v1.8.7 (legacy)
    192.168.26.105 | CHANGED | rc=0 >>
    iptables v1.4.21
    192.168.26.106 | CHANGED | rc=0 >>
    iptables v1.4.21
    192.168.26.103 | CHANGED | rc=0 >>
    iptables v1.4.21
    root@all (6)[f:5]

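Node 192.168.26.100 runs v1.8.7, which displays the rules correctly. The other nodes run v1.4.21, which has a display problem: the kernel holds the correct rules, but iptables 1.4 does not print them correctly. The problem only shows up in the rules that DNAT to a Pod.

Current cluster information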

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$kubectl get nodes
    NAME                          STATUS   ROLES           AGE   VERSION
    vms100.liruilongs.github.io   Ready    control-plane   10d   v1.25.1
    vms101.liruilongs.github.io   Ready    control-plane   10d   v1.25.1
    vms102.liruilongs.github.io   Ready    control-plane   10d   v1.25.1
    vms103.liruilongs.github.io   Ready    <none>          10d   v1.25.1
    vms105.liruilongs.github.io   Ready    <none>          10d   v1.25.1
    vms106.liruilongs.github.io   Ready    <none>          10d   v1.25.1

Let's start with NodePort, the type used most often in test environments.

NodePort

Find a NodePort Service that is deployed in the current environment

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$kubectl get svc -A | grep NodePort
    velero   minio   NodePort   10.98.180.238   <none>   9000:30934/TCP,9099:30450/TCP   69d

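The console port of this SVC can be reached from inside or outside the cluster through any node IP plus port 30450. The details of the SVC are shown below.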

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$kubectl describe svc -n velero minio
    Name:                     minio
    Namespace:                velero
    Labels:                   component=minio
    Annotations:              <none>
    Selector:                 component=minio
    Type:                     NodePort
    IP Family Policy:         SingleStack
    IP Families:              IPv4
    IP:                       10.98.180.238
    IPs:                      10.98.180.238
    Port:                     api  9000/TCP
    TargetPort:               9000/TCP
    NodePort:                 api  30934/TCP
    Endpoints:                10.244.169.89:9000,10.244.38.178:9000
    Port:                     console  9099/TCP
    TargetPort:               9090/TCP
    NodePort:                 console  30450/TCP
    Endpoints:                10.244.169.89:9090,10.244.38.178:9090
    Session Affinity:         None
    External Traffic Policy:  Cluster
    Events:                   <none>
    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$

The Pod replicas of the corresponding Deployment

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$kubectl get pod -n velero -o wide
    NAME                    READY   STATUS    RESTARTS      AGE   IP              NODE                          NOMINATED NODE   READINESS GATES
    minio-b6d98746c-lxt2c   1/1     Running   0             66m   10.244.38.178   vms103.liruilongs.github.io   <none>           <none>
    minio-b6d98746c-m7rzc   1/1     Running   6 (15h ago)   11d   10.244.169.89   vms105.liruilongs.github.io   <none>           <none>
    .....

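Now let's analyze the path of a packet. The exposed port is 30450, so some rule must match this port. We therefore search for the port first: iptables-save | grep 30450 shows which chains match it and what action they take.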

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$ansible-console -i host.yaml
    Welcome to the ansible console.
    Type help or ? to list commands.

    root@all (6)[f:5]
    192.168.26.100 | CHANGED | rc=0 >>
    -A KUBE-NODEPORTS -p tcp -m comment --comment "velero/minio:console" -m tcp --dport 30450 -j KUBE-EXT-K6NOPV6C6M2DHO7B
    192.168.26.101 | CHANGED | rc=0 >>
    -A KUBE-NODEPORTS -p tcp -m comment --comment "velero/minio:console" -m tcp --dport 30450 -j KUBE-EXT-K6NOPV6C6M2DHO7B
    192.168.26.102 | CHANGED | rc=0 >>
    -A KUBE-NODEPORTS -p tcp -m comment --comment "velero/minio:console" -m tcp --dport 30450 -j KUBE-EXT-K6NOPV6C6M2DHO7B
    192.168.26.105 | CHANGED | rc=0 >>
    -A KUBE-NODEPORTS -p tcp -m comment --comment "velero/minio:console" -m tcp --dport 30450 -j KUBE-EXT-K6NOPV6C6M2DHO7B
    192.168.26.106 | CHANGED | rc=0 >>
    -A KUBE-NODEPORTS -p tcp -m comment --comment "velero/minio:console" -m tcp --dport 30450 -j KUBE-EXT-K6NOPV6C6M2DHO7B
    192.168.26.103 | CHANGED | rc=0 >>
    -A KUBE-NODEPORTS -p tcp -m comment --comment "velero/minio:console" -m tcp --dport 30450 -j KUBE-EXT-K6NOPV6C6M2DHO7B

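The output shows that every node has a user-defined chain KUBE-NODEPORTS with a rule that matches this port and then jumps, handing the packet to the user-defined chain KUBE-EXT-K6NOPV6C6M2DHO7B.

That raises a question: which chain jumps to KUBE-NODEPORTS?

In iptables, rules in user-defined chains are essentially no different from rules in the five predefined chains (PREROUTING/INPUT/FORWARD/OUTPUT/POSTROUTING). A user-defined chain, however, is not bound to any netfilter hook, so it is never triggered on its own. It can only be reached by a jump from a rule in another chain, like the -j KUBE-EXT-K6NOPV6C6M2DHO7B above, where one user-defined chain jumps to another.

Let's check the chains in netfilter hook order. Packets arrive first at the PREROUTING chain, but filtering its rules finds no jump to KUBE-NODEPORTS.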

    root@all (6)[f:5]
    192.168.26.101 | FAILED | rc=1 >>
    non-zero return code
    192.168.26.105 | FAILED | rc=1 >>
    non-zero return code
    192.168.26.100 | FAILED | rc=1 >>
    non-zero return code
    192.168.26.102 | FAILED | rc=1 >>
    non-zero return code
    192.168.26.106 | FAILED | rc=1 >>
    non-zero return code
    192.168.26.103 | FAILED | rc=1 >>
    non-zero return code

After the PREROUTING chain comes a routing decision, and the packet may go to either FORWARD or INPUT, so we check both in turn.

The FORWARD chain has no such jump

    root@all (6)[f:5]
    192.168.26.102 | FAILED | rc=1 >>
    non-zero return code
    192.168.26.101 | FAILED | rc=1 >>
    non-zero return code
    192.168.26.106 | FAILED | rc=1 >>
    non-zero return code
    192.168.26.100 | FAILED | rc=1 >>
    non-zero return code
    192.168.26.105 | FAILED | rc=1 >>
    non-zero return code
    192.168.26.103 | FAILED | rc=1 >>
    non-zero return code
    root@all (6)[f:5]

The INPUT chain does have a jump: its rule with -j KUBE-NODEPORTS jumps to the chain where we first saw the port being matched.

    root@all (6)[f:5]
    192.168.26.101 | CHANGED | rc=0 >>
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
    192.168.26.100 | CHANGED | rc=0 >>
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
    192.168.26.102 | CHANGED | rc=0 >>
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
    192.168.26.106 | CHANGED | rc=0 >>
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
    192.168.26.105 | CHANGED | rc=0 >>
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
    192.168.26.103 | CHANGED | rc=0 >>
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS

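This jump looks plausible, but something is off: the comment says the rule is for health-check ports, whereas what we are after should be NodePort related. Grepping directly with grep -e '-j KUBE-NODEPORTS' reveals one more rule, in the KUBE-SERVICES chain, shown below.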

    root@all (6)[f:5]
    192.168.26.106 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
    192.168.26.102 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
    192.168.26.105 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
    192.168.26.101 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
    192.168.26.100 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
    192.168.26.103 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS
    -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
    root@all (6)[f:5]

The result of grepping for '-j KUBE-NODEPORTS' thus contains a KUBE-SERVICES rule on every node: for packets whose destination is a local address (-m addrtype --dst-type LOCAL), the KUBE-SERVICES chain jumps to KUBE-NODEPORTS.

Tracing the KUBE-SERVICES chain one step further back shows that it is jumped to from the built-in PREROUTING chain.

    root@all (6)[f:5]
    192.168.26.106 | CHANGED | rc=0 >>
    -A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
    192.168.26.101 | CHANGED | rc=0 >>
    -A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
    192.168.26.102 | CHANGED | rc=0 >>
    -A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
    192.168.26.100 | CHANGED | rc=0 >>
    -A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
    192.168.26.105 | CHANGED | rc=0 >>
    -A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
    192.168.26.103 | CHANGED | rc=0 >>
    -A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
    root@all (6)[f:5]

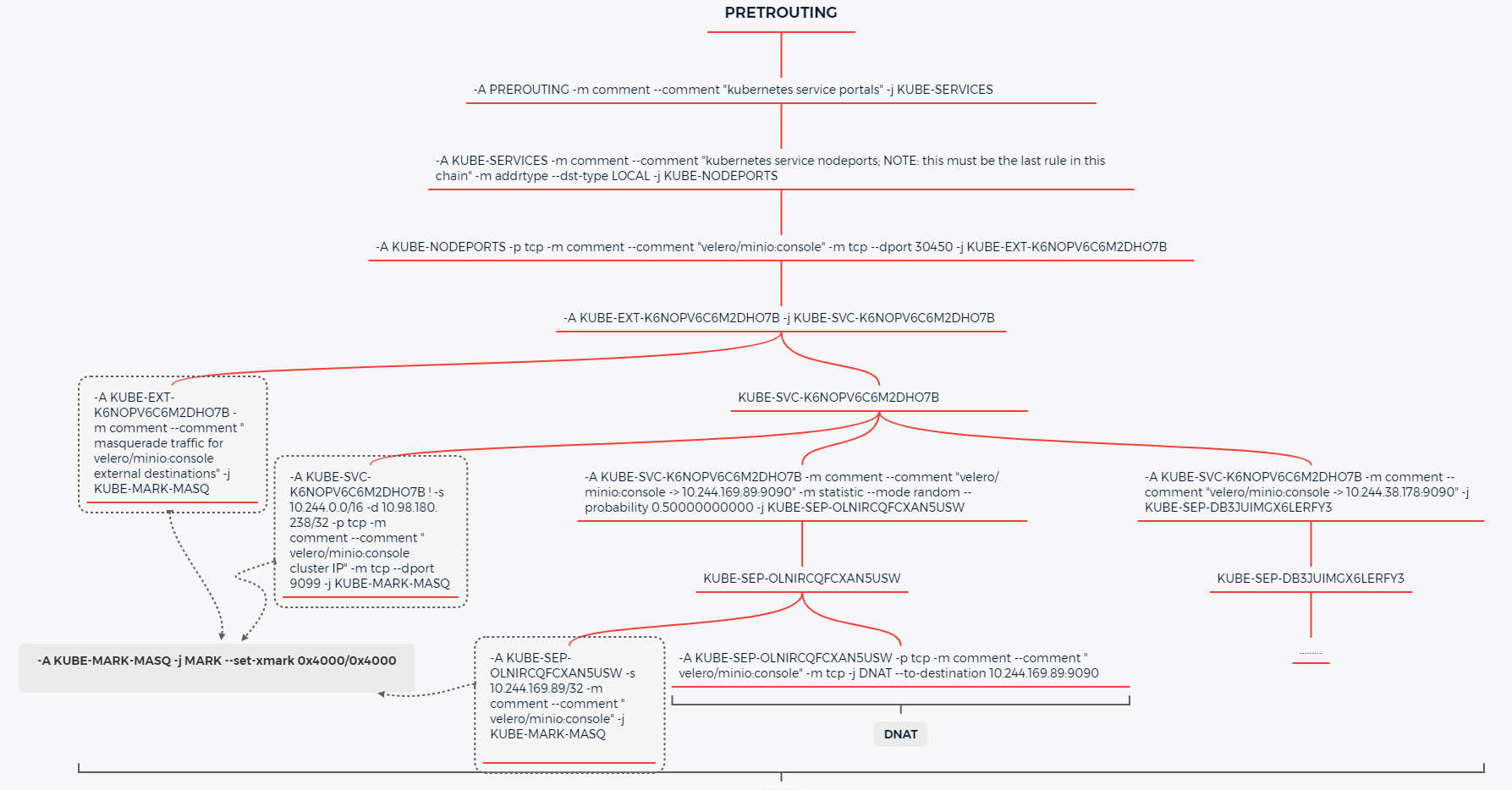

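So, when a NodePort SVC is accessed through node IP + NodePort, the packet first jumps on that node from the built-in PREROUTING chain to the KUBE-SERVICES chain, and from KUBE-SERVICES to the KUBE-NODEPORTS chain, where the port is matched.

In other words, for a NodePort SVC, KUBE-NODEPORTS is the first Service-specific user-defined chain that does the real matching once the packet has arrived at a node.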
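The backward search done by hand above can be sketched in a few lines of Python. This is only an illustration: the three rules are copied from the outputs in this post, the helper names parse_jumps and trace_back are made up here, and the sample deliberately leaves out the health-check rule in INPUT.

```python
import re

# Three rules copied from the iptables-save output shown in this post.
RULES = """
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS
-A KUBE-NODEPORTS -p tcp -m comment --comment "velero/minio:console" -m tcp --dport 30450 -j KUBE-EXT-K6NOPV6C6M2DHO7B
"""

BUILTIN = {"PREROUTING", "INPUT", "FORWARD", "OUTPUT", "POSTROUTING"}


def parse_jumps(text):
    """Return one (source_chain, target) pair per '-A' rule."""
    jumps = []
    for line in text.strip().splitlines():
        m = re.match(r"-A (\S+) .*-j (\S+)", line)
        if m:
            jumps.append((m.group(1), m.group(2)))
    return jumps


def trace_back(chain, jumps):
    """Walk backwards from `chain` until a built-in chain is reached."""
    path = [chain]
    while path[0] not in BUILTIN:
        # Chains that jump to the current head and are not already on the path.
        callers = [src for src, dst in jumps if dst == path[0] and src not in path]
        if not callers:
            break
        path.insert(0, callers[0])
    return path


print(" -> ".join(trace_back("KUBE-EXT-K6NOPV6C6M2DHO7B", parse_jumps(RULES))))
```

Feeding the full iptables-save output of a node into parse_jumps would work the same way, although a chain with several callers would then need a choice between them.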

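According to the KUBE-NODEPORTS rule found at the very beginning, the packet jumps to KUBE-EXT-K6NOPV6C6M2DHO7B, so let's look at that chain next.

Querying it with iptables-save | grep -e '-A KUBE-EXT-K6NOPV6C6M2DHO7B' shows two rules. For now only the second one matters, -A KUBE-EXT-K6NOPV6C6M2DHO7B -j KUBE-SVC-K6NOPV6C6M2DHO7B: another jump, this time to the chain that corresponds to the SVC.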

    root@all (6)[f:5]
    192.168.26.102 | CHANGED | rc=0 >>
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -m comment --comment "masquerade traffic for velero/minio:console external destinations" -j KUBE-MARK-MASQ
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -j KUBE-SVC-K6NOPV6C6M2DHO7B
    192.168.26.101 | CHANGED | rc=0 >>
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -m comment --comment "masquerade traffic for velero/minio:console external destinations" -j KUBE-MARK-MASQ
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -j KUBE-SVC-K6NOPV6C6M2DHO7B
    192.168.26.105 | CHANGED | rc=0 >>
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -m comment --comment "masquerade traffic for velero/minio:console external destinations" -j KUBE-MARK-MASQ
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -j KUBE-SVC-K6NOPV6C6M2DHO7B
    192.168.26.100 | CHANGED | rc=0 >>
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -m comment --comment "masquerade traffic for velero/minio:console external destinations" -j KUBE-MARK-MASQ
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -j KUBE-SVC-K6NOPV6C6M2DHO7B
    192.168.26.106 | CHANGED | rc=0 >>
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -m comment --comment "masquerade traffic for velero/minio:console external destinations" -j KUBE-MARK-MASQ
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -j KUBE-SVC-K6NOPV6C6M2DHO7B
    192.168.26.103 | CHANGED | rc=0 >>
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -m comment --comment "masquerade traffic for velero/minio:console external destinations" -j KUBE-MARK-MASQ
    -A KUBE-EXT-K6NOPV6C6M2DHO7B -j KUBE-SVC-K6NOPV6C6M2DHO7B
    root@all (6)[f:5]

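Querying the chain that was jumped to, KUBE-SVC-K6NOPV6C6M2DHO7B, shows three rules on each node. For now we only care about the last two, which jump to:

KUBE-SEP-OLNIRCQFCXAN5USW

KUBE-SEP-DB3JUIMGX6LERFY3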

    root@all (6)[f:5]
    192.168.26.101 | CHANGED | rc=0 >>
    -A KUBE-SVC-K6NOPV6C6M2DHO7B ! -s 10.244.0.0/16 -d 10.98.180.238/32 -p tcp -m comment --comment "velero/minio:console cluster IP" -m tcp --dport 9099 -j KUBE-MARK-MASQ
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.169.89:9090" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-OLNIRCQFCXAN5USW
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.38.178:9090" -j KUBE-SEP-DB3JUIMGX6LERFY3
    192.168.26.105 | CHANGED | rc=0 >>
    -A KUBE-SVC-K6NOPV6C6M2DHO7B ! -s 10.244.0.0/16 -d 10.98.180.238/32 -p tcp -m comment --comment "velero/minio:console cluster IP" -m tcp --dport 9099 -j KUBE-MARK-MASQ
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.169.89:9090" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-OLNIRCQFCXAN5USW
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.38.178:9090" -j KUBE-SEP-DB3JUIMGX6LERFY3
    192.168.26.100 | CHANGED | rc=0 >>
    -A KUBE-SVC-K6NOPV6C6M2DHO7B ! -s 10.244.0.0/16 -d 10.98.180.238/32 -p tcp -m comment --comment "velero/minio:console cluster IP" -m tcp --dport 9099 -j KUBE-MARK-MASQ
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.169.89:9090" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-OLNIRCQFCXAN5USW
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.38.178:9090" -j KUBE-SEP-DB3JUIMGX6LERFY3
    192.168.26.102 | CHANGED | rc=0 >>
    -A KUBE-SVC-K6NOPV6C6M2DHO7B ! -s 10.244.0.0/16 -d 10.98.180.238/32 -p tcp -m comment --comment "velero/minio:console cluster IP" -m tcp --dport 9099 -j KUBE-MARK-MASQ
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.169.89:9090" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-OLNIRCQFCXAN5USW
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.38.178:9090" -j KUBE-SEP-DB3JUIMGX6LERFY3
    192.168.26.106 | CHANGED | rc=0 >>
    -A KUBE-SVC-K6NOPV6C6M2DHO7B ! -s 10.244.0.0/16 -d 10.98.180.238/32 -p tcp -m comment --comment "velero/minio:console cluster IP" -m tcp --dport 9099 -j KUBE-MARK-MASQ
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.169.89:9090" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-OLNIRCQFCXAN5USW
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.38.178:9090" -j KUBE-SEP-DB3JUIMGX6LERFY3
    192.168.26.103 | CHANGED | rc=0 >>
    -A KUBE-SVC-K6NOPV6C6M2DHO7B ! -s 10.244.0.0/16 -d 10.98.180.238/32 -p tcp -m comment --comment "velero/minio:console cluster IP" -m tcp --dport 9099 -j KUBE-MARK-MASQ
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.169.89:9090" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-OLNIRCQFCXAN5USW
    -A KUBE-SVC-K6NOPV6C6M2DHO7B -m comment --comment "velero/minio:console -> 10.244.38.178:9090" -j KUBE-SEP-DB3JUIMGX6LERFY3
    root@all (6)[f:5]

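The number of these chains equals the number of endpoints of the SVC: each KUBE-SEP chain stands for one endpoint. In the first of the two rules, -m statistic --mode random --probability 0.50000000000 means that iptables load balancing runs in random mode and this rule matches with a probability of 50%.

So KUBE-SVC-K6NOPV6C6M2DHO7B jumps to KUBE-SEP-OLNIRCQFCXAN5USW half of the time. When that rule does not match, the packet falls through to the last rule, which jumps unconditionally to KUBE-SEP-DB3JUIMGX6LERFY3, so each of the two endpoints receives about half of the new connections.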
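How the per-rule probabilities add up to an even split can be checked with a short calculation. The sketch below assumes that, for n endpoints, rule i (counting from 0) carries the probability 1/(n-i) and the last rule is unconditional; that matches the 0.5 seen above for two endpoints, but the general formula is my assumption here, and the function names are made up.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Probability on each KUBE-SEP jump for n endpoints (the last one is 1)."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_share(n):
    """Overall chance that a new connection ends up at each endpoint."""
    shares = []
    remaining = Fraction(1)  # probability that no earlier rule has matched
    for p in rule_probabilities(n):
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


print(rule_probabilities(2))  # per-rule values for the two minio endpoints
print(effective_share(3))     # every endpoint gets the same overall share
```

Rules are evaluated top to bottom, so a later rule is only reached when all earlier ones did not match; that is why the per-rule values grow while the overall shares stay equal.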

Pick either KUBE-SEP-XXX chain and query the iptables rules that kube-proxy created for it

    root@all (6)[f:5]
    192.168.26.102 | CHANGED | rc=0 >>
    -A KUBE-SEP-OLNIRCQFCXAN5USW -s 10.244.169.89/32 -m comment --comment "velero/minio:console" -j KUBE-MARK-MASQ
    -A KUBE-SEP-OLNIRCQFCXAN5USW -p tcp -m comment --comment "velero/minio:console" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0
    192.168.26.100 | CHANGED | rc=0 >>
    -A KUBE-SEP-OLNIRCQFCXAN5USW -s 10.244.169.89/32 -m comment --comment "velero/minio:console" -j KUBE-MARK-MASQ
    -A KUBE-SEP-OLNIRCQFCXAN5USW -p tcp -m comment --comment "velero/minio:console" -m tcp -j DNAT --to-destination 10.244.169.89:9090
    192.168.26.105 | CHANGED | rc=0 >>
    -A KUBE-SEP-OLNIRCQFCXAN5USW -s 10.244.169.89/32 -m comment --comment "velero/minio:console" -j KUBE-MARK-MASQ
    -A KUBE-SEP-OLNIRCQFCXAN5USW -p tcp -m comment --comment "velero/minio:console" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0
    192.168.26.101 | CHANGED | rc=0 >>
    -A KUBE-SEP-OLNIRCQFCXAN5USW -s 10.244.169.89/32 -m comment --comment "velero/minio:console" -j KUBE-MARK-MASQ
    -A KUBE-SEP-OLNIRCQFCXAN5USW -p tcp -m comment --comment "velero/minio:console" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0
    192.168.26.106 | CHANGED | rc=0 >>
    -A KUBE-SEP-OLNIRCQFCXAN5USW -s 10.244.169.89/32 -m comment --comment "velero/minio:console" -j KUBE-MARK-MASQ
    -A KUBE-SEP-OLNIRCQFCXAN5USW -p tcp -m comment --comment "velero/minio:console" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0
    192.168.26.103 | CHANGED | rc=0 >>
    -A KUBE-SEP-OLNIRCQFCXAN5USW -s 10.244.169.89/32 -m comment --comment "velero/minio:console" -j KUBE-MARK-MASQ
    -A KUBE-SEP-OLNIRCQFCXAN5USW -p tcp -m comment --comment "velero/minio:console" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0
    root@all (6)[f:5]

Here the output differs with the iptables version. The newer iptables shows the packet being DNATed to the Pod's IP and port, 10.244.169.89:9090:

    -A KUBE-SEP-OLNIRCQFCXAN5USW -p tcp -m comment --comment "velero/minio:console" -m tcp -j DNAT --to-destination 10.244.169.89:9090

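The older iptables prints the rule as if the packet were sent to port 0 of an unspecified address, which looks as though the packet were simply dropped. I found the explanation in a GitHub issue: this is a display problem in iptables 1.4. The kernel holds the correct rule (as the fact that iptables 1.8 keeps displaying it correctly shows); iptables 1.4 just does not print it correctly.

If you are interested, the description can be found in the issue below; the rule as printed by iptables 1.4 follows the link.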

https://github.com/kubernetes/kubernetes/issues/114537

    -A KUBE-SEP-OLNIRCQFCXAN5USW -p tcp -m comment --comment "velero/minio:console" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0

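The rule above finally DNATs the packet to 10.244.169.89:9090. That address is not on the node's own network, so the kernel consults the routing table for the next hop. Here is the routing information of one node.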

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$ansible-console -i host.yaml --limit 192.168.26.100
    Welcome to the ansible console.
    Type help or ? to list commands.

    root@all (1)[f:5]
    192.168.26.100 | CHANGED | rc=0 >>
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    default         192.168.26.2    0.0.0.0         UG    0      0        0 ens32
    10.244.31.64    vms106.liruilon 255.255.255.192 UG    0      0        0 tunl0
    10.244.38.128   vms103.liruilon 255.255.255.192 UG    0      0        0 tunl0
    10.244.63.64    vms102.liruilon 255.255.255.192 UG    0      0        0 tunl0
    10.244.169.64   vms105.liruilon 255.255.255.192 UG    0      0        0 tunl0
    10.244.198.0    vms101.liruilon 255.255.255.192 UG    0      0        0 tunl0
    10.244.239.128  0.0.0.0         255.255.255.192 U     0      0        0 *
    10.244.239.129  0.0.0.0         255.255.255.255 UH    0      0        0 calic2f7856928d
    10.244.239.132  0.0.0.0         255.255.255.255 UH    0      0        0 cali349ff1af8c4
    link-local      0.0.0.0         255.255.0.0     U     1002   0        0 ens32
    172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
    192.168.26.0    0.0.0.0         255.255.255.0   U     0      0        0 ens32
    root@all (1)[f:5]
    192.168.26.100 | CHANGED | rc=0 >>
    10.244.169.64/26 via 192.168.26.105 dev tunl0 proto bird onlink
    ......

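The Pod IP is 10.244.169.89, and the routing information confirms that it matches the route 10.244.169.64/26 via 192.168.26.105 dev tunl0 proto bird onlink.

This route covers the 10.244.169.64/26 block, which contains 2^(32-26) = 64 addresses, from 10.244.169.64 to 10.244.169.127, and it gives 192.168.26.105 as the next hop.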
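The address arithmetic for the /26 block can be double-checked with Python's standard ipaddress module. This is just a quick check of the numbers quoted above, not part of the cluster setup.

```python
import ipaddress

# The block from the route and the Pod IP from the DNAT rule.
block = ipaddress.ip_network("10.244.169.64/26")
pod_ip = ipaddress.ip_address("10.244.169.89")

print(block.num_addresses)   # size of the block: 2 ** (32 - 26)
print(block[0], block[-1])   # first and last address of the block
print(pod_ip in block)       # whether the Pod IP is covered by this route
```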

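Looking at this route on all nodes, 192.168.26.105 is the only node without the via entry. It has a blackhole route for the block instead, because the next hop is 192.168.26.105 itself: the Pod lives on this node. The blackhole entry only drops packets for addresses in the block that have no more specific route; Pods that actually run on the node are reached through their own, more specific routes, as we will see shortly.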

    root@all (6)[f:5]
    192.168.26.101 | CHANGED | rc=0 >>
    10.244.169.64/26 via 192.168.26.105 dev tunl0 proto bird onlink
    192.168.26.102 | CHANGED | rc=0 >>
    10.244.169.64/26 via 192.168.26.105 dev tunl0 proto bird onlink
    192.168.26.105 | CHANGED | rc=0 >>
    blackhole 10.244.169.64/26 proto bird
    192.168.26.100 | CHANGED | rc=0 >>
    10.244.169.64/26 via 192.168.26.105 dev tunl0 proto bird onlink
    192.168.26.106 | CHANGED | rc=0 >>
    10.244.169.64/26 via 192.168.26.105 dev tunl0 proto bird onlink
    192.168.26.103 | CHANGED | rc=0 >>
    10.244.169.64/26 via 192.168.26.105 dev tunl0 proto bird onlink
    root@all (6)[f:5]

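So there are two cases. When the NodePort is accessed through the IP of a node that does not host the Pod, the packet is forwarded to the hosting node according to the routing table. When it is accessed through the IP of the node that hosts the Pod, no next-hop lookup towards another node is needed.

After arriving at the target node 192.168.26.105, the routing table is still consulted. As the output below shows, the route entry 10.244.169.89 dev calia011d753862 scope link hands requests for the Pod IP to the virtual interface calia011d753862. This interface is one end of a veth pair, so the packet goes straight to the virtual interface inside the corresponding Pod, which is the Pod we want to reach.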

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$ansible-console -i host.yaml --limit 192.168.26.105
    Welcome to the ansible console.
    Type help or ? to list commands.

    root@all (1)[f:5]
    192.168.26.105 | CHANGED | rc=0 >>
    10.244.169.89 dev calia011d753862 scope link
    root@all (1)[f:5]
    192.168.26.105 | CHANGED | rc=0 >>
    408fdb941f59 cf9a50a36310 "/usr/bin/docker-ent…" About an hour ago Up About an hour k8s_minio_minio-b6d98746c-m7rzc_velero_1e9b20fc-1a68-4c2a-bb8f-80072e3c5dab_6
    ea6fa7f2174f registry.aliyuncs.com/google_containers/pause:3.8 "/pause" About an hour ago Up About an hour k8s_POD_minio-b6d98746c-m7rzc_velero_1e9b20fc-1a68-4c2a-bb8f-80072e3c5dab_6
    root@all (1)[f:5]
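Node 192.168.26.105 therefore holds both a blackhole route for the whole block and a host route for the Pod. Which one wins is decided by longest-prefix matching, which the following sketch imitates. It is a simplified model, not the kernel's lookup: the /32 written for the Pod route is my notation (ip route prints host routes without a prefix length), and the lookup helper is made up for illustration.

```python
import ipaddress

# Two routes seen on node 192.168.26.105 in the outputs of this post.
ROUTES = [
    ("10.244.169.64/26", "blackhole"),
    ("10.244.169.89/32", "dev calia011d753862"),
]


def lookup(dst, routes=ROUTES):
    """Return the action of the most specific route containing dst, or None."""
    addr = ipaddress.ip_address(dst)
    matches = [
        (ipaddress.ip_network(net), action)
        for net, action in routes
        if addr in ipaddress.ip_network(net)
    ]
    if not matches:
        return None
    # Longest prefix wins: /32 beats /26.
    return max(matches, key=lambda m: m[0].prefixlen)[1]


print(lookup("10.244.169.89"))   # the minio Pod: delivered to its cali interface
print(lookup("10.244.169.100"))  # unused address in the block: blackholed
```

Under this model only addresses in the block that have no Pod behind them fall through to the blackhole entry.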

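We can log in to the worker node directly and check how the container's interface maps to an interface on the node: the index 7 printed inside the container matches interface 7, calia011d753862, on the node.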

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$ssh root@192.168.26.105
    Last login: Sat Apr 8 10:51:08 2023 from 192.168.26.100
    ┌──[root@vms105.liruilongs.github.io]-[~]
    └─$docker exec -it 408fdb941f59 bash
    [root@minio-b6d98746c-m7rzc /]
    7
    [root@minio-b6d98746c-m7rzc /] exit
    ┌──[root@vms105.liruilongs.github.io]-[~]
    └─$ip a | grep "7:"
    7: calia011d753862@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP

At this point the packet has travelled from NodeIP:NodePort to PodIP:PodPort, and the request has reached the Pod.

Mind map of the request path

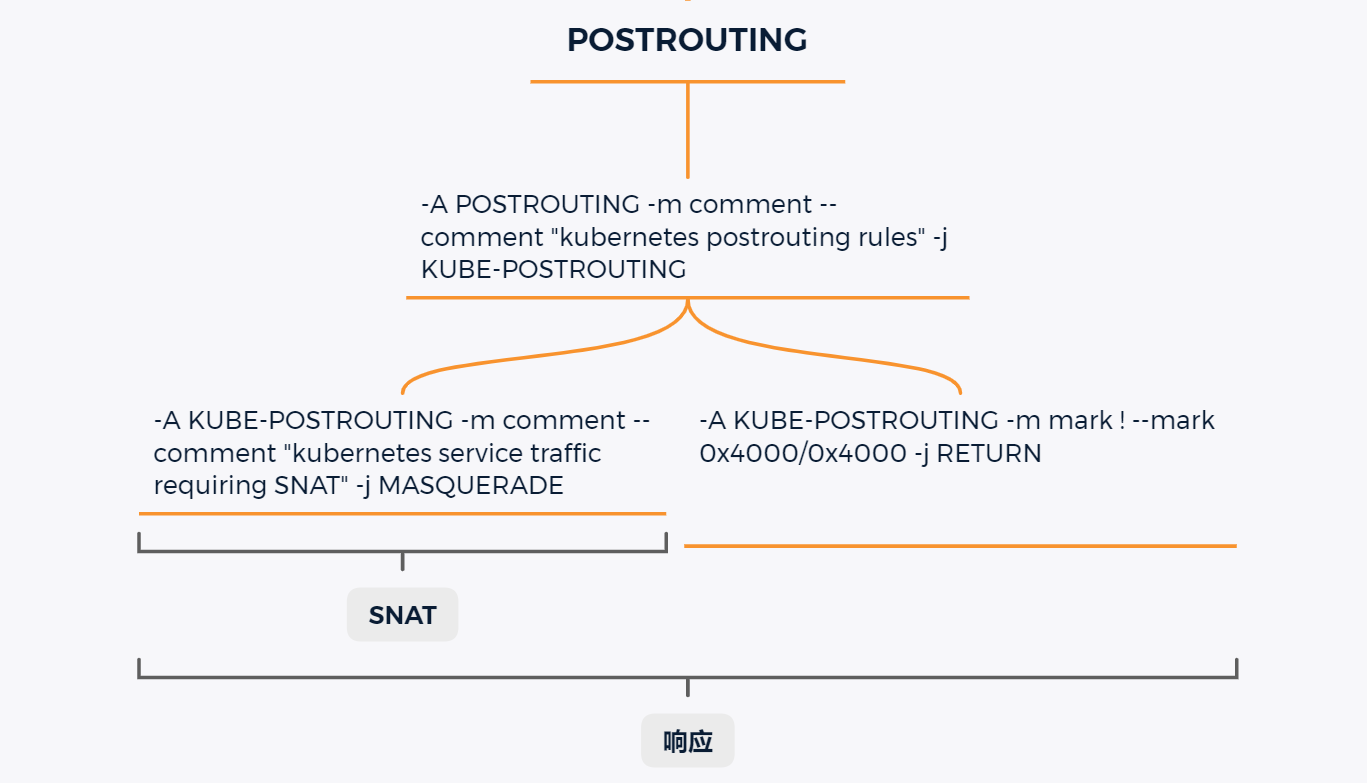

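Because the request was DNATed, the reply needs the corresponding source translation (SNAT) so that it can make its way back to the client.

Why must DNAT be paired with SNAT?

A client accesses a service. Say the source and destination addresses are (C, VIP); the client then expects a reply whose source address is VIP, that is, a reply with the address pair (VIP, C).

When the packet passes through the gateway (netfilter in the Linux kernel, which covers iptables and the IPVS director) and is DNATed once, its address pair becomes (C, S).

When the packet reaches the server S, the server sees the source address C and sends the response straight to C, so the response carries the address pair (S, C).

That does not match the pair (VIP, C) the client expects, and the client simply drops the packet on arrival.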
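The address pairs in this argument can be played through with a toy model. The sketch below treats a packet as a (source, destination) tuple; all function names are hypothetical and it is not how netfilter is implemented, it only mirrors the reasoning above.

```python
def dnat(packet, vip, backend):
    """Gateway rewrites the destination of a request aimed at the VIP."""
    src, dst = packet
    return (src, backend) if dst == vip else packet


def server_reply(packet):
    """A server answers by swapping source and destination."""
    src, dst = packet
    return (dst, src)


def reverse_dnat(packet, vip, backend):
    """What the gateway does to a reply that passes back through it."""
    src, dst = packet
    return (vip, dst) if src == backend else packet


request = ("C", "VIP")
at_server = dnat(request, "VIP", "S")   # ("C", "S")
reply = server_reply(at_server)         # ("S", "C")

expected = ("VIP", "C")
print(reply == expected)                            # reply sent directly to C
print(reverse_dnat(reply, "VIP", "S") == expected)  # reply routed via the gateway
```

The second case only works if the reply actually passes through the gateway again, which is what the masquerading described next is meant to guarantee.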

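Back to the user-defined iptables chains of kube-proxy. We skipped a few rules earlier, all of which jump to KUBE-MARK-MASQ. This is where SNAT comes in: packets marked there are masqueraded when they leave the node, so the Pod's reply comes back through the same node, which can then restore the addresses the client expects.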

    -A KUBE-EXT-K6NOPV6C6M2DHO7B -m comment --comment "masquerade traffic for velero/minio:console external destinations" -j KUBE-MARK-MASQ
    -A KUBE-SEP-OLNIRCQFCXAN5USW -s 10.244.169.89/32 -m comment --comment "velero/minio:console" -j KUBE-MARK-MASQ
    -A KUBE-SVC-K6NOPV6C6M2DHO7B ! -s 10.244.0.0/16 -d 10.98.180.238/32 -p tcp -m comment --comment "velero/minio:console cluster IP" -m tcp --dport 9099 -j KUBE-MARK-MASQ

The KUBE-MARK-MASQ chain essentially uses the iptables MARK target. Every packet sent through the KUBE-MARK-MASQ chain gets the Kubernetes-specific mark (0x4000/0x4000), as the rule below shows.

    root@all (6)[f:5]
    192.168.26.100 | CHANGED | rc=0 >>
    -A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000
    192.168.26.102 | CHANGED | rc=0 >>
    -A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000
    192.168.26.106 | CHANGED | rc=0 >>
    -A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000
    192.168.26.101 | CHANGED | rc=0 >>
    -A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000
    192.168.26.105 | CHANGED | rc=0 >>
    -A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000
    192.168.26.103 | CHANGED | rc=0 >>
    -A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000
    root@all (6)[f:5]

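SNAT generally happens in the built-in POSTROUTING chain. The POSTROUTING chain on each node therefore has a rule that jumps to the kube-proxy chain KUBE-POSTROUTING. That chain applies SNAT, namely MASQUERADE (the source IP of the packet is replaced with the node IP), to packets on the node that carry the mark (0x4000/0x4000) as they leave the node. The rules below show this.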

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$ansible-console -i host.yaml
    Welcome to the ansible console.
    Type help or ? to list commands.

    root@all (6)[f:5]
    192.168.26.105 | CHANGED | rc=0 >>
    :KUBE-POSTROUTING - [0:0]
    -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
    -A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN
    -A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0
    -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE
    192.168.26.101 | CHANGED | rc=0 >>
    :KUBE-POSTROUTING - [0:0]
    -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
    -A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN
    -A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0
    -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE
    192.168.26.102 | CHANGED | rc=0 >>
    :KUBE-POSTROUTING - [0:0]
    -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
    -A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN
    -A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0
    -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE
    192.168.26.106 | CHANGED | rc=0 >>
    :KUBE-POSTROUTING - [0:0]
    -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
    -A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN
    -A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0
    -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE
    192.168.26.100 | CHANGED | rc=0 >>
    :KUBE-POSTROUTING - [0:0]
    -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
    -A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN
    -A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0
    -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully
    192.168.26.103 | CHANGED | rc=0 >>
    :KUBE-POSTROUTING - [0:0]
    -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
    -A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN
    -A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0
    -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE
    root@all (6)[f:5]

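The user-defined chain KUBE-POSTROUTING is referenced from the POSTROUTING chain. Packets without the mark hit RETURN immediately; marked packets have the mark cleared and are then MASQUERADEd.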
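The mark handling comes down to a little bit arithmetic, sketched below. It assumes the semantics of --set-xmark value/mask as described in the iptables-extensions manual (zero the bits given by mask, then XOR value into the mark); the function names are made up, and the model ignores everything about a packet except its mark.

```python
MASQ_BIT = 0x4000


def set_xmark(mark, value, mask):
    """MARK --set-xmark value/mask: clear the mask bits, then XOR in value."""
    return (mark & ~mask) ^ value


def kube_mark_masq(mark):
    """-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000"""
    return set_xmark(mark, 0x4000, 0x4000)


def kube_postrouting(mark):
    """Return (new_mark, action) following the three KUBE-POSTROUTING rules."""
    if (mark & MASQ_BIT) != MASQ_BIT:    # -m mark ! --mark 0x4000/0x4000 -j RETURN
        return mark, "RETURN"
    mark = set_xmark(mark, 0x4000, 0x0)  # bit is known to be set, so XOR clears it
    return mark, "MASQUERADE"


marked = kube_mark_masq(0x0)
print(hex(marked))               # mark after passing KUBE-MARK-MASQ
print(kube_postrouting(marked))  # marked packet: bit cleared, then MASQUERADE
print(kube_postrouting(0x0))     # unmarked packet: RETURN
```

Using one bit of the mark keeps the decision (made early, in the Service chains) separate from the action (taken late, in POSTROUTING), and leaves the other mark bits untouched.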

Mind map of the return path

That is the whole journey for a NodePort SVC, from request to reply.

ClusterIP

ClusterIP works much like NodePort.

Let's take a brief look. Pick an SVC of type ClusterIP

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$kubectl get svc -A | grep kube-dns
    kube-system   kube-dns   ClusterIP   10.96.0.10   <none>   53/UDP,53/TCP,9153/TCP   71d

View the details

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$kubectl describe svc kube-dns -n kube-system
    Name:              kube-dns
    Namespace:         kube-system
    Labels:            k8s-app=kube-dns
                       kubernetes.io/cluster-service=true
                       kubernetes.io/name=CoreDNS
    Annotations:       prometheus.io/port: 9153
                       prometheus.io/scrape: true
    Selector:          k8s-app=kube-dns
    Type:              ClusterIP
    IP Family Policy:  SingleStack
    IP Families:       IPv4
    IP:                10.96.0.10
    IPs:               10.96.0.10
    Port:              dns  53/UDP
    TargetPort:        53/UDP
    Endpoints:         10.244.239.129:53,10.244.239.132:53
    Port:              dns-tcp  53/TCP
    TargetPort:        53/TCP
    Endpoints:         10.244.239.129:53,10.244.239.132:53
    Port:              metrics  9153/TCP
    TargetPort:        9153/TCP
    Endpoints:         10.244.239.129:9153,10.244.239.132:9153
    Session Affinity:  None
    Events:            <none>

There are two endpoints, which matches the number of replicas of the Deployment.

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$kubectl get pod -A | grep dns
    kube-system   coredns-c676cc86f-kpvcj   1/1   Running   18 (16h ago)   71d
    kube-system   coredns-c676cc86f-xqj8d   1/1   Running   17 (16h ago)   71d
    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$

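When the SVC type is ClusterIP, the Service can only be accessed from inside the cluster, through the cluster IP plus the Service port. So here we look up the iptables rules generated by kube-proxy by destination IP and port.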

    ┌──[root@vms100.liruilongs.github.io]-[~/ansible]
    └─$ansible-console -i host.yaml
    Welcome to the ansible console.
    Type help or ? to list commands.

    root@all (6)[f:5]
    192.168.26.105 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP
    -A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
    192.168.26.102 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP
    -A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
    192.168.26.106 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP
    -A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
    192.168.26.101 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP
    -A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
    192.168.26.100 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP
    -A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
    192.168.26.103 | CHANGED | rc=0 >>
    -A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP
    -A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
    root@all (6)[f:5]

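The output confirms that the first chain involved is KUBE-SERVICES. On every node it matches packets whose destination IP is 10.96.0.10/32 and whose destination port is 9153, and the action is a jump to the user-defined chain KUBE-SVC-JD5MR3NA4I4DYORP.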
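The two rules in this output can be read as a small decision procedure: KUBE-SERVICES dispatches on destination IP, protocol and port, and the first KUBE-SVC rule marks traffic whose source is outside 10.244.0.0/16 for masquerading. The sketch below models just that; the table layout and function names are invented for illustration, and the values are copied from the output above.

```python
import ipaddress

# (destination CIDR, protocol, destination port, target chain)
SERVICES_RULES = [
    ("10.96.0.10/32", "tcp", 9153, "KUBE-SVC-JD5MR3NA4I4DYORP"),
]

# Source range excluded by "! -s 10.244.0.0/16" in the KUBE-SVC rule.
POD_CIDR = ipaddress.ip_network("10.244.0.0/16")


def kube_services(dst_ip, proto, dport, rules=SERVICES_RULES):
    """Return the KUBE-SVC chain a packet is dispatched to, or None."""
    addr = ipaddress.ip_address(dst_ip)
    for cidr, rule_proto, rule_port, target in rules:
        if addr in ipaddress.ip_network(cidr) and proto == rule_proto and dport == rule_port:
            return target
    return None


def needs_masq(src_ip):
    """True when the '! -s 10.244.0.0/16 ... -j KUBE-MARK-MASQ' rule would match."""
    return ipaddress.ip_address(src_ip) not in POD_CIDR


print(kube_services("10.96.0.10", "tcp", 9153))
print(needs_masq("192.168.26.100"))  # request coming from a node address
print(needs_masq("10.244.38.178"))   # request coming from a Pod address
```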

To find out which built-in chain jumps to this chain, we query as follows: the built-in PREROUTING chain jumps to the user-defined chain KUBE-SERVICES.

root@all (6)[f:5]
192.168.26.101 | CHANGED | rc=0 >>
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
192.168.26.102 | CHANGED | rc=0 >>
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
192.168.26.106 | CHANGED | rc=0 >>
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
192.168.26.100 | CHANGED | rc=0 >>
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
192.168.26.105 | CHANGED | rc=0 >>
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
192.168.26.103 | CHANGED | rc=0 >>
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
root@all (6)[f:5]

Continuing the rule analysis: we now know PREROUTING >> KUBE-SERVICES >> KUBE-SVC-JD5MR3NA4I4DYORP, so next look at the rules in the custom chain KUBE-SVC-JD5MR3NA4I4DYORP.

root@all (6)[f:5]
192.168.26.101 | CHANGED | rc=0 >>
-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.129:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-DYDOGONVKJMXNXZO
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.132:9153" -j KUBE-SEP-PURVZ5VLWID76HVQ
192.168.26.105 | CHANGED | rc=0 >>
-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.129:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-DYDOGONVKJMXNXZO
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.132:9153" -j KUBE-SEP-PURVZ5VLWID76HVQ
192.168.26.102 | CHANGED | rc=0 >>
-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.129:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-DYDOGONVKJMXNXZO
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.132:9153" -j KUBE-SEP-PURVZ5VLWID76HVQ
192.168.26.106 | CHANGED | rc=0 >>
-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.129:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-DYDOGONVKJMXNXZO
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.132:9153" -j KUBE-SEP-PURVZ5VLWID76HVQ
192.168.26.100 | CHANGED | rc=0 >>
-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.129:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-DYDOGONVKJMXNXZO
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.132:9153" -j KUBE-SEP-PURVZ5VLWID76HVQ
192.168.26.103 | CHANGED | rc=0 >>
-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.129:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-DYDOGONVKJMXNXZO
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.239.132:9153" -j KUBE-SEP-PURVZ5VLWID76HVQ
root@all (6)[f:5]

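As with NodePort, iptables' own random load balancing (the statistic match in random mode) is used here to jump to one of two custom chains whose names start with KUBE-SEP-, one for each endpoint of the SVC.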
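Why 0.5 for the first rule and no probability at all for the second? Rules are evaluated in order, so each rule only sees the traffic that earlier rules let through. The sketch below assumes the scheme that, as far as I understand, kube-proxy uses for n endpoints: rule i gets probability 1/(n-i) and the last rule is unconditional. That assumption is consistent with the two-endpoint output above; the function names are mine.

```python
from fractions import Fraction


def rule_probabilities(n: int) -> list:
    """Per-rule --probability for n endpoints (assumed scheme); the last entry is 1."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(probs: list) -> list:
    """Share of all traffic each rule actually receives when evaluated in order."""
    remaining = Fraction(1)  # fraction of traffic not yet claimed by an earlier rule
    shares = []
    for p in probs:
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


for n in (2, 3):
    probs = rule_probabilities(n)
    print(n, [str(p) for p in probs], [str(s) for s in effective_shares(probs)])
```

Under that assumption every endpoint ends up with the same 1/n share, even though the per-rule probabilities differ.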

root@all (6)[f:5]
192.168.26.100 | CHANGED | rc=0 >>
-A KUBE-SEP-DYDOGONVKJMXNXZO -s 10.244.239.129/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ
-A KUBE-SEP-DYDOGONVKJMXNXZO -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.244.239.129:9153
192.168.26.102 | CHANGED | rc=0 >>
-A KUBE-SEP-DYDOGONVKJMXNXZO -s 10.244.239.129/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ
-A KUBE-SEP-DYDOGONVKJMXNXZO -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0
192.168.26.101 | CHANGED | rc=0 >>
-A KUBE-SEP-DYDOGONVKJMXNXZO -s 10.244.239.129/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ
-A KUBE-SEP-DYDOGONVKJMXNXZO -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0
192.168.26.105 | CHANGED | rc=0 >>
-A KUBE-SEP-DYDOGONVKJMXNXZO -s 10.244.239.129/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ
-A KUBE-SEP-DYDOGONVKJMXNXZO -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0
192.168.26.106 | CHANGED | rc=0 >>
-A KUBE-SEP-DYDOGONVKJMXNXZO -s 10.244.239.129/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ
-A KUBE-SEP-DYDOGONVKJMXNXZO -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0
192.168.26.103 | CHANGED | rc=0 >>
-A KUBE-SEP-DYDOGONVKJMXNXZO -s 10.244.239.129/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ
-A KUBE-SEP-DYDOGONVKJMXNXZO -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0
root@all (6)[f:5]

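OK, this part is also the same as for NodePort, so it is not repeated here. Only 192.168.26.100 (iptables v1.8.7) prints the real DNAT target 10.244.239.129:9153; the v1.4.21 nodes show a garbled --to-destination because of the display issue mentioned at the start. The route to that Pod IP on 192.168.26.100: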
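If you collect rules from nodes with mixed iptables versions, it helps to tell a real DNAT target from the garbled v1.4.21 display. A small sketch, using two rules copied from the output above; the helper name dnat_destination is made up, and treating an empty host, 0.0.0.0 or port 0 as "garbled" is my own heuristic.

```python
import re

DNAT_RE = re.compile(r"-j DNAT --to-destination (\S+)")

# As printed by iptables v1.8.7 on 192.168.26.100.
GOOD = ('-A KUBE-SEP-DYDOGONVKJMXNXZO -p tcp -m comment --comment '
        '"kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.244.239.129:9153')
# As printed by iptables v1.4.21 on the other nodes.
GARBLED = ('-A KUBE-SEP-DYDOGONVKJMXNXZO -p tcp -m comment --comment '
           '"kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination :0 --persistent '
           '--to-destination :0 --persistent --to-destination 0.0.0.0:0')


def dnat_destination(rule: str):
    """Return 'ip:port' from a DNAT rule, or None if absent or displayed garbled."""
    m = DNAT_RE.search(rule)
    if not m:
        return None
    dest = m.group(1)
    host, _, port = dest.rpartition(":")
    if not host or host == "0.0.0.0" or port == "0":
        return None  # heuristic: looks like the v1.4.21 display problem
    return dest


print(dnat_destination(GOOD))
print(dnat_destination(GARBLED))
```

When the helper returns None for a KUBE-SEP rule, the endpoint can still be read from the comment on the matching KUBE-SVC rule, which carries the "-> ip:port" text.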

┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ansible-console -i host.yaml --limit 192.168.26.100
Welcome to the ansible console.
Type help or ? to list commands.

root@all (1)[f:5]
192.168.26.100 | CHANGED | rc=0 >>
10.244.239.129 dev calic2f7856928d scope link
root@all (1)[f:5]

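From the analysis above, the request path for a ClusterIP SVC is PREROUTING >> KUBE-SERVICES >> KUBE-SVC-JD5MR3NA4I4DYORP >> KUBE-SEP-XXX >> DNAT, after which a route lookup gives the next hop towards the node that hosts the target Pod.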
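The whole ClusterIP path can be replayed with a toy model. This is only a sketch of how the nat rules are traversed, not how the kernel implements it: the chains and addresses come from the output above, the KUBE-MARK-MASQ rules are left out because they only set a mark and return, and the DNAT target of the second KUBE-SEP chain is inferred from the "-> 10.244.239.132:9153" comment since its rules are not shown.

```python
import random

# chain -> [(match, target)]; "prob" stands for `-m statistic --mode random --probability`.
CHAINS = {
    "PREROUTING": [({}, "KUBE-SERVICES")],
    "KUBE-SERVICES": [
        ({"dst": "10.96.0.10", "dport": 9153}, "KUBE-SVC-JD5MR3NA4I4DYORP"),
    ],
    "KUBE-SVC-JD5MR3NA4I4DYORP": [
        ({"prob": 0.5}, "KUBE-SEP-DYDOGONVKJMXNXZO"),
        ({}, "KUBE-SEP-PURVZ5VLWID76HVQ"),
    ],
    "KUBE-SEP-DYDOGONVKJMXNXZO": [({}, "DNAT:10.244.239.129:9153")],
    "KUBE-SEP-PURVZ5VLWID76HVQ": [({}, "DNAT:10.244.239.132:9153")],  # inferred
}


def first_match(chain, packet, rng):
    """Return the target of the first rule in `chain` that matches, else None."""
    for match, target in CHAINS[chain]:
        fields_ok = all(packet.get(k) == v for k, v in match.items() if k != "prob")
        prob_ok = "prob" not in match or rng() < match["prob"]
        if fields_ok and prob_ok:
            return target
    return None


def walk(packet, rng=random.random):
    """Follow jumps from PREROUTING; return (chains visited, DNAT destination or None)."""
    path, chain = [], "PREROUTING"
    while True:
        path.append(chain)
        target = first_match(chain, packet, rng)
        if target is None:
            return path, None
        if target.startswith("DNAT:"):
            return path, target[len("DNAT:"):]
        chain = target


path, backend = walk({"dst": "10.96.0.10", "dport": 9153})
print(" >> ".join(path), "=>", backend)
```

Passing a fixed rng (for example lambda: 0.0) makes the endpoint choice deterministic, which is handy when checking one branch at a time.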

LoadBalancer

Finally, let's look at how a request to a LoadBalancer SVC is handled.

┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$kubectl get svc | grep LoadBalancer
release-name-grafana   LoadBalancer   10.96.85.130   192.168.26.223   80:30174/TCP   55d

Pick a LoadBalancer SVC that already exists in the cluster and look at its details. The LB IP of this SVC is 192.168.26.223.

┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$kubectl describe svc release-name-grafana
Name:                     release-name-grafana
Namespace:                default
Labels:                   app.kubernetes.io/instance=release-name
                          app.kubernetes.io/managed-by=Helm
                          app.kubernetes.io/name=grafana
                          app.kubernetes.io/version=8.3.3
                          helm.sh/chart=grafana-6.20.5
Annotations:              <none>
Selector:                 app.kubernetes.io/instance=release-name,app.kubernetes.io/name=grafana
Type:                     LoadBalancer
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.96.85.130
IPs:                      10.96.85.130
LoadBalancer Ingress:     192.168.26.223
Port:                     http-web  80/TCP
TargetPort:               3000/TCP
NodePort:                 http-web  30174/TCP
Endpoints:                10.244.31.123:3000
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$

Search the rules directly by the LB IP.

┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ansible-console -i host.yaml
Welcome to the ansible console.
Type help or ? to list commands.

root@all (6)[f:5]
192.168.26.101 | CHANGED | rc=0 >>
-A KUBE-SERVICES -d 192.168.26.223/32 -p tcp -m comment --comment "default/release-name-grafana:http-web loadbalancer IP" -m tcp --dport 80 -j KUBE-EXT-XR6NUEPTVOUAGEC3
192.168.26.100 | CHANGED | rc=0 >>
-A KUBE-SERVICES -d 192.168.26.223/32 -p tcp -m comment --comment "default/release-name-grafana:http-web loadbalancer IP" -m tcp --dport 80 -j KUBE-EXT-XR6NUEPTVOUAGEC3
192.168.26.102 | CHANGED | rc=0 >>
-A KUBE-SERVICES -d 192.168.26.223/32 -p tcp -m comment --comment "default/release-name-grafana:http-web loadbalancer IP" -m tcp --dport 80 -j KUBE-EXT-XR6NUEPTVOUAGEC3
192.168.26.105 | CHANGED | rc=0 >>
-A KUBE-SERVICES -d 192.168.26.223/32 -p tcp -m comment --comment "default/release-name-grafana:http-web loadbalancer IP" -m tcp --dport 80 -j KUBE-EXT-XR6NUEPTVOUAGEC3
192.168.26.106 | CHANGED | rc=0 >>
-A KUBE-SERVICES -d 192.168.26.223/32 -p tcp -m comment --comment "default/release-name-grafana:http-web loadbalancer IP" -m tcp --dport 80 -j KUBE-EXT-XR6NUEPTVOUAGEC3
192.168.26.103 | CHANGED | rc=0 >>
-A KUBE-SERVICES -d 192.168.26.223/32 -p tcp -m comment --comment "default/release-name-grafana:http-web loadbalancer IP" -m tcp --dport 80 -j KUBE-EXT-XR6NUEPTVOUAGEC3
root@all (6)[f:5]

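The output above shows a jump from KUBE-SERVICES to KUBE-EXT-XR6NUEPTVOUAGEC3. As with ClusterIP, the first custom chain is KUBE-SERVICES, but for ClusterIP KUBE-SERVICES jumps straight to a chain starting with KUBE-SVC-XXX, whereas here it jumps to a chain starting with KUBE-EXT-XXX.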

root@all (6)[f:5]
192.168.26.101 | CHANGED | rc=0 >>
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -m comment --comment "masquerade traffic for default/release-name-grafana:http-web external destinations" -j KUBE-MARK-MASQ
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -j KUBE-SVC-XR6NUEPTVOUAGEC3
192.168.26.102 | CHANGED | rc=0 >>
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -m comment --comment "masquerade traffic for default/release-name-grafana:http-web external destinations" -j KUBE-MARK-MASQ
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -j KUBE-SVC-XR6NUEPTVOUAGEC3
192.168.26.100 | CHANGED | rc=0 >>
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -m comment --comment "masquerade traffic for default/release-name-grafana:http-web external destinations" -j KUBE-MARK-MASQ
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -j KUBE-SVC-XR6NUEPTVOUAGEC3
192.168.26.105 | CHANGED | rc=0 >>
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -m comment --comment "masquerade traffic for default/release-name-grafana:http-web external destinations" -j KUBE-MARK-MASQ
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -j KUBE-SVC-XR6NUEPTVOUAGEC3
192.168.26.106 | CHANGED | rc=0 >>
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -m comment --comment "masquerade traffic for default/release-name-grafana:http-web external destinations" -j KUBE-MARK-MASQ
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -j KUBE-SVC-XR6NUEPTVOUAGEC3
192.168.26.103 | CHANGED | rc=0 >>
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -m comment --comment "masquerade traffic for default/release-name-grafana:http-web external destinations" -j KUBE-MARK-MASQ
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -j KUBE-SVC-XR6NUEPTVOUAGEC3

KUBE-EXT-XR6NUEPTVOUAGEC3 then jumps to KUBE-SVC-XR6NUEPTVOUAGEC3. The extra KUBE-EXT-XXXX chain is the same one that a NodePort SVC passes through.

root@all (6)[f:5]
192.168.26.102 | CHANGED | rc=0 >>
-A KUBE-SVC-XR6NUEPTVOUAGEC3 ! -s 10.244.0.0/16 -d 10.96.85.130/32 -p tcp -m comment --comment "default/release-name-grafana:http-web cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ
-A KUBE-SVC-XR6NUEPTVOUAGEC3 -m comment --comment "default/release-name-grafana:http-web -> 10.244.31.123:3000" -j KUBE-SEP-RLRDAS77BUJVWRRK
192.168.26.101 | CHANGED | rc=0 >>
-A KUBE-SVC-XR6NUEPTVOUAGEC3 ! -s 10.244.0.0/16 -d 10.96.85.130/32 -p tcp -m comment --comment "default/release-name-grafana:http-web cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ
-A KUBE-SVC-XR6NUEPTVOUAGEC3 -m comment --comment "default/release-name-grafana:http-web -> 10.244.31.123:3000" -j KUBE-SEP-RLRDAS77BUJVWRRK
192.168.26.106 | CHANGED | rc=0 >>
-A KUBE-SVC-XR6NUEPTVOUAGEC3 ! -s 10.244.0.0/16 -d 10.96.85.130/32 -p tcp -m comment --comment "default/release-name-grafana:http-web cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ
-A KUBE-SVC-XR6NUEPTVOUAGEC3 -m comment --comment "default/release-name-grafana:http-web -> 10.244.31.123:3000" -j KUBE-SEP-RLRDAS77BUJVWRRK
192.168.26.105 | CHANGED | rc=0 >>
-A KUBE-SVC-XR6NUEPTVOUAGEC3 ! -s 10.244.0.0/16 -d 10.96.85.130/32 -p tcp -m comment --comment "default/release-name-grafana:http-web cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ
-A KUBE-SVC-XR6NUEPTVOUAGEC3 -m comment --comment "default/release-name-grafana:http-web -> 10.244.31.123:3000" -j KUBE-SEP-RLRDAS77BUJVWRRK
192.168.26.100 | CHANGED | rc=0 >>
-A KUBE-SVC-XR6NUEPTVOUAGEC3 ! -s 10.244.0.0/16 -d 10.96.85.130/32 -p tcp -m comment --comment "default/release-name-grafana:http-web cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ
-A KUBE-SVC-XR6NUEPTVOUAGEC3 -m comment --comment "default/release-name-grafana:http-web -> 10.244.31.123:3000" -j KUBE-SEP-RLRDAS77BUJVWRRK
192.168.26.103 | CHANGED | rc=0 >>
-A KUBE-SVC-XR6NUEPTVOUAGEC3 ! -s 10.244.0.0/16 -d 10.96.85.130/32 -p tcp -m comment --comment "default/release-name-grafana:http-web cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ
-A KUBE-SVC-XR6NUEPTVOUAGEC3 -m comment --comment "default/release-name-grafana:http-web -> 10.244.31.123:3000" -j KUBE-SEP-RLRDAS77BUJVWRRK

Next come the chains starting with KUBE-SEP. This part is the same for all three SVC types, so it is not described again.
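Instead of following the jumps by eye, the LoadBalancer path can be rebuilt from the rule text itself. The sketch below uses the grafana rules from this section in abbreviated form (the -m comment matches are omitted, and the DNAT rule is the one printed by the v1.8.7 node); build_edges and trace are hypothetical helper names.

```python
import shlex

# Rules from this section, abbreviated: the `-m comment` matches are omitted.
RULES = """
-A KUBE-SERVICES -d 192.168.26.223/32 -p tcp -m tcp --dport 80 -j KUBE-EXT-XR6NUEPTVOUAGEC3
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -j KUBE-MARK-MASQ
-A KUBE-EXT-XR6NUEPTVOUAGEC3 -j KUBE-SVC-XR6NUEPTVOUAGEC3
-A KUBE-SVC-XR6NUEPTVOUAGEC3 ! -s 10.244.0.0/16 -d 10.96.85.130/32 -p tcp -m tcp --dport 80 -j KUBE-MARK-MASQ
-A KUBE-SVC-XR6NUEPTVOUAGEC3 -j KUBE-SEP-RLRDAS77BUJVWRRK
-A KUBE-SEP-RLRDAS77BUJVWRRK -s 10.244.31.123/32 -j KUBE-MARK-MASQ
-A KUBE-SEP-RLRDAS77BUJVWRRK -p tcp -m tcp -j DNAT --to-destination 10.244.31.123:3000
"""


def build_edges(rules: str) -> dict:
    """Map each chain to the list of targets its rules jump to, in rule order."""
    edges = {}
    for line in rules.strip().splitlines():
        tokens = shlex.split(line)
        chain = tokens[tokens.index("-A") + 1]
        target = tokens[tokens.index("-j") + 1]
        edges.setdefault(chain, []).append(target)
    return edges


def trace(edges: dict, start: str = "KUBE-SERVICES") -> list:
    """Follow jumps from `start`, ignoring KUBE-MARK-MASQ (it marks and returns)."""
    path, chain = [start], start
    while chain in edges:
        onward = [t for t in edges[chain] if t != "KUBE-MARK-MASQ"]
        if not onward:
            break
        chain = onward[0]  # single endpoint here, so there is only one way on
        path.append(chain)
    return path


print(" >> ".join(trace(build_edges(RULES))))
```

With more than one endpoint the KUBE-SVC chain would have several onward targets, and a real trace would have to branch instead of taking the first one.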

root@all (6)[f:5]
192.168.26.106 | CHANGED | rc=0 >>
-A KUBE-SEP-RLRDAS77BUJVWRRK -s 10.244.31.123/32 -m comment --comment "default/release-name-grafana:http-web" -j KUBE-MARK-MASQ
-A KUBE-SEP-RLRDAS77BUJVWRRK -p tcp -m comment --comment "default/release-name-grafana:http-web" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0 --persistent
192.168.26.105 | CHANGED | rc=0 >>
-A KUBE-SEP-RLRDAS77BUJVWRRK -s 10.244.31.123/32 -m comment --comment "default/release-name-grafana:http-web" -j KUBE-MARK-MASQ
-A KUBE-SEP-RLRDAS77BUJVWRRK -p tcp -m comment --comment "default/release-name-grafana:http-web" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0 --persistent
192.168.26.102 | CHANGED | rc=0 >>
-A KUBE-SEP-RLRDAS77BUJVWRRK -s 10.244.31.123/32 -m comment --comment "default/release-name-grafana:http-web" -j KUBE-MARK-MASQ
-A KUBE-SEP-RLRDAS77BUJVWRRK -p tcp -m comment --comment "default/release-name-grafana:http-web" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0 --persistent
192.168.26.101 | CHANGED | rc=0 >>
-A KUBE-SEP-RLRDAS77BUJVWRRK -s 10.244.31.123/32 -m comment --comment "default/release-name-grafana:http-web" -j KUBE-MARK-MASQ
-A KUBE-SEP-RLRDAS77BUJVWRRK -p tcp -m comment --comment "default/release-name-grafana:http-web" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0 --persistent
192.168.26.100 | CHANGED | rc=0 >>
-A KUBE-SEP-RLRDAS77BUJVWRRK -s 10.244.31.123/32 -m comment --comment "default/release-name-grafana:http-web" -j KUBE-MARK-MASQ
-A KUBE-SEP-RLRDAS77BUJVWRRK -p tcp -m comment --comment "default/release-name-grafana:http-web" -m tcp -j DNAT --to-destination 10.244.31.123:3000
192.168.26.103 | CHANGED | rc=0 >>
-A KUBE-SEP-RLRDAS77BUJVWRRK -s 10.244.31.123/32 -m comment --comment "default/release-name-grafana:http-web" -j KUBE-MARK-MASQ
-A KUBE-SEP-RLRDAS77BUJVWRRK -p tcp -m comment --comment "default/release-name-grafana:http-web" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0 --persistent
root@all (6)[f:5]
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$ip route | grep 31
10.244.31.64/26 via 192.168.26.106 dev tunl0 proto bird onlink
┌──[root@vms100.liruilongs.github.io]-[~/ansible]
└─$
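After DNAT the kernel picks the next hop with an ordinary longest-prefix route lookup. The sketch below replays that with Python's ipaddress module, using only the two routes shown on 192.168.26.100 in this post; the table is deliberately incomplete, and the lookup helper is hypothetical.

```python
import ipaddress

# (prefix, next hop, device), copied from the `ip route` output on 192.168.26.100.
ROUTES = [
    ("10.244.239.129/32", None, "calic2f7856928d"),  # scope link: the Pod is on this node
    ("10.244.31.64/26", "192.168.26.106", "tunl0"),  # Pod block hosted on another node
]


def lookup(dst: str):
    """Return (next hop, device) of the most specific matching route, or None."""
    addr = ipaddress.ip_address(dst)
    matches = []
    for prefix, via, dev in ROUTES:
        net = ipaddress.ip_network(prefix)
        if addr in net:
            matches.append((net.prefixlen, via, dev))
    if not matches:
        return None
    _, via, dev = max(matches, key=lambda m: m[0])  # longest prefix wins
    return via, dev


print(lookup("10.244.31.123"))   # grafana endpoint from the LoadBalancer example
print(lookup("10.244.239.129"))  # kube-dns endpoint from the ClusterIP example
```

So the grafana Pod at 10.244.31.123 falls inside 10.244.31.64/26 and is sent through tunl0 to 192.168.26.106, while 10.244.239.129 is delivered straight to the local cali interface.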

That wraps up the path analysis from the three SVC publishing types to the Pod. A brief summary:

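With kube-proxy in iptables mode, all three service types match packets in custom iptables chains and DNAT them to a Pod address. The routing table on the node then supplies the next hop towards the node that hosts the target Pod, and a further route lookup on that node delivers the packet to the veth pair that connects the node and the Pod. Custom chains hooked into the built-in POSTROUTING chain SNAT the packets that were marked by KUBE-MARK-MASQ, that is, they masquerade the address by rewriting the source IP.

The custom chains traversed differ slightly between the three service types: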

NodePort: PREROUTING >> KUBE-SERVICES >> KUBE-NODEPORTS >> KUBE-EXT-XXXXXX >> KUBE-SVC-XXXXXX >> KUBE-SEP-XXXXX >> DNAT

ClusterIP: PREROUTING >> KUBE-SERVICES >> KUBE-SVC-XXXXX >> KUBE-SEP-XXX >> DNAT

LoadBalancer: PREROUTING >> KUBE-SERVICES >> KUBE-EXT-XXXXXX >> KUBE-SVC-XXXXXX >> KUBE-SEP-XXXXX >> DNAT
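One detail that is easy to miss in these paths is which packets get marked for SNAT along the way. The sketch below restates the three KUBE-MARK-MASQ rules seen in this post as plain conditions. The pod CIDR comes from the "! -s 10.244.0.0/16" match; the mark value 0x4000 is an assumption (kube-proxy's default masquerade bit, which is not shown in the output here), and the function name is made up.

```python
import ipaddress

POD_CIDR = ipaddress.ip_network("10.244.0.0/16")  # from the `! -s 10.244.0.0/16` match
MASQ_MARK = 0x4000  # assumption: kube-proxy's default masquerade mark


def packet_mark(src_ip: str, endpoint_ip: str, via_ext_chain: bool = False) -> int:
    """Return the mark a packet would carry after the nat chains, 0 if unmarked."""
    mark = 0
    # KUBE-EXT-XXX: every packet entering through the external chain is marked.
    if via_ext_chain:
        mark |= MASQ_MARK
    # KUBE-SVC-XXX: traffic to the cluster IP from outside the pod CIDR is marked.
    if ipaddress.ip_address(src_ip) not in POD_CIDR:
        mark |= MASQ_MARK
    # KUBE-SEP-XXX: a Pod reaching itself through the Service (hairpin) is marked.
    if src_ip == endpoint_ip:
        mark |= MASQ_MARK
    return mark


print(hex(packet_mark("192.168.26.100", "10.244.239.129")))  # node to cluster IP
print(hex(packet_mark("10.244.31.5", "10.244.239.129")))     # pod to cluster IP
```

Packets that leave with the mark set are the ones the POSTROUTING custom chain masquerades; unmarked pod-to-pod traffic keeps its original source IP.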

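Parts of this post draw on the references below. © Copyright of the linked reference material belongs to the original authors; please let me know if anything infringes :)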

https://github.com/kubernetes/kubernetes/issues/114537

《 Kubernetes 网络权威指南:基础、原理与实践》

© 2018-2023 liruilonger@gmail.com, All rights reserved. Attribution-NonCommercial-ShareAlike (CC BY-NC-SA 4.0)