The meaning of living is learning to live truthfully; the meaning of life is searching for the meaning of living. (山河已无恙)

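Preface

Well, I am preparing for the CKA certificate. I signed up for a course and spent quite a bit of money on it, so I have to pass.

This post is a set of notes I organized after attending the classes; it is suited to review.

The post covers creating and using secrets and configmaps.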

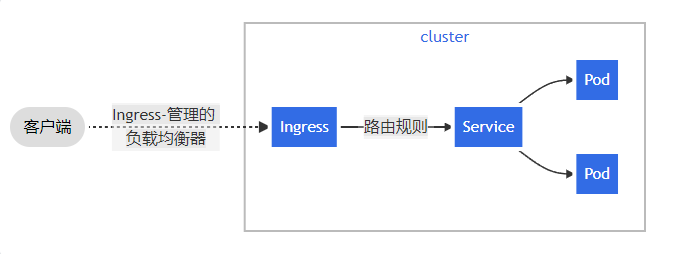

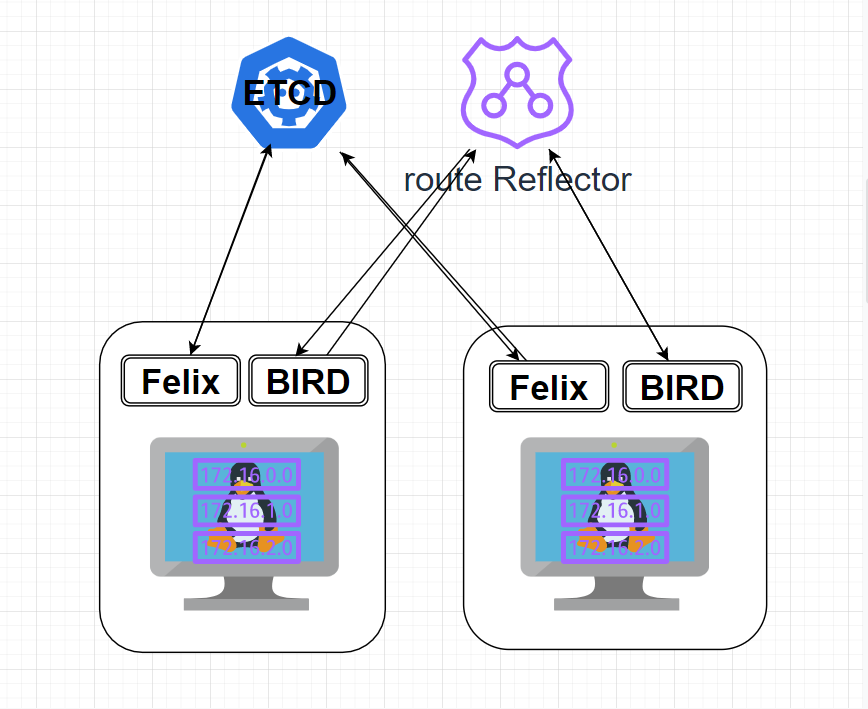

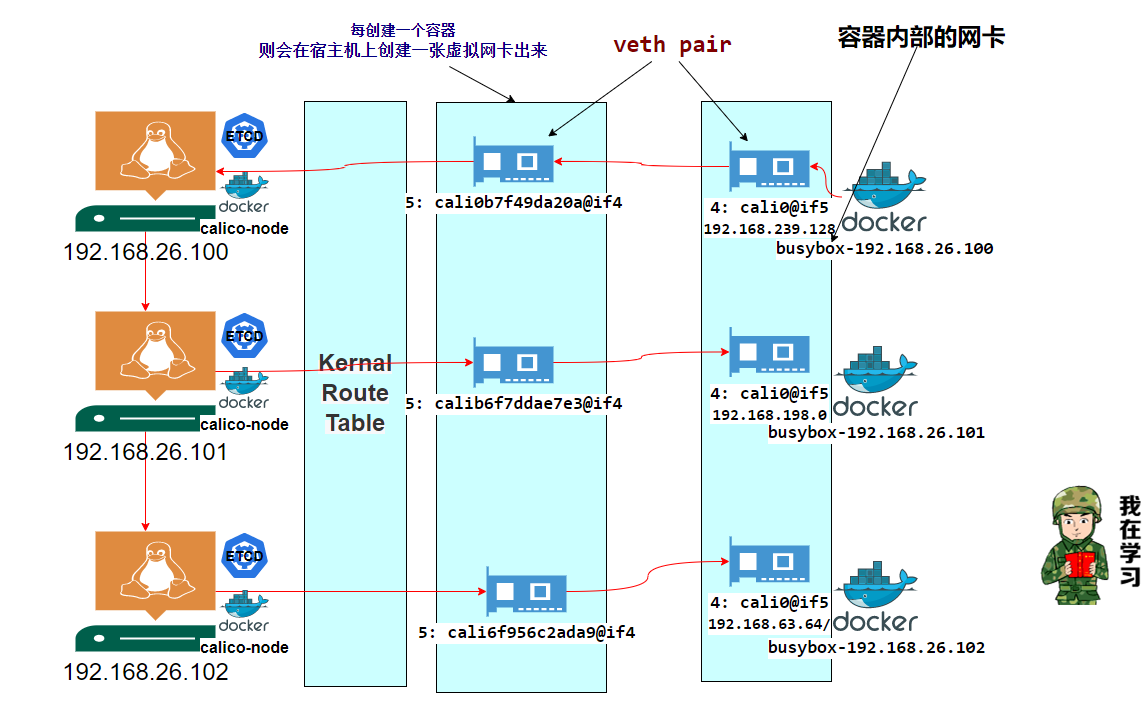

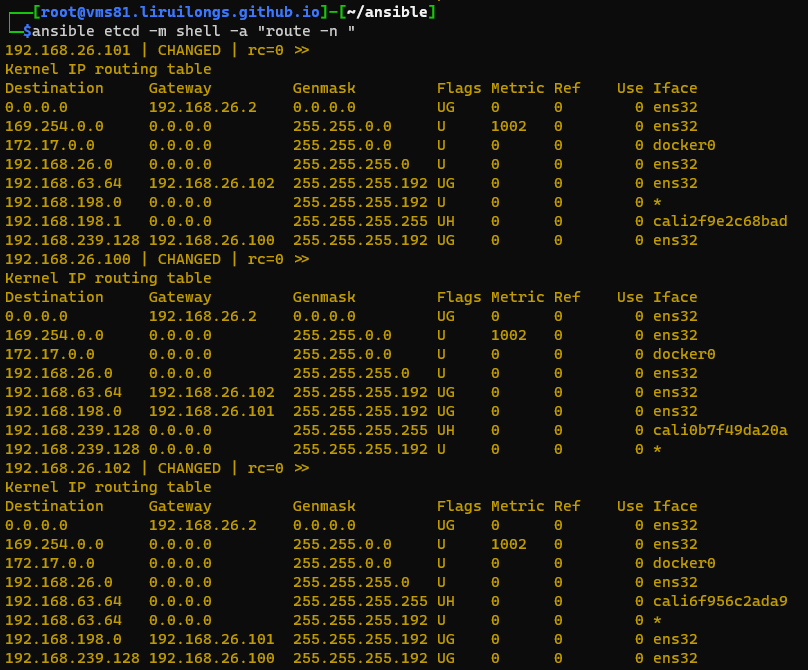

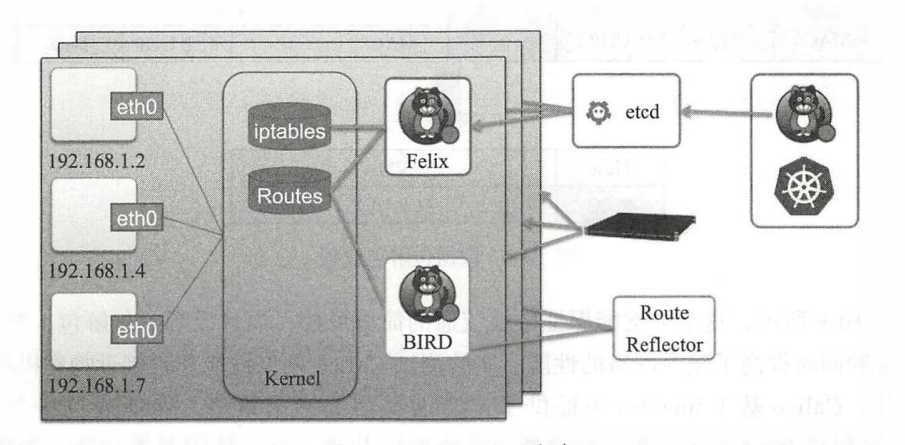

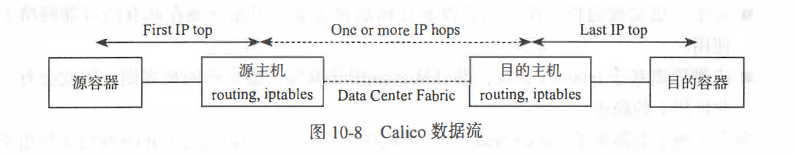

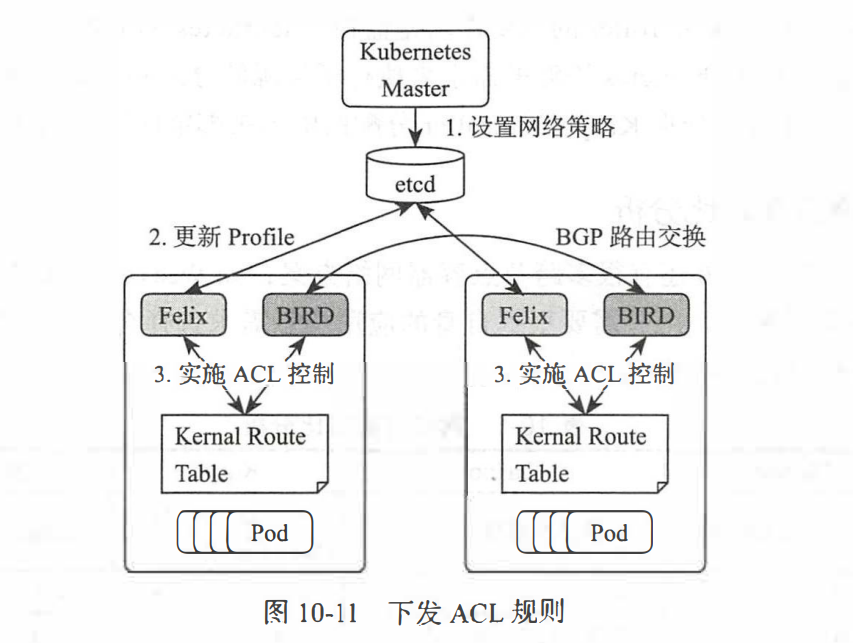

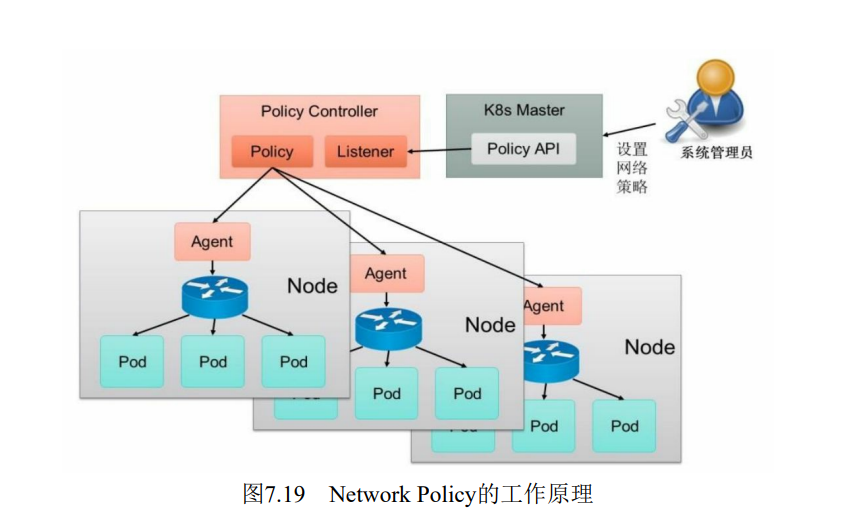

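Creating deployments, manual and automatic scaling, rolling image updates, rollbacks, and so on.

Creating and using DaemonSet, ReplicationController, and ReplicaSet.

Pod health checks and service availability checks.

Creating Services, service discovery, and publishing services with Ingress and similar.

Using Calico for cross-host container communication in a K8s cluster.

Using NetworkPolicy to implement K8s network policies.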


Password and configuration management

Managing passwords for multiple images

Environment preparation

Pull the required images

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible node -m ping
    192.168.26.83 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }
    192.168.26.82 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible node -m shell -a "docker pull hub.c.163.com/library/mysql:latest"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible node -m shell -a "docker pull hub.c.163.com/library/wordpress:latest"

Prepare the learning environment by creating a new namespace.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$dir=k8s-secret-create;mkdir $dir;cd $dir
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get ns
    NAME                      STATUS   AGE
    default                   Active   66d
    kube-node-lease           Active   66d
    kube-public               Active   66d
    kube-system               Active   66d
    liruilong                 Active   65d
    liruilong-pod-create      Active   58d
    liruilong-volume-create   Active   16d

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl create ns liruilong-secret-create
    namespace/liruilong-secret-create created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl config set-context $(kubectl config current-context) --namespace=liruilong-secret-create
    Context "context1" modified.
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl config view | grep namespace
        namespace: default
        namespace: liruilong-secret-create
        namespace: kube-system
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl config get-contexts
    CURRENT   NAME       CLUSTER    AUTHINFO            NAMESPACE
              cluster1                                  default
    *         context1   cluster1   kubernetes-admin1   liruilong-secret-create
              context2                                  kube-system
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$

mysqlpod: create a pod from the mysql image

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl run mysqlpod --image=hub.c.163.com/library/mysql:latest --image-pull-policy=IfNotPresent --dry-run=client -o yaml >mysqlpod.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$vim mysqlpod.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$cat mysqlpod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: mysqlpod
      name: mysqlpod
    spec:
      containers:
      - image: hub.c.163.com/library/mysql:latest
        imagePullPolicy: IfNotPresent
        name: mysqlpod
        resources: {}
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: liruilong
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl apply -f mysqlpod.yaml
    pod/mysqlpod created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get pods -o wide
    NAME       READY   STATUS    RESTARTS   AGE   IP               NODE                         NOMINATED NODE   READINESS GATES
    mysqlpod   1/1     Running   0          19s   10.244.171.190   vms82.liruilongs.github.io   <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$

Client test

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$yum -y install mariadb
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$mysql -uroot -pliruilong -h10.244.171.190
    Welcome to the MariaDB monitor.  Commands end with ; or \g.
    Your MySQL connection id is 3
    Server version: 5.7.18 MySQL Community Server (GPL)

    Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    MySQL [(none)]> quit
    Bye
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$

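secret

Creating a secret

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl describe pod mysqlpod | grep -A 2 Env
        Environment:
          MYSQL_ROOT_PASSWORD:  liruilong
        Mounts:
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$

The password above is in plaintext, which is dangerous in a real production environment, so it should be kept in a secret rather than written directly into the pod definition.

A secret is mainly used to store passwords and is created from key-value pairs. The pairs can be specified directly on the command line or read from a file.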

Creating a secret from the command line

View the existing secrets

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get sa
    NAME      SECRETS   AGE
    default   1         46m
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get secrets
    NAME                  TYPE                                  DATA   AGE
    default-token-7q2qj   kubernetes.io/service-account-token   3      46m
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$

Create the secret

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl create secret generic mysecl --from-literal=mysqlpassword=liruilong --from-literal=rqpassword=rq
    secret/mysecl created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get secrets
    NAME                  TYPE                                  DATA   AGE
    default-token-7q2qj   kubernetes.io/service-account-token   3      49m
    mysecl                Opaque                                2      9s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$
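The same secret could also be described declaratively. The following is a minimal sketch, assuming the `stringData` field of the v1 Secret API (plaintext values that the API server encodes on write). The function name `render_secret_manifest` is hypothetical and only prints the manifest; note that a manifest written this way contains the plaintext password, so it should be handled as carefully as the password itself.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Secret manifest roughly equivalent to
#   kubectl create secret generic <name> --from-literal=mysqlpassword=... --from-literal=rqpassword=...
render_secret_manifest() {
  local name="$1" mysql_pw="$2" rq_pw="$3"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
type: Opaque
stringData:
  mysqlpassword: "${mysql_pw}"
  rqpassword: "${rq_pw}"
EOF
}

# Print the manifest for the secret used in this post.
render_secret_manifest mysecl liruilong rq
# To apply it against a cluster (not run here):
#   render_secret_manifest mysecl liruilong rq | kubectl apply -f -
```

Printing the manifest first makes it easy to review before piping it to `kubectl apply -f -`.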

Three commonly used Secret types are:

Opaque

kubernetes.io/dockerconfigjson

kubernetes.io/service-account-token

View the details

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl describe secrets mysecl
    Name:         mysecl
    Namespace:    liruilong-secret-create
    Labels:       <none>
    Annotations:  <none>

    Type:  Opaque

    Data
    ====
    mysqlpassword:  9 bytes
    rqpassword:     2 bytes
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get secrets mysecl -o yaml
    apiVersion: v1
    data:
      mysqlpassword: bGlydWlsb25n
      rqpassword: cnE=
    kind: Secret
    metadata:
      creationTimestamp: "2021-12-12T02:45:20Z"
      name: mysecl
      namespace: liruilong-secret-create
      resourceVersion: "1594980"
      selfLink: /api/v1/namespaces/liruilong-secret-create/secrets/mysecl
      uid: 05a99a7c-c7f0-48ac-9f67-32eb52ed1558
    type: Opaque

The values are only base64-encoded, so decoding them recovers the password.

    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$echo bGlydWlsb25n | base64 -d
    liruilong┌──[root@vms81.liruilongs.github.io]-[~]
    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$echo cnE= | base64 -d
    rq┌──[root@vms81.liruilongs.github.io]-[~]

Decode directly

    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$kubectl get secrets mysecl -o jsonpath='{.data.mysqlpassword}' | base64 -d
    liruilong┌──[root@vms81.liruilongs.github.io]-[~]
    └─$
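The round trip between a plaintext value and what appears under `.data` can be reproduced locally without a cluster. This is a small sketch, assuming a `base64` command that accepts `-d` (as GNU coreutils does); the helper names `encode_secret_value` and `decode_secret_value` are hypothetical and not part of kubectl.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that mirror how Secret values are stored under .data.

# Encode a plaintext value the way it appears in `kubectl get secret -o yaml`.
encode_secret_value() {
  printf '%s' "$1" | base64
}

# Decode a value copied from .data back to plaintext.
decode_secret_value() {
  printf '%s' "$1" | base64 -d
}

encode_secret_value liruilong   # prints bGlydWlsb25n, as in the YAML output of mysecl
decode_secret_value cnE=        # prints rq without a trailing newline
```

Using `printf '%s'` rather than `echo` avoids encoding a trailing newline into the value.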

Creating a secret from a file

Secrets are usually created from the command line and seldom from a file.

Account and password file

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$tee env.txt <<-'EOF'
    > user=liruilong
    > password1=redhat
    > password2=redhat
    > EOF
    user=liruilong
    password1=redhat
    password2=redhat
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$ls
    env.txt  mysqlpod.yaml

Create the secret with --from-env-file; each key-value pair in the file becomes one entry.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl create secret generic mysecret1 --from-env-file=env.txt
    secret/mysecret1 created

View what was created

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get secrets
    NAME                  TYPE                                  DATA   AGE
    default-token-7q2qj   kubernetes.io/service-account-token   3      6h34m
    mysecl                Opaque                                2      5h45m
    mysecret1             Opaque                                3      32s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl describe secrets mysecret1
    Name:         mysecret1
    Namespace:    liruilong-secret-create
    Labels:       <none>
    Annotations:  <none>

    Type:  Opaque

    Data
    ====
    password1:  6 bytes
    password2:  6 bytes
    user:       9 bytes
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$

A secret can also be created with --from-file; the file name becomes the key and the file content becomes the value.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl create secret generic mysecret2 --from-file=/etc/hosts
    secret/mysecret2 created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get secrets mysecret2 -o jsonpath='{.data.hosts}' | base64 -d
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.26.81 vms81.liruilongs.github.io vms81
    192.168.26.82 vms82.liruilongs.github.io vms82
    192.168.26.83 vms83.liruilongs.github.io vms83
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$

Using a secret

A secret can be consumed as a volume or as an environment variable.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl create secret generic mysecl --from-literal=mysqlpassword=liruilong --from-literal=rqpassword=rq
    secret/mysecl created

Here we use this secret, which was created earlier.

Using a secret as an environment variable

In the yaml file, the password variable takes its value from the secret: mysqlpodargs.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: mysqlpod
      name: mysqlpod
    spec:
      containers:
      - image: hub.c.163.com/library/mysql:latest
        imagePullPolicy: IfNotPresent
        name: mysqlpod
        resources: {}
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysecl
              key: mysqlpassword
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Create the pod

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl apply -f mysqlpodargs.yaml
    pod/mysqlpod created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get pods -o wide
    NAME       READY   STATUS              RESTARTS   AGE   IP       NODE                         NOMINATED NODE   READINESS GATES
    mysqlpod   0/1     ContainerCreating   0          15s   <none>   vms83.liruilongs.github.io   <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get pods -o wide
    NAME       READY   STATUS    RESTARTS   AGE   IP             NODE                         NOMINATED NODE   READINESS GATES
    mysqlpod   1/1     Running   0          21s   10.244.70.19   vms83.liruilongs.github.io   <none>           <none>

Test the login

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$mysql -uroot -h10.244.70.19 -pliruilong
    Welcome to the MariaDB monitor.  Commands end with ; or \g.
    Your MySQL connection id is 3
    Server version: 5.7.18 MySQL Community Server (GPL)

    Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    MySQL [(none)]>

Using a secret as a volume

The pod file is nginxsecret.yaml. Secrets are not usually used this way.

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: nginxsecret
      name: nginxsecret
    spec:
      volumes:
      - name: v1
        secret:
          secretName: mysecl
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: nginxsecret
        resources: {}
        volumeMounts:
        - name: v1
          mountPath: /data
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Creating the pod writes each key of the secret as a file under the /data directory in the pod.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl apply -f nginxsecret.yaml
    pod/nginxsecret created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get pods
    NAME          READY   STATUS    RESTARTS   AGE
    nginxsecret   1/1     Running   0          41s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl exec -it nginxsecret -- bash
    root@nginxsecret:/
    bin   data  docker-entrypoint.d   etc   lib    media  opt   root  sbin  sys  usr
    boot  dev   docker-entrypoint.sh  home  lib64  mnt    proc  run   srv   tmp  var
    root@nginxsecret:/
    mysqlpassword  rqpassword
    root@nginxsecret:/data
    exit

If subPath is added, only the specified key is written, as a single file at the mount path: nginxsecretsubPth.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: nginxsecret
      name: nginxsecret
    spec:
      volumes:
      - name: v1
        secret:
          secretName: mysecl
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: nginxsecret
        resources: {}
        volumeMounts:
        - name: v1
          mountPath: /data/mysql
          subPath: mysqlpassword
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Create the pod and test

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl apply -f nginxsecretsubPth.yaml
    pod/nginxsecret created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get pods
    NAME          READY   STATUS    RESTARTS   AGE
    nginxsecret   1/1     Running   0          16s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl exec -it nginxsecret -- bash
    root@nginxsecret:/
    liruilongroot@nginxsecret:/
    exit

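configmap (cm)

A configmap is also used as key-value pairs. It is usually created from the command line and can be consumed as a volume or as an environment variable.

The main difference between a configmap and a secret is that secret values are stored base64-encoded, while configmap values are stored as plain text.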

Creating a configmap (cm)

Creating from the command line

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl create configmap myconfig1 --from-literal=user=liruilong --from-literal=password=liruilong
    configmap/myconfig1 created

View what was created

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get cm
    NAME               DATA   AGE
    kube-root-ca.crt   1      7h32m
    myconfig1          2      81s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl describe configmaps myconfig1
    Name:         myconfig1
    Namespace:    liruilong-secret-create
    Labels:       <none>
    Annotations:  <none>

    Data
    ====
    password:
    ----
    liruilong
    user:
    ----
    liruilong

    BinaryData
    ====

    Events:  <none>

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get cm myconfig1 -o jsonpath='{.data.password}'
    liruilong┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get cm myconfig1 -o jsonpath='{.data.user}'
    liruilong┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$

Creating from a file

A configuration file of the kind commonly used in microservices

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$cat application.properties
    server.port=8081
    server.servlet.session.timeout=30m
    server.servlet.context-path=/
    server.tomcat.uri-encoding=utf-8
    server.tomcat.threads.max=500
    server.tomcat.basedir=/home/sang/tmp
    spring.freemarker.allow-request-override=false
    spring.freemarker.allow-session-override=true
    spring.freemarker.cache=false
    spring.freemarker.charset=UTF-8
    spring.freemarker.check-template-location=true
    spring.freemarker.content-type=text/html
    spring.freemarker.expose-request-attributes=false
    spring.freemarker.expose-session-attributes=true
    spring.freemarker.suffix=.ftl
    spring.freemarker.template-loader-path=classpath:/templates/
    spring.thymeleaf.cache=true
    spring.thymeleaf.check-template=true
    spring.thymeleaf.check-template-location=true
    spring.thymeleaf.encoding=UTF-8
    spring.thymeleaf.prefix=classpath:/templates/
    spring.thymeleaf.servlet.content-type=text/html
    spring.thymeleaf.suffix=.html
    spring.redis.database=0
    spring.redis.host=192.168.66.130
    spring.redis.port=6379
    spring.redis.password=123@456
    spring.redis.lettuce.pool.max-active=
    spring.redis.lettuce.pool.max-idle=
    spring.redis.lettuce.pool.max-wait=
    spring.redis.lettuce.pool.min-idle=
    spring.redis.lettuce.shutdown-timeout=
    spring.redis.jedis.pool.max-active=8
    spring.redis.jedis.pool.max-idle=8
    spring.redis.jedis.pool.max-wait=-1ms
    spring.redis.jedis.pool.min-idle=0

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl create configmap myconfig2 --from-file=./application.properties
    configmap/myconfig2 created

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$cat env.txt
    user=liruilong
    password1=redhat
    password2=redhat
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl create configmap myconfig3 --from-env-file=./env.txt
    configmap/myconfig3 created

View all the configmaps created

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get cm
    NAME               DATA   AGE
    kube-root-ca.crt   1      8h
    myconfig1          2      37m
    myconfig2          1      9m16s
    myconfig3          3      18s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$
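The DATA column differs because --from-file stores the whole file under one key, while --from-env-file stores one key per line. The sketch below imitates that key derivation locally. It is a simplified approximation and an assumption on my part, not kubectl's actual parser (for instance, it ignores lines without `=`); the function names `env_file_keys` and `from_file_key` are hypothetical.

```shell
#!/usr/bin/env bash
# Simplified approximation (an assumption, not kubectl's real parser):
# --from-env-file yields one key per KEY=VALUE line, skipping blank and comment lines.
env_file_keys() {
  grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$1" | grep '=' | cut -d= -f1
}

# --from-file=<path> yields a single key named after the file itself.
from_file_key() {
  basename "$1"
}

# Demonstrate with a throwaway copy of the env.txt used in this post.
tmpdir="$(mktemp -d)"
printf 'user=liruilong\npassword1=redhat\npassword2=redhat\n' > "$tmpdir/env.txt"

env_file_keys "$tmpdir/env.txt"                  # three keys, like DATA=3 for myconfig3
from_file_key "$tmpdir/application.properties"   # one key, like DATA=1 for myconfig2
rm -rf "$tmpdir"
```

When a different key name is wanted for a file, kubectl also accepts the form `--from-file=<key>=<path>`.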

Using a configmap (cm)

Using a configmap as a volume

A configmap is usually consumed as a volume, which makes it possible to keep configuration files outside the microservice image: ngingconfig.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: nginxsecret
      name: nginxsecret
    spec:
      volumes:
      - name: config
        configMap:
          name: myconfig2
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: nginxsecret
        resources: {}
        volumeMounts:
        - name: config
          mountPath: /app/java
          readOnly: true
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Test by viewing the configuration file

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl apply -f ngingconfig.yaml
    pod/nginxsecret created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get pods
    NAME          READY   STATUS    RESTARTS   AGE
    nginxsecret   1/1     Running   0          40s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl exec -it nginxsecret -- bash
    root@nginxsecret:/
    application.properties
    root@nginxsecret:/app/java
    server.port=8081
    server.servlet.session.timeout=30m
    server.servlet.context-path=/
    server.tomcat.uri-encoding=utf-8
    .........

To change the load-balancing mode of kube-proxy, edit mode: "iptables/ipvs" in its configmap. After the change, the corresponding pods must be restarted.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get cm -n kube-system
    NAME                                 DATA   AGE
    calico-config                        4      66d
    coredns                              1      66d
    extension-apiserver-authentication   6      66d
    kube-proxy                           2      66d
    kube-root-ca.crt                     1      66d
    kubeadm-config                       2      66d
    kubelet-config-1.21                  1      66d
    kubelet-config-1.22                  1      54d
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl edit cm kube-proxy -n kube-system
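The edit-then-restart sequence could be scripted roughly as follows. This is a sketch under the assumption of a kubeadm-style cluster in which kube-proxy runs as a DaemonSet named `kube-proxy` in `kube-system`; check that on your own cluster first. The helpers `run` and `switch_kube_proxy_mode` are hypothetical, and by default the script only prints the commands instead of executing them.

```shell
#!/usr/bin/env bash
# Print each command, or execute it when EXECUTE=1 is set in the environment.
run() {
  if [ "${EXECUTE:-0}" = "1" ]; then
    "$@"
  else
    printf '+ %s\n' "$*"
  fi
}

# Assumes kube-proxy is a DaemonSet named "kube-proxy" in kube-system (kubeadm default).
switch_kube_proxy_mode() {
  # 1. Edit the ConfigMap by hand and set mode to "ipvs" or "iptables".
  run kubectl -n kube-system edit configmap kube-proxy
  # 2. Recreate the kube-proxy pods so they re-read the ConfigMap.
  run kubectl -n kube-system rollout restart daemonset kube-proxy
  # 3. Wait until the restarted pods are ready.
  run kubectl -n kube-system rollout status daemonset kube-proxy
}

switch_kube_proxy_mode
```

Running it with `EXECUTE=1` would perform the steps for real, so the dry-run output is worth reading first.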

Using a configmap as an environment variable

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get configmaps myconfig3 -o yaml
    apiVersion: v1
    data:
      password1: redhat
      password2: redhat
      user: liruilong
    kind: ConfigMap
    metadata:
      creationTimestamp: "2021-12-12T10:04:42Z"
      name: myconfig3
      namespace: liruilong-secret-create
      resourceVersion: "1645816"
      selfLink: /api/v1/namespaces/liruilong-secret-create/configmaps/myconfig3
      uid: b75bef31-05a8-4d67-8d5c-dea42aedea67

Write the pod resource file

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: mysqlpod
      name: mysqlpod
    spec:
      containers:
      - image: hub.c.163.com/library/mysql:latest
        imagePullPolicy: IfNotPresent
        name: mysqlpod
        resources: {}
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: myconfig3
              key: user
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Create the pod

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl apply -f mysqlpodconfig.yaml
    pod/mysqlpod created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$kubectl get pods -o wide
    NAME       READY   STATUS    RESTARTS   AGE     IP               NODE                         NOMINATED NODE   READINESS GATES
    mysqlpod   1/1     Running   0          3m19s   10.244.171.130   vms82.liruilongs.github.io   <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$

Test it. The root password is the value of the user key, liruilong.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-secret-create]
    └─$mysql -uroot -h10.244.171.130 -pliruilong
    Welcome to the MariaDB monitor.  Commands end with ; or \g.
    Your MySQL connection id is 3
    Server version: 5.7.18 MySQL Community Server (GPL)

    Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    MySQL [(none)]>

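deployment

Deployment is a concept introduced in Kubernetes v1.2 to better solve the problem of Pod orchestration. To that end, a Deployment uses a Replica Set internally. Judging by its role and purpose, its YAML definition, and its command-line operations, a Deployment can be regarded as an upgrade of the RC (ReplicationController); the two are more than 90% similar.

The biggest improvement of a Deployment over an RC is that we can see the progress of the Pod "deployment" at any time. Creating a Pod, scheduling it, binding it to a node, and starting its containers on the target Node all take time, so the target state of N running Pod replicas is really the end result of a continuously changing deployment process.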

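Typical use cases for Deployments:

Create a Deployment to roll out a ReplicaSet. The ReplicaSet creates Pods in the background. Check the rollout status of the ReplicaSet to see whether it succeeded.

Declare the new state of the Pods by updating the PodTemplateSpec of the Deployment. A new ReplicaSet is created, and the Deployment moves Pods from the old ReplicaSet to the new one at a controlled rate.

Roll back to an earlier Deployment revision if the current state of the Deployment is not stable. Each rollback updates the revision of the Deployment.

Scale up the Deployment to handle more load.

Pause the Deployment to apply several changes to its PodTemplateSpec, then resume it to start a new rollout.

Use the status of the Deployment to determine whether a rollout has stalled.

Clean up older ReplicaSets that are no longer needed.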

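ReplicaSet

The purpose of a ReplicaSet is to maintain a stable set of replica Pods running at any given time. It is therefore commonly used to guarantee the availability of a specified number of identical Pods.

How a ReplicaSet works

A ReplicaSet is defined by a set of fields, including:

a selector that identifies the Pods it can acquire,

a number indicating how many replicas it should maintain, and

a Pod template that specifies the Pods to create when new ones are needed to meet the replica count.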

Prepare the learning environment

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$dir=k8s-deploy-create;mkdir $dir;cd $dir
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get ns
    NAME              STATUS   AGE
    default           Active   78m
    kube-node-lease   Active   79m
    kube-public       Active   79m
    kube-system       Active   79m
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl create ns liruilong-deploy-create
    namespace/liruilong-deploy-create created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl config set-context $(kubectl config current-context) --namespace=liruilong-deploy-create
    Context "kubernetes-admin@kubernetes" modified.
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl config view | grep namespace
        namespace: liruilong-deploy-create
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$

Creating a deployment from a yaml file

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl create deployment web1 --image=nginx --dry-run=client -o yaml > ngixndeplog.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$vim ngixndeplog.yaml

ngixndeplog.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: web1
      name: web1
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: web1
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: web1
        spec:
          containers:
          - image: nginx
            name: nginx
            ports:
            - containerPort: 80
            resources: {}
    status: {}

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl apply -f ngixndeplog.yaml
    deployment.apps/web1 created

View the created deployment

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get deploy -o wide
    NAME   READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES   SELECTOR
    web1   2/3     3            2           37s   nginx        nginx    app=web1

View the created replicaSet

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get rs -o wide
    NAME              DESIRED   CURRENT   READY   AGE     CONTAINERS   IMAGES   SELECTOR
    web1-66b5fd9bc8   3         3         3       4m28s   nginx        nginx    app=web1,pod-template-hash=66b5fd9bc8

View the created pods

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get pod -o wide
    NAME                    READY   STATUS    RESTARTS   AGE     IP               NODE                         NOMINATED NODE   READINESS GATES
    web1-66b5fd9bc8-2wpkr   1/1     Running   0          3m45s   10.244.171.131   vms82.liruilongs.github.io   <none>           <none>
    web1-66b5fd9bc8-9lxh2   1/1     Running   0          3m45s   10.244.171.130   vms82.liruilongs.github.io   <none>           <none>
    web1-66b5fd9bc8-s9w7g   1/1     Running   0          3m45s   10.244.70.3      vms83.liruilongs.github.io   <none>           <none>

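Scaling Pods out and in

In a real production system, we often need to scale a service out, and we may also need to reduce the number of service instances when resources are tight or the workload drops. The Scale mechanism of a Deployment/RC handles both cases. Kubernetes provides a manual mode and an automatic mode for scaling Pods.

In manual mode, running the kubectl scale command sets the number of Pod replicas of a Deployment/RC in a single step.

In automatic mode, the user specifies a performance metric or a custom business metric together with a range for the number of Pod replicas, and the system adjusts the count within that range as the metric changes.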

Manual mode

Modify from the command line: kubectl scale deployment web1 --replicas=2

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl scale deployment web1 --replicas=2
    deployment.apps/web1 scaled
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get pod -o wide
    NAME                    READY   STATUS    RESTARTS   AGE     IP               NODE                         NOMINATED NODE   READINESS GATES
    web1-66b5fd9bc8-2wpkr   1/1     Running   0          8m19s   10.244.171.131   vms82.liruilongs.github.io   <none>           <none>
    web1-66b5fd9bc8-s9w7g   1/1     Running   0          8m19s   10.244.70.3      vms83.liruilongs.github.io   <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$

Modify with edit: kubectl edit deployment web1

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl edit deployment web1
    deployment.apps/web1 edited
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get pod -o wide
    NAME                    READY   STATUS              RESTARTS   AGE     IP               NODE                         NOMINATED NODE   READINESS GATES
    web1-66b5fd9bc8-2wpkr   1/1     Running             0          9m56s   10.244.171.131   vms82.liruilongs.github.io   <none>           <none>
    web1-66b5fd9bc8-9lnds   0/1     ContainerCreating   0          6s      <none>           vms82.liruilongs.github.io   <none>           <none>
    web1-66b5fd9bc8-s9w7g   1/1     Running             0          9m56s   10.244.70.3      vms83.liruilongs.github.io   <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$

Modify the yaml file

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$sed -i 's/replicas: 3/replicas: 2/' ngixndeplog.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl apply -f ngixndeplog.yaml
    deployment.apps/web1 configured
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get pod -o wide
    NAME                    READY   STATUS    RESTARTS   AGE   IP               NODE                         NOMINATED NODE   READINESS GATES
    web1-66b5fd9bc8-2wpkr   1/1     Running   0          12m   10.244.171.131   vms82.liruilongs.github.io   <none>           <none>
    web1-66b5fd9bc8-s9w7g   1/1     Running   0          12m   10.244.70.3      vms83.liruilongs.github.io   <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$
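The sed command above only matches when the file currently says `replicas: 3`. A slightly more general variant, sketched here with a hypothetical helper named `set_replicas`, rewrites whatever number is present. It writes through a temporary file instead of using `sed -i`, on the assumption that this is more portable across sed implementations.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set_replicas FILE COUNT
# Rewrites every "replicas: <number>" line in FILE, keeping its indentation.
set_replicas() {
  local file="$1" count="$2" tmp
  tmp="$(mktemp)"
  sed -E "s/^([[:space:]]*replicas:)[[:space:]]*[0-9]+/\1 ${count}/" "$file" > "$tmp" \
    && mv "$tmp" "$file"
}

# Demonstrate on a throwaway fragment rather than the real ngixndeplog.yaml.
demo="$(mktemp)"
printf 'spec:\n  replicas: 3\n  selector: {}\n' > "$demo"
set_replicas "$demo" 2
cat "$demo"
rm -f "$demo"
```

After editing the real file this way, `kubectl apply -f ngixndeplog.yaml` applies the change as in the listing above.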

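HPA automatic scaling

Starting with Kubernetes v1.1, a controller named Horizontal Pod Autoscaler (HPA) was added to scale Pods out and in automatically based on CPU utilization.

The HPA controller periodically checks the CPU utilization of the target Pods, at the interval defined by the kube-controller-manager startup flag --horizontal-pod-autoscaler-sync-period on the Master (default 30s in early releases, 15s in recent ones). When the conditions are met, it adjusts the number of Pod replicas in the ReplicationController or Deployment to match the user-defined average Pod CPU utilization. Pod CPU utilization comes from the metrics-server component, so metrics-server must be installed beforehand.

HPA can scale dynamically based on memory, CPU, or concurrency.

An HPA can be created quickly with the kubectl autoscale command or from a yaml configuration file. Before creating an HPA, a Deployment/RC object must already exist, and the Pods in that Deployment/RC must define a resources.requests.cpu request. If that value is not set, CPU utilization cannot be computed for the Pods and the HPA will not work properly.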
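The scaling decision follows the formula given in the Kubernetes HPA documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / targetMetricValue), bounded by the configured minimum and maximum. The sketch below reproduces only that arithmetic in shell; it deliberately ignores the controller's tolerance band and stabilization behaviour, and the function name `desired_replicas` is hypothetical.

```shell
#!/usr/bin/env bash
# desired_replicas CURRENT_REPLICAS CURRENT_CPU_PERCENT TARGET_CPU_PERCENT MIN MAX
# Simplified model of the HPA calculation (no tolerance, no stabilization window).
desired_replicas() {
  local current="$1" usage="$2" target="$3" min="$4" max="$5"
  # Integer ceiling of current * usage / target.
  local desired=$(( (current * usage + target - 1) / target ))
  [ "$desired" -lt "$min" ] && desired="$min"
  [ "$desired" -gt "$max" ] && desired="$max"
  echo "$desired"
}

desired_replicas 2 120 80 2 10   # 2 * 120 / 80 = 3
desired_replicas 2 400 50 1 5    # 16 before bounding, capped at the maximum of 5
desired_replicas 3 0 50 1 5      # 0 before bounding, raised to the minimum of 1
```

The percentages are utilization relative to resources.requests.cpu, which is why the request must be set on the Pods.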

Set up metrics-server monitoring

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$kubectl top nodes
    NAME                         CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    vms81.liruilongs.github.io   401m         20%    1562Mi          40%
    vms82.liruilongs.github.io   228m         11%    743Mi           19%
    vms83.liruilongs.github.io   221m         11%    720Mi           18%

Configuring HPA

Set the replica count to a minimum of 2 and a maximum of 10, with a CPU utilization target of 80%.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl autoscale deployment web1 --min=2 --max=10 --cpu-percent=80
    horizontalpodautoscaler.autoscaling/web1 autoscaled
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get hpa
    NAME   REFERENCE         TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
    web1   Deployment/web1   <unknown>/80%   2         10        2          15s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl delete hpa web1
    horizontalpodautoscaler.autoscaling "web1" deleted
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$

Fixing the current CPU usage showing as unknown: for now I have no solution for this. ngixndeplog.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: web1
      name: web1
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: web1
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: web1
        spec:
          containers:
          - image: nginx
            name: nginx
            ports:
            - containerPort: 80
            resources:
              limits:
                cpu: 500m
              requests:
                cpu: 200m

Testing HPA

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$cat ngixndeplog.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
      name: nginxdep
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx
      strategy: {}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: nginx
        spec:
          containers:
          - image: nginx
            name: web
            resources:
              requests:
                cpu: 100m
          restartPolicy: Always

Set up the HPA: kubectl autoscale deployment nginxdep --max=5 --cpu-percent=50

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get deployments.apps
    NAME       READY   UP-TO-DATE   AVAILABLE   AGE
    nginxdep   2/2     2            2           8m8s
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl autoscale deployment nginxdep --max=5 --cpu-percent=50
    horizontalpodautoscaler.autoscaling/nginxdep autoscaled
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get pods -o wide
    NAME                        READY   STATUS    RESTARTS   AGE   IP               NODE                         NOMINATED NODE   READINESS GATES
    nginxdep-645bf755b9-27hzn   1/1     Running   0          97s   10.244.171.140   vms82.liruilongs.github.io   <none>           <none>
    nginxdep-645bf755b9-cb57p   1/1     Running   0          97s   10.244.70.10     vms83.liruilongs.github.io   <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get hpa -o wide
    NAME       REFERENCE             TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
    nginxdep   Deployment/nginxdep   <unknown>/50%   1         5         2          21s
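For the yaml route mentioned earlier, an HPA equivalent to the autoscale command might look like the manifest printed below. This is a sketch assuming the `autoscaling/v1` API, with the minimum of 1 taken from the MINPODS column above; the function name `render_hpa_manifest` is hypothetical and only prints the manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render_hpa_manifest DEPLOYMENT MIN MAX CPU_PERCENT
# Prints an autoscaling/v1 HorizontalPodAutoscaler targeting the named Deployment.
render_hpa_manifest() {
  local deploy="$1" min="$2" max="$3" cpu="$4"
  cat <<EOF
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: ${deploy}
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ${deploy}
  minReplicas: ${min}
  maxReplicas: ${max}
  targetCPUUtilizationPercentage: ${cpu}
EOF
}

render_hpa_manifest nginxdep 1 5 50
# To apply it against a cluster (not run here):
#   render_hpa_manifest nginxdep 1 5 50 | kubectl apply -f -
```

Keeping the HPA in a file makes the limits reviewable alongside the Deployment it targets.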

Create a svc, then simulate calls to it

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl expose --name=nginxsvc deployment nginxdep --port=80
    service/nginxsvc exposed
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
    └─$kubectl get svc -o wide
    NAME       TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE   SELECTOR
    nginxsvc   ClusterIP   10.104.147.65   <none>        80/TCP    9s    app=nginx

Test calling the svc

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible 192.168.26.83 -m shell -a "curl http://10.104.147.65"
    192.168.26.83 | CHANGED | rc=0 >>
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
    html { color-scheme: light dark; }
    body { width: 35em; margin: 0 auto;
    font-family: Tahoma, Verdana, Arial, sans-serif; }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>

    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>

    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100   615  100   615    0     0   304k      0 --:--:-- --:--:-- --:--:--  600k

Install httpd-tools (which provides the ab load-testing tool) and simulate calls

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible 192.168.26.83 -m shell -a "yum install httpd-tools -y"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ansible 192.168.26.83 -m shell -a "ab -t 600 -n 1000000 -c 1000 http://10.104.147.65/" &
    [1] 123433
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Observe how the pods change

deployment: robustness test

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl scale deployment nginxdep --replicas=3
    deployment.apps/nginxdep scaled
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl get pods -o wide
    NAME                        READY   STATUS    RESTARTS        AGE   IP               NODE                         NOMINATED NODE   READINESS GATES
    nginxdep-645bf755b9-27hzn   1/1     Running   1 (3m19s ago)   47m   10.244.171.141   vms82.liruilongs.github.io   <none>           <none>
    nginxdep-645bf755b9-4dkpp   1/1     Running   0               30s   10.244.171.144   vms82.liruilongs.github.io   <none>           <none>
    nginxdep-645bf755b9-vz5qt   1/1     Running   0               30s   10.244.70.11     vms83.liruilongs.github.io   <none>           <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Shut down vms83.liruilongs.github.io. After a while, all the pods end up running on vms82.liruilongs.github.io.

```bash
┌──[root@vms81.liruilongs.github.io]-[~]
└─$kubectl get nodes
NAME                         STATUS     ROLES                  AGE   VERSION
vms81.liruilongs.github.io   Ready      control-plane,master   47h   v1.22.2
vms82.liruilongs.github.io   Ready      <none>                 47h   v1.22.2
vms83.liruilongs.github.io   NotReady   <none>                 47h   v1.22.2
┌──[root@vms81.liruilongs.github.io]-[~]
└─$kubectl get pods -o wide
NAME                        READY   STATUS        RESTARTS      AGE     IP               NODE                         NOMINATED NODE   READINESS GATES
nginxdep-645bf755b9-27hzn   1/1     Running       1 (20m ago)   64m     10.244.171.141   vms82.liruilongs.github.io   <none>           <none>
nginxdep-645bf755b9-4dkpp   1/1     Running       0             17m     10.244.171.144   vms82.liruilongs.github.io   <none>           <none>
nginxdep-645bf755b9-9hzf2   1/1     Running       0             9m48s   10.244.171.145   vms82.liruilongs.github.io   <none>           <none>
nginxdep-645bf755b9-vz5qt   1/1     Terminating   0             17m     10.244.70.11     vms83.liruilongs.github.io   <none>           <none>
┌──[root@vms81.liruilongs.github.io]-[~]
└─$kubectl get pods -o wide
NAME                        READY   STATUS    RESTARTS      AGE   IP               NODE                         NOMINATED NODE   READINESS GATES
nginxdep-645bf755b9-27hzn   1/1     Running   1 (27m ago)   71m   10.244.171.141   vms82.liruilongs.github.io   <none>           <none>
nginxdep-645bf755b9-4dkpp   1/1     Running   0             24m   10.244.171.144   vms82.liruilongs.github.io   <none>           <none>
nginxdep-645bf755b9-9hzf2   1/1     Running   0             16m   10.244.171.145   vms82.liruilongs.github.io   <none>           <none>
┌──[root@vms81.liruilongs.github.io]-[~]
└─$kubectl top pods
NAME                        CPU(cores)   MEMORY(bytes)
nginxdep-645bf755b9-27hzn   0m           4Mi
nginxdep-645bf755b9-4dkpp   0m           1Mi
nginxdep-645bf755b9-9hzf2   0m           1Mi
┌──[root@vms81.liruilongs.github.io]-[~]
└─$
```

When vms83.liruilongs.github.io starts up again, the pods do not move back to it.

```bash
┌──[root@vms81.liruilongs.github.io]-[~]
└─$kubectl get nodes
NAME                         STATUS   ROLES                  AGE   VERSION
vms81.liruilongs.github.io   Ready    control-plane,master   2d    v1.22.2
vms82.liruilongs.github.io   Ready    <none>                 2d    v1.22.2
vms83.liruilongs.github.io   Ready    <none>                 2d    v1.22.2
┌──[root@vms81.liruilongs.github.io]-[~]
└─$kubectl get pods -o wide
NAME                        READY   STATUS    RESTARTS      AGE   IP               NODE                         NOMINATED NODE   READINESS GATES
nginxdep-645bf755b9-27hzn   1/1     Running   1 (27m ago)   71m   10.244.171.141   vms82.liruilongs.github.io   <none>           <none>
nginxdep-645bf755b9-4dkpp   1/1     Running   0             24m   10.244.171.144   vms82.liruilongs.github.io   <none>           <none>
nginxdep-645bf755b9-9hzf2   1/1     Running   0             16m   10.244.171.145   vms82.liruilongs.github.io   <none>           <none>
┌──[root@vms81.liruilongs.github.io]-[~]
└─$
```

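deployment - updating and rolling back images

When a service in the cluster needs an upgrade, we would have to stop all Pods that belong to it, pull the new image version, and create new Pods. In a large cluster this becomes a challenge, and stopping everything first and then upgrading step by step leaves the service unavailable for a long time.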

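Kubernetes provides rolling upgrades to solve this problem. If the Pods were created through a Deployment, you can modify the Deployment's Pod definition (spec.template) or image name at runtime and apply the change to the Deployment object; the system then updates the Deployment automatically. If an error occurs during the update, a rollback restores the previous Pod version.

Environment preparation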

```bash
┌──[root@vms81.liruilongs.github.io]-[~]
└─$kubectl scale deployment nginxdep --replicas=5
deployment.apps/nginxdep scaled
```

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible node -m shell -a "docker pull nginx:1.9"
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible node -m shell -a "docker pull nginx:1.7.9"
```

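Updating the image through the deployment

The pod image now needs to be updated to nginx 1.9. We can set the new image name on the Deployment with `kubectl set image deployment/<deployment-name> <container-name>=nginx:1.9 --record`.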

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl set image deployment/nginxdep web=nginx:1.9 --record
Flag --record has been deprecated, --record will be removed in the future
deployment.apps/nginxdep image updated
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl get pods
NAME                        READY   STATUS              RESTARTS      AGE
nginxdep-59d7c6b6f-6hdb8    0/1     ContainerCreating   0             26s
nginxdep-59d7c6b6f-bd5z2    0/1     ContainerCreating   0             26s
nginxdep-59d7c6b6f-jb2j7    1/1     Running             0             26s
nginxdep-59d7c6b6f-jd5df    0/1     ContainerCreating   0             4s
nginxdep-645bf755b9-27hzn   1/1     Running             1 (51m ago)   95m
nginxdep-645bf755b9-4dkpp   1/1     Running             0             48m
nginxdep-645bf755b9-hkcqx   1/1     Running             0             18m
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl get pods
NAME                        READY   STATUS              RESTARTS      AGE
nginxdep-59d7c6b6f-6hdb8    0/1     ContainerCreating   0             51s
nginxdep-59d7c6b6f-bd5z2    1/1     Running             0             51s
nginxdep-59d7c6b6f-jb2j7    1/1     Running             0             51s
nginxdep-59d7c6b6f-jd5df    0/1     ContainerCreating   0             29s
nginxdep-59d7c6b6f-prfzd    0/1     ContainerCreating   0             14s
nginxdep-645bf755b9-27hzn   1/1     Running             1 (51m ago)   96m
nginxdep-645bf755b9-4dkpp   1/1     Running             0             49m
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl get pods
NAME                       READY   STATUS    RESTARTS   AGE
nginxdep-59d7c6b6f-6hdb8   1/1     Running   0          2m28s
nginxdep-59d7c6b6f-bd5z2   1/1     Running   0          2m28s
nginxdep-59d7c6b6f-jb2j7   1/1     Running   0          2m28s
nginxdep-59d7c6b6f-jd5df   1/1     Running   0          2m6s
nginxdep-59d7c6b6f-prfzd   1/1     Running   0          111s
```

The AGE column shows the rolling upgrade in progress: nginx goes from latest to 1.9, and then (in the next step) to 1.7.9.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl set image deployment/nginxdep web=nginx:1.7.9 --record
Flag --record has been deprecated, --record will be removed in the future
deployment.apps/nginxdep image updated
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl get pods
NAME                        READY   STATUS    RESTARTS   AGE
nginxdep-66587778f6-9jqfz   1/1     Running   0          4m37s
nginxdep-66587778f6-jbsww   1/1     Running   0          5m2s
nginxdep-66587778f6-lwkpg   1/1     Running   0          5m1s
nginxdep-66587778f6-tmd4l   1/1     Running   0          4m41s
nginxdep-66587778f6-v9f28   1/1     Running   0          5m2s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl describe pods nginxdep-66587778f6-jbsww | grep Image:
    Image:          nginx:1.7.9
```
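The output above reports that `--record` is deprecated. A possible replacement, sketched here under assumptions, is to set the `kubernetes.io/change-cause` annotation explicitly after changing the image. The helper name `update_image` and the `KUBECTL` override variable are hypothetical and only exist to keep the sketch self-contained; the annotation text is free-form.

```shell
#!/bin/sh
# KUBECTL is a hypothetical override so the helper can be dry-run (e.g. KUBECTL=echo).
KUBECTL="${KUBECTL:-kubectl}"

# update_image DEPLOYMENT CONTAINER IMAGE
# Changes the image, records a human-readable change cause, then waits for the rollout.
update_image() {
  deploy=$1
  container=$2
  image=$3
  $KUBECTL set image "deployment/$deploy" "$container=$image" &&
  $KUBECTL annotate "deployment/$deploy" \
    "kubernetes.io/change-cause=set image $container=$image" --overwrite &&
  $KUBECTL rollout status "deployment/$deploy"
}
```

For the deployment in these notes the call would be `update_image nginxdep web nginx:1.9`; the annotation then appears in the CHANGE-CAUSE column of `kubectl rollout history`.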

You can pause the rollout with `kubectl rollout pause deployment nginxdep`, make several changes, and then resume, which is useful for more complex updates.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl rollout pause deployment nginxdep
deployment.apps/nginxdep paused
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl get deployments -o wide
NAME       READY   UP-TO-DATE   AVAILABLE   AGE    CONTAINERS   IMAGES        SELECTOR
nginxdep   5/5     5            5           147m   web          nginx:1.7.9   app=nginx
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl set image deployment/nginxdep web=nginx
deployment.apps/nginxdep image updated
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl rollout history deployment nginxdep
deployment.apps/nginxdep
REVISION  CHANGE-CAUSE
4         kubectl set image deployment/nginxdep web=nginx:1.9 --record=true
5         kubectl set image deployment/nginxdep web=nginx:1.9 --record=true
6         kubectl set image deployment/nginxdep web=nginx:1.9 --record=true

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl rollout resume deployment nginxdep
deployment.apps/nginxdep resumed
```

deployment - rollback

This works much like git: you can roll back to any revision ID.

View the revision history

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl rollout history deployment nginxdep
deployment.apps/nginxdep
REVISION  CHANGE-CAUSE
1         kubectl set image deployment/nginxdep nginxdep=nginx:1.9 --record=true
2         kubectl set image deployment/nginxdep web=nginx:1.9 --record=true
3         kubectl set image deployment/nginxdep web=nginx:1.7.9 --record=true

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl get deployments nginxdep -o wide
NAME       READY   UP-TO-DATE   AVAILABLE   AGE    CONTAINERS   IMAGES        SELECTOR
nginxdep   5/5     5            5           128m   web          nginx:1.7.9   app=nginx
```

Roll back to a revision

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl rollout undo deployment nginxdep --to-revision=2
deployment.apps/nginxdep rolled back
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl get pods
NAME                        READY   STATUS              RESTARTS   AGE
nginxdep-59d7c6b6f-ctdh2    0/1     ContainerCreating   0          6s
nginxdep-59d7c6b6f-dk67c    0/1     ContainerCreating   0          6s
nginxdep-59d7c6b6f-kr74k    0/1     ContainerCreating   0          6s
nginxdep-66587778f6-9jqfz   1/1     Running             0          23m
nginxdep-66587778f6-jbsww   1/1     Running             0          23m
nginxdep-66587778f6-lwkpg   1/1     Running             0          23m
nginxdep-66587778f6-v9f28   1/1     Running             0          23m
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl get pods
NAME                        READY   STATUS              RESTARTS   AGE
nginxdep-59d7c6b6f-7j9z7    0/1     ContainerCreating   0          37s
nginxdep-59d7c6b6f-ctdh2    1/1     Running             0          59s
nginxdep-59d7c6b6f-dk67c    1/1     Running             0          59s
nginxdep-59d7c6b6f-f2sb4    0/1     ContainerCreating   0          21s
nginxdep-59d7c6b6f-kr74k    1/1     Running             0          59s
nginxdep-66587778f6-jbsww   1/1     Running             0          24m
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$
```

View the details of a revision

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl rollout history deployment nginxdep --revision=4
deployment.apps/nginxdep with revision #4
Pod Template:
  Labels:       app=nginx
        pod-template-hash=59d7c6b6f
  Annotations:  kubernetes.io/change-cause: kubectl set image deployment/nginxdep web=nginx:1.9 --record=true
  Containers:
   web:
    Image:      nginx:1.9
    Port:       <none>
    Host Port:  <none>
    Requests:
      cpu:      100m
    Environment:        <none>
    Mounts:     <none>
  Volumes:      <none>
```

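Rolling update parameters

maxSurge: the maximum number of pods that may be created above the desired replica count during an update. Old and new pods together therefore never exceed (100% + the configured value) of the replicas.

maxUnavailable: the maximum number of pods that may be unavailable during an update, which limits how many old pods can be removed at once.

Both can be changed with the following command: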

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl edit deployments nginxdep
```

Default values

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$kubectl get deployments nginxdep -o yaml | grep -A 5 strategy:
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-deploy-create]
└─$
```
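To see what the 25% defaults mean for the 5-replica deployment used here, the percentages can be resolved to absolute pod counts. This is a minimal sketch, assuming the documented Kubernetes rounding (a percentage `maxSurge` rounds up, a percentage `maxUnavailable` rounds down); the function names are made up for illustration.

```shell
#!/bin/sh
# Resolve a percentage maxSurge against the replica count (rounded up).
resolve_max_surge() {
  echo $(( ($1 * $2 + 99) / 100 ))
}

# Resolve a percentage maxUnavailable against the replica count (rounded down).
resolve_max_unavailable() {
  echo $(( $1 * $2 / 100 ))
}

replicas=5
surge=$(resolve_max_surge "$replicas" 25)
unavailable=$(resolve_max_unavailable "$replicas" 25)

echo "at most $((replicas + surge)) pods exist during the rollout"
echo "at least $((replicas - unavailable)) pods stay available"
```

With 5 replicas this gives at most 7 pods in total and at least 4 available, which is consistent with the 7 pods listed during the nginx:1.9 rollout earlier.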

Recreate: with spec.strategy.type: Recreate, the Deployment kills all running Pods first and then creates the new ones.

RollingUpdate: with spec.strategy.type: RollingUpdate, the Deployment replaces Pods gradually. The two parameters under spec.strategy.rollingUpdate (maxUnavailable and maxSurge) control how the rolling update proceeds.

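daemonset

A DaemonSet ensures that every node runs one copy of a Pod. When a node joins the cluster, a Pod is added for it. When a node is removed from the cluster, its Pod is reclaimed. Deleting a DaemonSet deletes all the Pods it created. In short, it is single-instance per node: each node runs exactly one such pod.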

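DaemonSet use cases

Some typical uses of a DaemonSet:

Running a GlusterFS or Ceph storage daemon on every node.

Running a log collector on every node, such as Fluentd or Logstash.

Running a performance monitor on every node to collect that node's metrics, such as Prometheus Node Exporter, collectd, the New Relic agent, or Ganglia gmond.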

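A simple usage is to start one DaemonSet on all nodes for each type of daemon. A slightly more complex usage is to deploy several DaemonSets for the same kind of daemon, each with different flags and different memory and CPU requirements for different hardware types. I do not fully understand this sentence yet and will look into it later.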

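The scheduling of DaemonSet Pods is similar to that of an RC. Besides using the built-in algorithm to schedule onto every node, you can use NodeSelector or NodeAffinity in the Pod definition to restrict scheduling to the nodes that match.

Learning environment preparation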

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$dir=k8s-daemonset-create;mkdir $dir;cd $dir
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl config current-context
kubernetes-admin@kubernetes
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl create ns liruilong-daemonset-create
namespace/liruilong-daemonset-create created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl config set-context $(kubectl config current-context) --namespace=liruilong-daemonset-create
Context "kubernetes-admin@kubernetes" modified.
```

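DaemonSets in kubeadm

A Kubernetes environment installed with kubeadm already uses DaemonSets. calico handles networking, so every node needs it; kube-proxy is the proxy component, used for things like load balancing.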

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl get ds -A
NAMESPACE     NAME          DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
kube-system   calico-node   3         3         3       3            3           kubernetes.io/os=linux   4d23h
kube-system   kube-proxy    3         3         3       3            3           kubernetes.io/os=linux   4d23h
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$
```

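Creating a DaemonSet

Note that a DaemonSet manifest differs from a Deployment manifest mainly in `kind` (a DaemonSet also has no `replicas` or `strategy` field), so you can copy a Deployment template and modify it.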

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  creationTimestamp: null
  labels:
    app: myds1
  name: myds1
spec:
  selector:
    matchLabels:
      app: myds1
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: myds1
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
```

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl create deployment myds1 --image=nginx --dry-run=client -o yaml > deamonset.yaml
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$vim deamonset.yaml
```
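The manual edit in vim could also be scripted. The sketch below is an illustration only: it writes a stand-in Deployment manifest (shaped like typical `--dry-run=client -o yaml` output, which is an assumption) into a temporary directory and converts it with `sed`. The file names and the `workdir` variable are hypothetical.

```shell
#!/bin/sh
workdir=$(mktemp -d)

# Stand-in for the generated Deployment template (assumed shape).
cat > "$workdir/deploy.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: myds1
  name: myds1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myds1
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: myds1
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
status: {}
EOF

# Switch the kind and drop the fields a DaemonSet does not accept.
sed -e 's/^kind: Deployment$/kind: DaemonSet/' \
    -e '/^  replicas:/d' \
    -e '/^  strategy:/d' \
    -e '/^status:/d' \
    "$workdir/deploy.yaml" > "$workdir/daemonset.yaml"

cat "$workdir/daemonset.yaml"
```

The result has the same shape as the DaemonSet manifest shown above and could then be passed to `kubectl apply -f`.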

We create a DaemonSet while only the master node and one worker node are working. Because the master node is tainted, only one DaemonSet pod is scheduled.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl apply -f deamonset.yaml
daemonset.apps/myds1 created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl get nodes
NAME                         STATUS     ROLES                  AGE     VERSION
vms81.liruilongs.github.io   Ready      control-plane,master   4d22h   v1.22.2
vms82.liruilongs.github.io   Ready      <none>                 4d22h   v1.22.2
vms83.liruilongs.github.io   NotReady   <none>                 4d22h   v1.22.2
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl get pods
NAME          READY   STATUS    RESTARTS   AGE
myds1-fbmhp   1/1     Running   0          35s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$
```

A pod is added automatically when a node joins the cluster

When we start another machine, the newly joined node vms83.liruilongs.github.io automatically runs a DaemonSet pod.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl get nodes
NAME                         STATUS   ROLES                  AGE     VERSION
vms81.liruilongs.github.io   Ready    control-plane,master   4d22h   v1.22.2
vms82.liruilongs.github.io   Ready    <none>                 4d22h   v1.22.2
vms83.liruilongs.github.io   Ready    <none>                 4d22h   v1.22.2
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl get pods
NAME          READY   STATUS    RESTARTS   AGE
myds1-prldj   1/1     Running   0          6m13s
myds1-pvwm4   1/1     Running   0          10m
```

Running DaemonSet pods on tainted nodes

Next we modify the DaemonSet manifest again so that it tolerates tainted nodes.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  creationTimestamp: null
  labels:
    app: myds1
  name: myds1
spec:
  selector:
    matchLabels:
      app: myds1
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: myds1
    spec:
      terminationGracePeriodSeconds: 0
      tolerations:
      - operator: Exists
      containers:
      - image: nginx
        name: nginx
        resources: {}
```

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl apply -f deamonsettaint.yaml
daemonset.apps/myds1 created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl get pods
NAME          READY   STATUS              RESTARTS   AGE
myds1-8tsnz   0/1     ContainerCreating   0          3s
myds1-9l6d9   0/1     ContainerCreating   0          3s
myds1-wz44b   0/1     ContainerCreating   0          3s
```

Every node now runs one pod belonging to the DaemonSet.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl describe nodes vms81.liruilongs.github.io | grep Taint
Taints:             node-role.kubernetes.io/master:NoSchedule
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl run pod1 --image=nginx --dry-run=server -o yaml | grep -A 6 terminationGracePeriodSeconds
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
```

Of course, if we do not want every tainted node to run a DaemonSet pod, we can use the other form, which names a specific key.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  creationTimestamp: null
  labels:
    app: myds1
  name: myds1
spec:
  selector:
    matchLabels:
      app: myds1
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: myds1
    spec:
      terminationGracePeriodSeconds: 0
      tolerations:
      - operator: Exists
        key: node-role.kubernetes.io/master
        effect: "NoSchedule"
      containers:
      - image: nginx
        name: nginx
        resources: {}
```

The DaemonSet now runs on both the master and the worker nodes.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl apply -f deamonsetaint.yaml
daemonset.apps/myds1 created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-daemonset-create]
└─$kubectl get pods
NAME          READY   STATUS              RESTARTS   AGE
myds1-f7hbb   0/1     ContainerCreating   0          4s
myds1-hksp9   0/1     ContainerCreating   0          4s
myds1-nnmzp   0/1     ContainerCreating   0          4s
```

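How Daemon Pods are scheduled

A DaemonSet ensures that every eligible node runs one copy of the Pod. Normally the Kubernetes scheduler chooses the node a Pod runs on, but DaemonSet Pods are created and scheduled by the DaemonSet controller. This causes problems such as inconsistent Pod behavior and scheduling decisions that ignore Pod priority and preemption.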

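ScheduleDaemonSetPods lets you schedule DaemonSets with the default scheduler instead of the DaemonSet controller, by adding a NodeAffinity term to the DaemonSet Pods rather than a .spec.nodeName term. The default scheduler then binds the Pod to the target host.

If the DaemonSet Pod already has a node affinity configured, it is replaced (the original node affinity is taken into account before the target host is selected). The DaemonSet controller performs these operations only when creating or modifying DaemonSet Pods, and it does not change the DaemonSet's spec.template.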

```yaml
nodeAffinity:
  requiredDuringSchedulingIgnoredDuringExecution:
    nodeSelectorTerms:
    - matchFields:
      - key: metadata.name
        operator: In
        values:
        - target-host-name
```

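Communicating with Daemon Pods

Some possible patterns for communicating with the Pods in a DaemonSet:

Push: the Pods in the DaemonSet are configured to send updates to another service, such as a statistics database. They have no clients.

NodeIP and known port: the Pods in the DaemonSet can use a hostPort, so that they are reachable through the node IPs. Clients obtain the list of node IPs somehow and know the port by convention.

DNS: create a headless service with the same Pod selector, then discover the DaemonSet through the endpoints resource or by retrieving multiple A records from DNS.

Service: create a service with the same Pod selector and use it to reach a daemon on a random node (there is no way to reach a specific node).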
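The DNS pattern relies on a headless Service whose selector matches the DaemonSet's Pod labels. As a minimal sketch, the script below writes such a manifest for the `myds1` DaemonSet from these notes; the Service name, the port, and the temporary file location are assumptions made for illustration.

```shell
#!/bin/sh
workdir=$(mktemp -d)

# clusterIP: None makes the Service headless, so DNS returns the Pod IPs directly.
cat > "$workdir/myds1-headless.yaml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: myds1-headless   # hypothetical name
spec:
  clusterIP: None
  selector:
    app: myds1           # same label the DaemonSet Pods carry
  ports:
  - port: 80
    targetPort: 80
EOF

cat "$workdir/myds1-headless.yaml"
```

After applying the file with `kubectl apply -f`, a DNS lookup of the Service name from inside the cluster should return one A record per ready DaemonSet Pod.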

Updating a DaemonSet

If node labels are changed, the DaemonSet promptly adds Pods to newly matching nodes and deletes Pods from nodes that no longer match.

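You can modify the Pods that a DaemonSet creates, but not every Pod field can be updated. The next time a node is created (even one with the same name), the DaemonSet controller still uses the original template.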

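You can delete a DaemonSet. If you use kubectl with the `--cascade=orphan` option, the Pods are left on the nodes. If you then create a new DaemonSet with the same selector, it adopts the existing Pods. If any Pods need to be replaced, the DaemonSet replaces them according to its updateStrategy.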

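Alternatives to DaemonSet

Init scripts

It is certainly possible to start daemon processes directly on a node (for example with init, upstartd, or systemd). Running them through a DaemonSet, however, has several advantages:

A DaemonSet can monitor daemons and manage their logs in the same way as any other application you run.

Daemons and applications use the same configuration language and tools (such as Pod templates and kubectl).

Running daemons in containers with resource limits increases the isolation between daemons and application containers. This can also be achieved by running the daemon in a container but not in a Pod, for example by starting it directly with Docker.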

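Bare Pods

It is possible to create Pods directly and pin them to a particular node. A DaemonSet, however, replaces Pods that are deleted or terminated for any reason, such as node failure, routine node maintenance, or a kernel upgrade. For this reason you should use a DaemonSet rather than creating individual Pods.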

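Static Pods

It is also possible to create Pods by writing a file into a designated directory watched by the kubelet. These are called static Pods. Unlike a DaemonSet, static Pods cannot be managed with kubectl or other Kubernetes API clients. Static Pods do not depend on the API server, which makes them useful when bootstrapping a new cluster. Static Pods may also be deprecated in the future.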

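Deployments

DaemonSets are very similar to Deployments: both create Pods, and those Pods run processes that are not expected to terminate (for example web servers or storage servers). Use a Deployment for stateless services such as frontends, where scaling the replica count and rolling out updates smoothly matter much more than controlling exactly which host a Pod runs on. Use a DaemonSet when a copy of the Pod must always run on all or on specific hosts, and when the DaemonSet provides node-level functionality that allows other Pods to run correctly on that node.

For example, network plugins usually include a component that runs as a DaemonSet. That component makes sure cluster networking works on the node where it runs.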

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl get ds -A
NAMESPACE     NAME          DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
kube-system   calico-node   3         3         3       3            3           kubernetes.io/os=linux   4d23h
kube-system   kube-proxy    3         3         3       3            3           kubernetes.io/os=linux   4d23h
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$
```

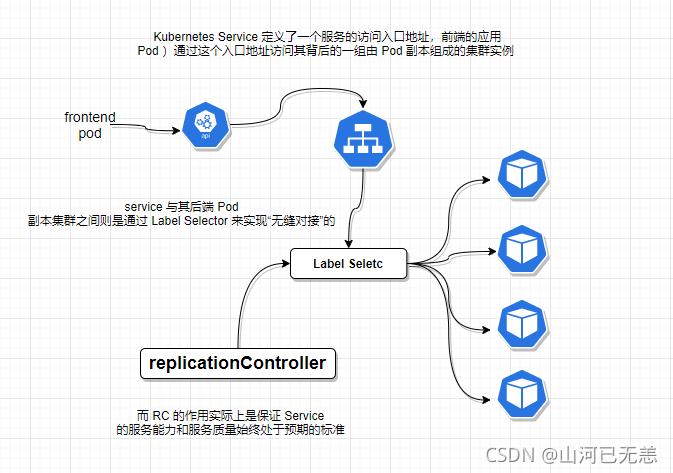

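ReplicationController (RC)

A ReplicationController ensures that a specified number of Pod replicas are running at any time. In other words, it makes sure that a Pod or a homogeneous set of Pods is always available.

The recommended way to set up replication is a Deployment that configures a ReplicaSet. RC is a very old controller that is rarely used today; it is covered here for reference only and is very similar to a Deployment.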

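How a ReplicationController works

When there are too many Pods, the ReplicationController terminates the extras. When there are too few, it starts new ones. Unlike manually created Pods, Pods created by a ReplicationController are replaced automatically when they fail, are deleted, or are terminated. For example, after disruptive maintenance such as a kernel upgrade, your Pods are recreated on the node. For this reason you should use a ReplicationController even if your application needs only a single Pod. A ReplicationController resembles a process supervisor, but instead of supervising individual processes on a single node it supervises multiple Pods across multiple nodes.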

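Alternatives to ReplicationController

ReplicaSet

ReplicaSet is the next-generation ReplicationController and supports the newer set-based label selectors. It is mainly used by Deployment as a mechanism to orchestrate Pod creation, deletion, and updates. Note that we recommend using a Deployment instead of using a ReplicaSet directly, unless you need custom update orchestration or do not need updates at all.

A Deployment is a higher-level API object that updates its underlying ReplicaSets and their Pods in a way similar to kubectl rolling-update. If you want this rolling update functionality, a Deployment is recommended because, unlike kubectl rolling-update, it is declarative, runs server-side, and has additional features.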

Creating an RC

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginxrc
spec:
  replicas: 2
  selector:
    app: nginx
  template:
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx
        name: web
        resources:
          requests:
            cpu: 100m
      restartPolicy: Always
```

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl apply -f rc.yaml
replicationcontroller/nginxrc created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl get pods
NAME            READY   STATUS              RESTARTS   AGE
nginxrc-5szqd   0/1     ContainerCreating   0          15s
nginxrc-tstxl   1/1     Running             0          15s
```

Changing the RC replica count

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl scale rc nginxrc --replicas=5
replicationcontroller/nginxrc scaled
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl get pods
NAME            READY   STATUS              RESTARTS   AGE
nginxrc-5szqd   1/1     Running             0          84s
nginxrc-6ptpt   0/1     ContainerCreating   0          3s
nginxrc-pd6qw   0/1     ContainerCreating   0          3s
nginxrc-tntbd   0/1     ContainerCreating   0          3s
nginxrc-tstxl   1/1     Running             0          84s
```

Deleting the RC

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl delete -f rc.yaml
replicationcontroller "nginxrc" deleted
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl get pods
NAME            READY   STATUS        RESTARTS   AGE
nginxrc-5szqd   1/1     Terminating   0          110s
nginxrc-6ptpt   1/1     Terminating   0          29s
nginxrc-pd6qw   1/1     Terminating   0          29s
nginxrc-tntbd   1/1     Terminating   0          29s
nginxrc-tstxl   1/1     Terminating   0          110s
```

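ReplicaSet (RS)

The purpose of a ReplicaSet is to maintain a stable set of Pod replicas running at any given time. It is therefore often used to guarantee the availability of a given number of identical Pods.

How a ReplicaSet works

A ReplicaSet is defined by a set of fields, including:

a selector that identifies the Pods it can acquire,

a number of replicas that says how many Pods it should maintain,

a Pod template that describes the new Pods to create when the replica count has to be met.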

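A ReplicaSet fulfills its purpose by creating and deleting Pods as needed to reach the desired replica count. When it needs to create new Pods, it uses the Pod template provided.

A ReplicaSet is linked to its Pods through the Pods' metadata.ownerReferences field, which names the resource that owns the current object. Every Pod acquired by a ReplicaSet carries the owning ReplicaSet's identity in its ownerReferences field. Through this link the ReplicaSet knows the state of the Pods it maintains and plans its actions accordingly.

A ReplicaSet uses its selector to identify the Pods to acquire. If a Pod has no OwnerReference, or its OwnerReference is not a controller, and it matches a ReplicaSet's selector, that ReplicaSet acquires the Pod immediately.

When to use a ReplicaSet

A ReplicaSet ensures that a specified number of Pod replicas are running at any time. A Deployment, however, is a higher-level concept that manages ReplicaSets and provides declarative updates to Pods along with many other useful features. We therefore recommend using a Deployment instead of using a ReplicaSet directly, unless you need custom update orchestration or do not need updates at all.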

Creating an RS

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend
  labels:
    app: guestbook
    tier: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      tier: frontend
  template:
    metadata:
      labels:
        tier: frontend
    spec:
      containers:
      - name: nginx
        image: nginx
```

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl apply -f rs.yaml
replicaset.apps/frontend created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl get pods
NAME             READY   STATUS              RESTARTS   AGE
frontend-8r27p   1/1     Running             0          33s
frontend-lk46p   0/1     ContainerCreating   0          33s
frontend-njjt2   0/1     ContainerCreating   0          33s
```

Changing the RS replica count

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl scale rs frontend --replicas=1
replicaset.apps/frontend scaled
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$kubectl get pods
NAME             READY   STATUS        RESTARTS   AGE
frontend-8r27p   1/1     Running       0          60s
frontend-lk46p   1/1     Terminating   0          60s
frontend-njjt2   1/1     Terminating   0          60s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-ReplicationController]
└─$
```

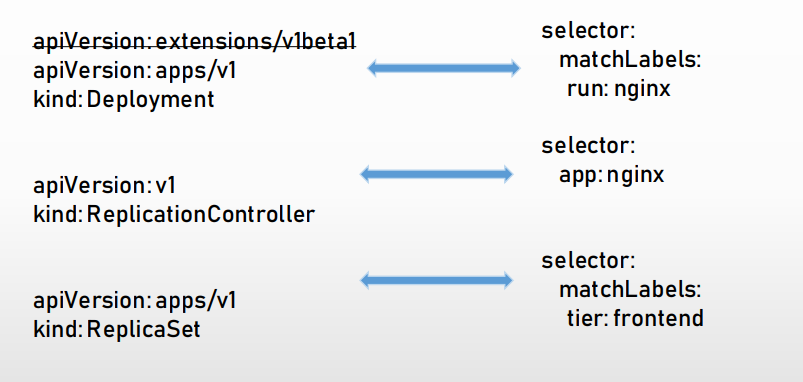

Differences among the three in their configuration files

Changing the replica count

```bash
kubectl scale deployment nginx --replicas=20
kubectl scale rs rs1 --replicas=4
kubectl scale rc nginx --replicas=20
```

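Pod health checks and service availability checks

Purpose of health checks

Probes keep pods robust. When a pod dies, the deployment creates a new one. But if the pod keeps running while something inside it has gone wrong, the deployment cannot detect it. A probe is therefore needed to check whether the pod is actually serving requests.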

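Probe types

Kubernetes checks Pod health with two kinds of probes, LivenessProbe and ReadinessProbe. The kubelet runs both periodically to diagnose container health. Both are configured per container in the Pod spec, for example in a Deployment's Pod template.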

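| Probe type | Description |
| --- | --- |
| LivenessProbe | Determines whether the container is alive (Running state). If the LivenessProbe finds the container unhealthy, the kubelet kills it and then acts according to the container's restart policy. If a container has no LivenessProbe, the kubelet treats its LivenessProbe result as always Success. |
| ReadinessProbe | Determines whether the container's service is available (Ready state). Only Pods in the Ready state can receive requests. For Pods managed by a Service, the association between the Service and the Pod endpoints also depends on whether the Pod is Ready. If Ready becomes False at runtime, the system removes the Pod from the Service's backend Endpoint list and adds it back once it returns to Ready. This ensures that clients of the Service are never forwarded to an unavailable Pod instance. |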

Check methods and parameters

Both LivenessProbe and ReadinessProbe can be configured with one of the following three mechanisms.

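| Method | Description |
| --- | --- |
| ExecAction | Runs a command inside the container. A return code of 0 means the container is healthy. |
| TCPSocketAction | Performs a TCP check against the container's IP address and port. If a TCP connection can be established, the container is healthy. |
| HTTPGetAction | Calls HTTP GET on the container's IP address, port, and path. A response status code of at least 200 and below 400 means the container is healthy. |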

Each probe method takes parameters such as initialDelaySeconds and timeoutSeconds, with the following meanings.

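| Parameter | Description |
| --- | --- |
| initialDelaySeconds | How long to wait after the container starts before the first health check, in seconds. |
| timeoutSeconds | How long to wait for a response after the health check request is sent, in seconds. On timeout the kubelet treats the probe as failed; for a liveness probe this leads to a container restart. |
| periodSeconds | How often the probe runs. Defaults to 10 seconds, minimum 1 second. |
| successThreshold | Minimum number of consecutive successes required, after a failure, for the probe to count as successful. Defaults to 1 and must be 1 for liveness. Minimum 1. |
| failureThreshold | Number of times Kubernetes retries when a probe fails after the Pod has started. Giving up on a liveness probe means restarting the container; giving up on a readiness probe means marking the Pod as not ready. Defaults to 3, minimum 1. |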
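These parameters combine into a rough reaction time. As an approximation that ignores timeoutSeconds and timing jitter, the kubelet needs failureThreshold consecutive failures spaced periodSeconds apart before it acts. The function name below is hypothetical; the values 10 and 3 are the periodSeconds default and the failureThreshold default.

```shell
#!/bin/sh
# Approximate upper bound, in seconds, between a container turning unhealthy
# and the kubelet acting on it: one failed probe per period, repeated
# failureThreshold times in a row.
probe_reaction_window() {
  period=$1
  failure_threshold=$2
  echo $(( period * failure_threshold ))
}

probe_reaction_window 10 3
```

With the defaults this prints 30, so a failing container may keep running for up to about half a minute before it is restarted or marked not ready.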

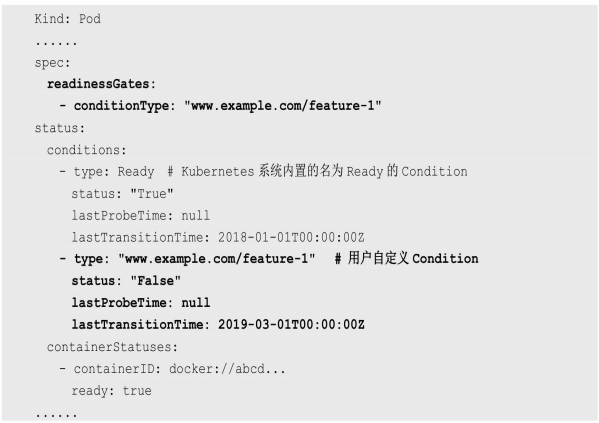

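The ReadinessProbe mechanism may not be enough for some complex applications to decide whether the service inside a container is available.

For this reason Kubernetes introduced the PodReady++ feature in version 1.11 to extend readiness checking. It reached GA in version 1.14 under the name Pod Readiness Gates.

With Pod Readiness Gates, users can attach custom readiness conditions to a Pod to help Kubernetes decide when the Pod becomes available (Ready). For a custom condition to take effect, the user must provide an external controller that sets the corresponding Condition status.

Readiness gates are set in the `readinessGates` field of the Pod definition. The following example sets a new readiness gate of type www.example.com/feature-1: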
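As a minimal sketch of what such a definition could look like, the script below writes a Pod manifest with one readiness gate. Only the `readinessGates` field and the condition type come from the text; the Pod name, container, and file location are hypothetical.

```shell
#!/bin/sh
workdir=$(mktemp -d)

cat > "$workdir/readiness-gate-pod.yaml" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: readiness-gate-demo   # hypothetical name
spec:
  readinessGates:
  - conditionType: "www.example.com/feature-1"
  containers:
  - name: web
    image: nginx
EOF

cat "$workdir/readiness-gate-pod.yaml"
```

A Pod created from this manifest would stay not Ready until an external controller sets the `www.example.com/feature-1` condition to True in the Pod's status.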


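The status of the new custom Condition is set by the user's external controller and defaults to False. Kubernetes marks the Pod as available (Ready is True) only when all readinessGates conditions are True.

I do not fully understand this yet and need to look into it later.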

Learning environment preparation

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$mkdir liveness-probe
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$cd liveness-probe/
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl create ns liveness-probe
namespace/liveness-probe created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl config current-context
kubernetes-admin@kubernetes
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl config set-context $(kubectl config current-context) --namespace=liveness-probe
Context "kubernetes-admin@kubernetes" modified.
```

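LivenessProbe

Determines whether the container is alive (Running state). If the LivenessProbe finds the container unhealthy, the kubelet kills it and then acts according to the container's restart policy.

ExecAction method: command

Runs a command inside the container. A return code of 0 means the container is healthy.

Manifest definition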

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$cat liveness-probe.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-liveness
  name: pod-liveness
spec:
  containers:
  - args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 10
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5
    image: busybox
    imagePullPolicy: IfNotPresent
    name: pod-liveness
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

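Run this Pod. Once it is created, the container creates the file, sleeps for 30s, deletes the file, and sleeps again. The liveness probe checks whether the file exists.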

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl apply -f liveness-probe.yaml
pod/pod-liveness created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME           READY   STATUS    RESTARTS     AGE
pod-liveness   1/1     Running   1 (8s ago)   41s
```

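After more than 30s the file has been deleted, so the health check fails and the container is restarted according to the restart policy. Note that the last command in `args` runs for at most 10 seconds, so the container's main process also exits on its own shortly after the file is removed; a longer final sleep would show more clearly that the probe alone triggers the restart.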

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME           READY   STATUS    RESTARTS      AGE
pod-liveness   1/1     Running   2 (34s ago)   99s
```

After 99s it has already restarted for the second time.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible 192.168.26.83 -m shell -a "docker ps | grep pod-liveness"
192.168.26.83 | CHANGED | rc=0 >>
00f4182c014e   7138284460ff                                        "/bin/sh -c 'touch /…"   6 seconds ago   Up 5 seconds   k8s_pod-liveness_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_0
01c5cfa02d8c   registry.aliyuncs.com/google_containers/pause:3.5   "/pause"                 7 seconds ago   Up 6 seconds   k8s_POD_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_0
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl get pods
NAME           READY   STATUS    RESTARTS   AGE
pod-liveness   1/1     Running   0          25s
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl get pods
NAME           READY   STATUS    RESTARTS      AGE
pod-liveness   1/1     Running   1 (12s ago)   44s
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible 192.168.26.83 -m shell -a "docker ps | grep pod-liveness"
192.168.26.83 | CHANGED | rc=0 >>
1eafd7e8a12a   7138284460ff                                        "/bin/sh -c 'touch /…"   15 seconds ago   Up 14 seconds   k8s_pod-liveness_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_1
01c5cfa02d8c   registry.aliyuncs.com/google_containers/pause:3.5   "/pause"                 47 seconds ago   Up 47 seconds   k8s_POD_pod-liveness_liveness-probe_81b4b086-fb28-4657-93d0-bd23e67f980a_0
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

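Looking at the container IDs in docker on the node, the application container's ID differs before and after, which confirms that the container was killed and restarted (the pause container stays the same).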

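HTTPGetAction method

Calls HTTP GET on the container's IP address, port, and path. A response status code of at least 200 and below 400 means the container is healthy.

Create the manifest, which also shows how the related parameters are used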

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$cat liveness-probe-http.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-livenss-probe
  name: pod-livenss-probe
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod-livenss-probe
    livenessProbe:
      failureThreshold: 3
      httpGet:
        path: /index.html
        port: 80
        scheme: HTTP
      initialDelaySeconds: 10
      periodSeconds: 10
      successThreshold: 1
      timeoutSeconds: 10
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

Run the Pod. This probe requests the default nginx welcome page.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$vim liveness-probe-http.yaml
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl apply -f liveness-probe-http.yaml
pod/pod-livenss-probe created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME                READY   STATUS    RESTARTS   AGE
pod-livenss-probe   1/1     Running   0          15s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it pod-livenss-probe -- rm /usr/share/nginx/html/index.html
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME                READY   STATUS    RESTARTS     AGE
pod-livenss-probe   1/1     Running   1 (1s ago)   2m31s
```

Once the welcome page is deleted, the request returns an error, the check fails, and the container is restarted.

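TCPSocketAction method

Performs a TCP check against the container's IP address and port. If a TCP connection can be established, the container is healthy.

Manifest definition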

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$cat liveness-probe-tcp.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-livenss-probe
  name: pod-livenss-probe
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod-livenss-probe
    livenessProbe:
      failureThreshold: 3
      tcpSocket:
        port: 8080
      initialDelaySeconds: 10
      periodSeconds: 10
      successThreshold: 1
      timeoutSeconds: 10
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

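The probe connects to port 8080, but nothing is listening on that port, so no connection can be established. The check fails and the container is restarted.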

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl apply -f liveness-probe-tcp.yaml
pod/pod-livenss-probe created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME                READY   STATUS    RESTARTS   AGE
pod-livenss-probe   1/1     Running   0          8s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods
NAME                READY   STATUS    RESTARTS     AGE
pod-livenss-probe   1/1     Running   1 (4s ago)   44s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$
```

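ReadinessProbe

Determines whether the container's service is available (Ready state). Only Pods in the Ready state can receive requests; otherwise they cannot be accessed.

ExecAction method: command

Manifest definition, using a lifecycle hook to create the file that the probe checks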

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$cat readiness-probe.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod-liveness
  name: pod-liveness
spec:
  containers:
  - readinessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5
    image: nginx
    imagePullPolicy: IfNotPresent
    name: pod-liveness
    resources: {}
    lifecycle:
      postStart:
        exec:
          command: ["/bin/sh", "-c", "touch /tmp/healthy"]
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

Create three nginx Pods, then create a Service (SVC) from them for testing:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$sed 's/pod-liveness/pod-liveness-1/' readiness-probe.yaml | kubectl apply -f -
pod/pod-liveness-1 created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$sed 's/pod-liveness/pod-liveness-2/' readiness-probe.yaml | kubectl apply -f -
pod/pod-liveness-2 created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods -o wide
NAME             READY   STATUS    RESTARTS   AGE    IP             NODE                         NOMINATED NODE   READINESS GATES
pod-liveness     1/1     Running   0          3m1s   10.244.70.50   vms83.liruilongs.github.io   <none>           <none>
pod-liveness-1   1/1     Running   0          2m     10.244.70.51   vms83.liruilongs.github.io   <none>           <none>
pod-liveness-2   1/1     Running   0          111s   10.244.70.52   vms83.liruilongs.github.io   <none>           <none>
```

Change the home page text of each Pod so the responses can be told apart:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$serve=pod-liveness
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it $serve -- sh -c "cat /usr/share/nginx/html/index.html"
pod-liveness
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$serve=pod-liveness-1
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$serve=pod-liveness-2
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it $serve -- sh -c "echo $serve > /usr/share/nginx/html/index.html"
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$
```

Change the labels so that all three Pods carry the same label, run=pod-liveness:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods --show-labels
NAME             READY   STATUS    RESTARTS   AGE   LABELS
pod-liveness     1/1     Running   0          15m   run=pod-liveness
pod-liveness-1   1/1     Running   0          14m   run=pod-liveness-1
pod-liveness-2   1/1     Running   0          14m   run=pod-liveness-2
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl edit pods pod-liveness-1
pod/pod-liveness-1 edited
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl edit pods pod-liveness-2
pod/pod-liveness-2 edited
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods --show-labels
NAME             READY   STATUS    RESTARTS   AGE   LABELS
pod-liveness     1/1     Running   0          17m   run=pod-liveness
pod-liveness-1   1/1     Running   0          16m   run=pod-liveness
pod-liveness-2   1/1     Running   0          16m   run=pod-liveness
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$
```

Check the file that the probe reads (the one that will be deleted later):

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it pod-liveness -- ls /tmp/
healthy
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl exec -it pod-liveness-1 -- ls /tmp/
healthy
```

Create a Service from the Pod:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl expose --name=svc pod pod-liveness --port=80
service/svc exposed
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get ep
NAME   ENDPOINTS                                         AGE
svc    10.244.70.50:80,10.244.70.51:80,10.244.70.52:80   16s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get svc
NAME   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
svc    ClusterIP   10.104.246.121   <none>        80/TCP    36s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/liveness-probe]
└─$kubectl get pods -o wide
NAME             READY   STATUS    RESTARTS   AGE   IP             NODE                         NOMINATED NODE   READINESS GATES
pod-liveness     1/1     Running   0          24m   10.244.70.50   vms83.liruilongs.github.io   <none>           <none>
pod-liveness-1   1/1     Running   0          23m   10.244.70.51   vms83.liruilongs.github.io   <none>           <none>
pod-liveness-2   1/1     Running   0          23m   10.244.70.52   vms83.liruilongs.github.io   <none>           <none>
```

Test the Service: it works, and requests are load-balanced across the three Pods.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$while true; do curl 10.104.246.121 ; sleep 1
> done
pod-liveness
pod-liveness-2
pod-liveness
pod-liveness-1
pod-liveness-2
^C
```

Delete the file and test again:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl exec -it pod-liveness -- rm -rf /tmp/
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl exec -it pod-liveness -- ls /tmp/
ls: cannot access '/tmp/': No such file or directory
command terminated with exit code 2
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$while true; do curl 10.104.246.121 ; sleep 1; done
pod-liveness-2
pod-liveness-2
pod-liveness-2
pod-liveness-1
pod-liveness-2
pod-liveness-2
pod-liveness-1
^C
```

The pod-liveness Pod no longer serves requests: its readiness probe fails, so it stops receiving traffic from the Service.

Health checks used by kubeadm

kube-apiserver.yaml uses both kinds of probe together:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep -A 8 readi
    readinessProbe:
      failureThreshold: 3
      httpGet:
        host: 192.168.26.81
        path: /readyz
        port: 6443
        scheme: HTTPS
      periodSeconds: 1
      timeoutSeconds: 15
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep -A 9 liveness
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 192.168.26.81
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

Job & CronJob

Job: batch scheduling

Kubernetes has supported batch-type applications since version 1.2. A batch task can be defined and started through the Kubernetes Job resource object.

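A batch task usually starts several compute processes in parallel (or serially) to handle a batch of work items. Once all of them have been processed, the whole batch task ends.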

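The Kubernetes documentation describes it this way: a Job creates one or more Pods and will continue to retry execution of the Pods until a specified number of them successfully terminate. As Pods successfully complete, the Job tracks the successful completions. When the specified number of successful completions is reached, the task (that is, the Job) is complete. Deleting a Job cleans up the Pods it created. Suspending a Job deletes its active Pods until the Job is resumed again.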

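In a simple case, you create one Job object in order to reliably run one Pod to completion. The Job object starts a new Pod if the first Pod fails or is deleted (for example because of a node hardware failure or a node reboot). You can also use a Job to run multiple Pods in parallel.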

With parallel batch processing in mind, Kubernetes divides Jobs into the following three types.

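| Type | Description |
| --- | --- |
| Non-parallel Jobs | Usually a Job starts only one Pod, and a new one is started only if that Pod fails. Once the Pod finishes normally, the Job ends. |
| Parallel Jobs with a fixed completion count | A parallel Job starts multiple Pods. The Job's `.spec.completions` must be set to a positive number, and the Job ends once that many Pods have finished normally. The `.spec.parallelism` parameter controls the degree of parallelism, that is, how many Pods run at the same time to process work items. |
| Parallel Jobs with a work queue | A work-queue style parallel Job needs a separate queue in which all work items are stored. `.spec.completions` cannot be set for this type of Job. |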

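We will build a demo for the first and second types; the third can wait until there is time. It really just comes down to the resource configuration parameters.

Environment setup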

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl config set-context $(kubectl config current-context) --namespace=liruiling-job-create
Context "kubernetes-admin@kubernetes" modified.
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl create ns liruiling-job-create
namespace/liruiling-job-create created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$vim myjob.yaml
```

Create a Job

Create a Job that runs echo "hello jobs":

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$cat myjob.yaml
apiVersion: batch/v1
kind: Job
metadata:
  creationTimestamp: null
  name: my-job
spec:
  template:
    metadata:
      creationTimestamp: null
    spec:
      containers:
      - command:
        - sh
        - -c
        - echo "hello jobs"
        - sleep 15
        image: busybox
        name: my-job
        resources: {}
      restartPolicy: Never
status: {}
```
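One detail of the command list above is worth checking locally. `sh -c` treats only the first argument after `-c` as the script; any further arguments become `$0`, `$1`, and so on. Listed as a separate item, `sleep 15` is therefore passed to the script as `$0` and never executed. A quick sketch that runs in any POSIX shell:

```shell
# Same argv as the container command above.
sh -c 'echo "hello jobs"' 'sleep 15'    # prints: hello jobs (returns at once)

# The extra item is only visible inside the script as $0:
sh -c 'echo "arg0=$0"' 'sleep 15'       # prints: arg0=sleep 15

# To run both steps, put them into a single script string
# (sleep shortened to 1 second here):
sh -c 'echo "hello jobs"; sleep 1'      # prints: hello jobs, then waits 1s
```

In the manifest, the equivalent would be a single list item after `-c`, such as `echo "hello jobs"; sleep 15`.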

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl apply -f myjob.yaml
job.batch/my-job created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get pods
NAME              READY   STATUS              RESTARTS   AGE
my-job--1-jdzqd   0/1     ContainerCreating   0          7s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get jobs
NAME     COMPLETIONS   DURATION   AGE
my-job   0/1           17s        17s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get pods
NAME              READY   STATUS      RESTARTS   AGE
my-job--1-jdzqd   0/1     Completed   0          24s
```

When STATUS changes to Completed, the run succeeded. Check the logs:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get jobs
NAME     COMPLETIONS   DURATION   AGE
my-job   1/1           19s        46s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl logs my-job--1-jdzqd
hello jobs
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$
```

Job configuration parameters

Job restart policy

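- Never: as long as the task has not completed, a new Pod is created to run it until the Job completes. This can produce multiple Pods.
- OnFailure: as long as the Pod has not completed, its container is restarted in place until the Job completes.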
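The difference is easiest to see with a command that always fails. The sketch below writes such a manifest to a temporary file; the Job name `my-job-onfailure`, the file name, and the `backoffLimit: 2` value are made up for illustration. With `OnFailure` the expectation is a single Pod whose RESTARTS count grows, while switching the policy to `Never` should leave several failed Pods behind.

```shell
# Write a Job manifest whose container always exits non-zero.
manifest="${TMPDIR:-/tmp}/myjob-onfailure.yaml"
cat > "$manifest" <<'EOF'
apiVersion: batch/v1
kind: Job
metadata:
  name: my-job-onfailure        # hypothetical name
spec:
  backoffLimit: 2               # give up after a few retries
  template:
    spec:
      containers:
      - name: my-job
        image: busybox
        command: ["sh", "-c", "echo 'attempt failed'; exit 1"]
      restartPolicy: OnFailure  # restart the container in the same Pod
EOF
echo "wrote $manifest"

# With a cluster available:
#   kubectl apply -f "$manifest"
#   kubectl get pods -w
```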

activeDeadlineSeconds: the maximum time allowed to run

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl explain jobs.spec | grep act
   activeDeadlineSeconds        <integer>
     may be continuously active before the system tries to terminate it; value
     given time. The actual number of pods running in steady state will be less
     false to true), the Job controller will delete all active Pods associated
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$vim myjobact.yaml
```

Create a Job that uses activeDeadlineSeconds (the maximum time allowed to run):

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$cat myjobact.yaml
apiVersion: batch/v1
kind: Job
metadata:
  creationTimestamp: null
  name: my-job
spec:
  template:
    metadata:
      creationTimestamp: null
    spec:
      activeDeadlineSeconds: 5
      containers:
      - command:
        - sh
        - -c
        - echo "hello jobs"
        - sleep 15
        image: busybox
        name: my-job
        resources: {}
      restartPolicy: Never
status: {}
```

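The task does not finish within 5 seconds, so a new Pod is created to run it again. Here `activeDeadlineSeconds` sits in the Pod template's spec, so the limit applies to each Pod rather than to the Job as a whole.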

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl delete -f myjob.yaml
job.batch "my-job" deleted
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl apply -f myjobact.yaml
job.batch/my-job created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get pods
NAME              READY   STATUS              RESTARTS   AGE
my-job--1-ddhbj   0/1     ContainerCreating   0          7s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get jobs
NAME     COMPLETIONS   DURATION   AGE
my-job   0/1           16s        16s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get pods
NAME              READY   STATUS              RESTARTS   AGE
my-job--1-ddhbj   0/1     Completed           0          23s
my-job--1-mzw2p   0/1     ContainerCreating   0          3s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get pods
NAME              READY   STATUS      RESTARTS   AGE
my-job--1-ddhbj   0/1     Completed   0          48s
my-job--1-mzw2p   0/1     Completed   0          28s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get jobs
NAME     COMPLETIONS   DURATION   AGE
my-job   0/1           55s        55s
```
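For comparison, the field that `kubectl explain jobs.spec` describes lives one level higher, directly under the Job's `spec`. Set there, the deadline bounds the whole Job: once it passes, the Job controller terminates the running Pods and marks the Job as failed with reason DeadlineExceeded instead of starting replacements. A minimal sketch of that variant, with a made-up Job name and file name and the command written as a single script string so that `sleep 15` really runs:

```shell
# Write a Job manifest with a Job-level deadline to a temporary file.
manifest="${TMPDIR:-/tmp}/myjob-deadline.yaml"
cat > "$manifest" <<'EOF'
apiVersion: batch/v1
kind: Job
metadata:
  name: my-job-deadline         # hypothetical name
spec:
  activeDeadlineSeconds: 5      # Job level: bounds the whole Job
  template:
    spec:
      containers:
      - name: my-job
        image: busybox
        command: ["sh", "-c", "echo 'hello jobs'; sleep 15"]
      restartPolicy: Never
EOF
echo "wrote $manifest"

# With a cluster available:
#   kubectl apply -f "$manifest"
#   kubectl describe job my-job-deadline
```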

Some other parameters

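- `parallelism: N`: run N Pods at a time. This value will not exceed the value of `completions`.
- `completions: M`: the number of Pods that must run successfully, that is, reach the Completed status, for the Job to end.
- `backoffLimit: N`: how many times to retry if the Job fails.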

Create a Job that runs multiple tasks in parallel

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  creationTimestamp: null
  name: my-job
spec:
  backoffLimit: 6
  completions: 6
  parallelism: 2
  template:
    metadata:
      creationTimestamp: null
    spec:
      containers:
      - command:
        - sh
        - -c
        - echo "hello jobs"
        - sleep 15
        image: busybox
        name: my-job
        resources: {}
      restartPolicy: Never
status: {}
```

Create the Job with these parameters:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl apply -f myjob-parma.yaml
job.batch/my-job created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get pods jobs
Error from server (NotFound): pods "jobs" not found
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get pods job
Error from server (NotFound): pods "job" not found
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get jobs
NAME     COMPLETIONS   DURATION   AGE
my-job   0/6           19s        19s
```

Watch the effect of the parameters: six Pods run in total, at most two at a time.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get pods
NAME              READY   STATUS              RESTARTS   AGE
my-job--1-9vvst   0/1     Completed           0          25s
my-job--1-h24cw   0/1     ContainerCreating   0          5s
my-job--1-jgq2j   0/1     Completed           0          24s
my-job--1-mbmg6   0/1     ContainerCreating   0          1s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get jobs
NAME     COMPLETIONS   DURATION   AGE
my-job   2/6           35s        35s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get jobs
NAME     COMPLETIONS   DURATION   AGE
my-job   3/6           48s        48s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get pods
NAME              READY   STATUS      RESTARTS   AGE
my-job--1-9vvst   0/1     Completed   0          91s
my-job--1-b95qv   0/1     Completed   0          35s
my-job--1-h24cw   0/1     Completed   0          71s
my-job--1-jgq2j   0/1     Completed   0          90s
my-job--1-mbmg6   0/1     Completed   0          67s
my-job--1-njbfj   0/1     Completed   0          49s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get jobs
NAME     COMPLETIONS   DURATION   AGE
my-job   6/6           76s        93s
```
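The run above can be sanity-checked with a little arithmetic. With `completions: 6` and `parallelism: 2`, the Pods finish in roughly ceil(6 / 2) = 3 rounds. A rough sketch in shell; "rounds" is only an approximation, because the Job controller starts a replacement Pod as soon as one finishes rather than waiting for a whole round:

```shell
completions=6   # .spec.completions from the manifest above
parallelism=2   # .spec.parallelism from the manifest above

# Integer ceiling division: (a + b - 1) / b
rounds=$(( (completions + parallelism - 1) / parallelism ))

echo "at most $parallelism Pods at a time, about $rounds rounds"
# prints: at most 2 Pods at a time, about 3 rounds
```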

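Hands-on: computing pi to 2000 digits

Create a Job from the command line (the commands recorded below call bpi(500), that is, 500 digits):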

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl create job job3 --image=perl --dry-run=client -o yaml -- perl -Mbignum=bpi -wle 'print bpi(500)'
apiVersion: batch/v1
kind: Job
metadata:
  creationTimestamp: null
  name: job3
spec:
  template:
    metadata:
      creationTimestamp: null
    spec:
      containers:
      - command:
        - perl
        - -Mbignum=bpi
        - -wle
        - print bpi(500)
        image: perl
        name: job3
        resources: {}
      restartPolicy: Never
status: {}
```

Pull the image on the nodes, then create the Job from the command line:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible node -m shell -a "docker pull perl"
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl create job job2 --image=perl -- perl -Mbignum=bpi -wle 'print bpi(500)'
job.batch/job2 created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-jobs-create]
└─$kubectl get pods
NAME            READY   STATUS      RESTARTS   AGE
job2--1-5jlbl   0/1     Completed   0          2m4s
```

View the output of the Job run: